Every day, it seems like a new SEO tool or toolset is launching.

I've been quite impressed with the improvements and enhancements to Raven's SEO Tools since they launched. There are so many features in Raven, but I want to focus on some of the truly unique ones that make Raven a must-have for me.

Link Research Tools

Raven has two powerful, time-saving tools in its Link Research toolset: Site Finder and Backlink Explorer. Both help me quickly assess and work through link profiles and the link landscape of a particular keyword.

Site Finder

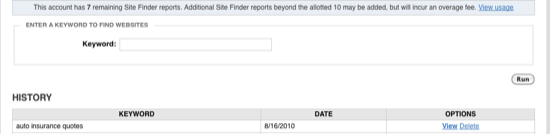

Site Finder is keyword-driven, and the reports are saved under the website profile you are working on in Raven. While the tool is fast (my auto insurance quotes example took about 6 seconds!), one workflow feature I really like is that I can run a bunch of these and go do other things within Raven rather than wait for the reports to come back.

On to using Site Finder:

To use Site Finder, just navigate to it under the Links tab, enter your keyword, and hit "Run":

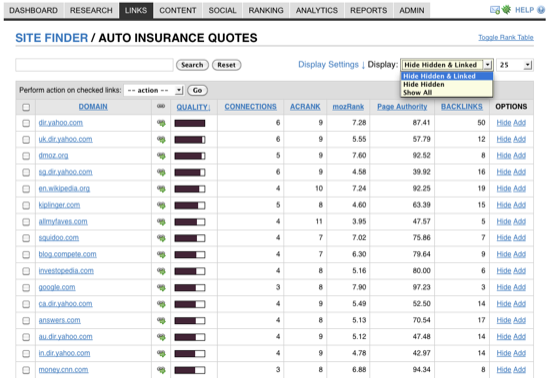

Here are the results returned for my query on auto insurance quotes:

Site Finder gives you quite a bit of data and options in an easy-to-use interface. Here's how it breaks down:

- Search Box - search for a specific domain or reset the results post-search

- Display Settings - show anywhere from 25 to 1,000 results on the page, show links that are "hidden" (links you "hid" via the Options column), or show all links with no filters

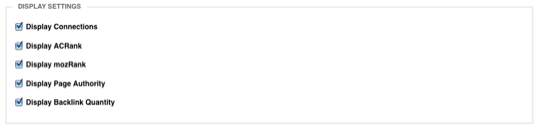

- Display Settings Option Box - click "Display Settings" and you'll get a box where you can toggle ACRank, MozRank, Page Authority, and/or Connections on and off

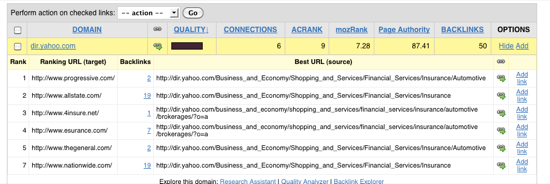

- Domain - the name of a domain that links to at least one site in the top 10 Google results. Click on the domain link to get a slick drop-down of the sites that domain is linking to

- Link Icon - click the icon to open the domain in a new window

- Connections - number of sites in the top 10 for your keyword that have a link from that domain

- ACRank - a quick, simple data point that aims to show how important a specific page is, based on referring domains (scaled 0-15, 15 being the highest). A more in-depth definition can be found here

- MozRank - SeoMoz's global link popularity score. It is modeled on PageRank, but SeoMoz says it is updated more frequently and is more precise (scaled 0-10, 10 being the highest). A more in-depth overview can be found here

- Page Authority - a predictor of how likely a page is to rank, on a 100-point logarithmic scale, independent of the page's content. The higher the better :)

- Backlinks - total number of links the domain has pointing to the top 10 Google results

- Options Tab - if you want to hide a domain from the report (maybe it's not a link you want to go after), you or your team members can click "hide" and the link will be hidden from the report. If you click "add", the link is added to the link queue in the Link Manager (more on this shortly)

- Export Options - export your report to PDF or CSV (really helpful, especially when running reports on hidden links to gauge how well a link builder is doing at deciding which links to hide)
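To make the Connections and Backlinks columns more concrete, here's a minimal Python sketch of how those two counts could be derived, assuming you already have a list of (linking URL, top-10 site) pairs for a keyword. The function name, the input format, and the sample data are all hypothetical; this is not how Raven computes its report.

```python
from collections import defaultdict
from urllib.parse import urlparse


def summarize_linking_domains(links):
    """Hypothetical sketch of Site Finder-style Connections and Backlinks counts.

    `links` is an iterable of (source_url, target_site) pairs, where each
    target_site is one of the sites ranking in the top 10 for a keyword.
    Returns {domain: (connections, backlinks)}:
      connections = number of distinct top-10 sites the domain links to
      backlinks   = total number of links from that domain into the top 10
    """
    targets = defaultdict(set)
    counts = defaultdict(int)
    for source_url, target_site in links:
        # Uses the host name, so subdomains are counted as separate domains
        domain = urlparse(source_url).netloc.lower()
        targets[domain].add(target_site)
        counts[domain] += 1
    # Domains connected to the most top-10 sites come first
    ranked = sorted(
        ((d, (len(targets[d]), counts[d])) for d in targets),
        key=lambda item: (-item[1][0], -item[1][1], item[0]),
    )
    return dict(ranked)


if __name__ == "__main__":
    sample = [
        ("http://example-directory.com/insurance", "siteA.com"),
        ("http://example-directory.com/auto", "siteB.com"),
        ("http://example-directory.com/auto?page=2", "siteB.com"),
        ("http://blog.example.org/post", "siteA.com"),
    ]
    for domain, (connections, backlinks) in summarize_linking_domains(sample).items():
        print(domain, connections, backlinks)
```

A domain with several connections is usually the interesting one, since it already links to multiple sites you're competing with for that keyword.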

So that's Site Finder. The flexibility, power, speed, and collaborative features of Site Finder make it one of my favorite tools to use.

Backlink Explorer

Researching competitors' link profiles is usually a time-consuming piece of the SEO puzzle. While it still takes time, especially on larger link profiles, Backlink Explorer delivers some pretty impressive results quickly and efficiently via a third-party tie-in to Majestic SEO.

Another nice thing about Raven is its consistent, clean user interface across the toolset. Here's the spot where you enter the domain you want to research:

Just like Site Finder, it saves the report in the history of whichever website profile you are working in. You can explore or delete it at any time:

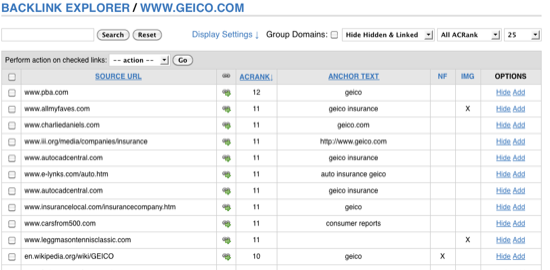

Continuing on with the auto insurance theme, I ran a quick report on GEICO:

Backlink Explorer gives you the following data points and options:

- Search Box - search for a particular domain or words within a domain

- Display Settings - group domains (really helpful for cutting down duplicate results from domains with more than one link to the site), show or hide hidden domains and domains you already have links from, filter by ACRank, and display up to 1,000 results on the page

- Display Settings Box - display or hide the no-follow, image, and date fields

- Source URL - the site the link is from

- Link Icon - open page in a new window

- ACRank - as discussed in the Site Finder section; more info here

- Anchor Text - the anchor text of the link

- No-follow - whether it's no-follow or not

- Image - whether it's an image link or not

- Options Box - hide the domain or add it to your link queue

- Export - export results, filtered or unfiltered, to CSV
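The "group domains" and ACRank filters are what make large profiles manageable. Here's a rough Python sketch of that kind of filtering applied to an exported list of backlinks, assuming each row has a source URL, anchor text, ACRank, and a no-follow flag. The `Backlink` class and `filter_and_group` function are hypothetical illustrations, not Raven's code or export format.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Backlink:
    source_url: str
    anchor_text: str
    ac_rank: int    # 0-15 scale, as described in the Site Finder section
    nofollow: bool


def filter_and_group(backlinks, min_ac_rank=0, include_nofollow=True):
    """Hypothetical sketch: filter backlinks, then keep one link per domain.

    Drops links below `min_ac_rank` and, optionally, no-follow links. For each
    remaining linking domain, keeps the highest-ACRank link plus a count of how
    many surviving links that domain has. Results are sorted by ACRank, highest first.
    """
    best = {}
    counts = {}
    for link in backlinks:
        if link.ac_rank < min_ac_rank:
            continue
        if link.nofollow and not include_nofollow:
            continue
        domain = urlparse(link.source_url).netloc.lower()
        counts[domain] = counts.get(domain, 0) + 1
        if domain not in best or link.ac_rank > best[domain].ac_rank:
            best[domain] = link
    return [(best[d], counts[d]) for d in sorted(best, key=lambda d: -best[d].ac_rank)]


if __name__ == "__main__":
    links = [
        Backlink("http://news.example.com/a", "car insurance", 7, False),
        Backlink("http://news.example.com/b", "GEICO", 5, False),
        Backlink("http://forum.example.net/t/1", "click here", 2, True),
        Backlink("http://lowvalue.example.info/x", "cheap quotes", 1, False),
    ]
    for link, count in filter_and_group(links, min_ac_rank=2, include_nofollow=False):
        print(link.source_url, link.ac_rank, count)
```

Grouping first and then reviewing one representative link per domain is the main time-saver: a domain with dozens of links only needs one decision.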

What's really great about this tool is that you can do some pretty heavy filtering to get rid of the noisy links and quickly add the good ones to your link queue. On its face it may seem like it's not that big of a time-saver, but it really is if you are combing through a large profile or multiple link profiles.

You could really buzz through some fairly thick link profiles with the filtering options and put the good links right into your link queue, for you to work on later or for a team member to work on. Once you start working with it, you'll quickly see how efficient it is, whether you work alone or with a staff.

Link Management

This is probably my favorite tool in the toolset. Before using this tool, I was using lots and lots of spreadsheets to track link building campaigns, which got to be pretty time-consuming and tough to collaborate on.

It's built into the Raven SEO Toolbar, which lets you quickly add a link to your link queue right from your browser rather than copying the website's data into a spreadsheet by hand. This is a slick feature for a one-person show, and it really sings in a collaborative link building environment. The last two spots in the toolbar show your site and your account profile name:

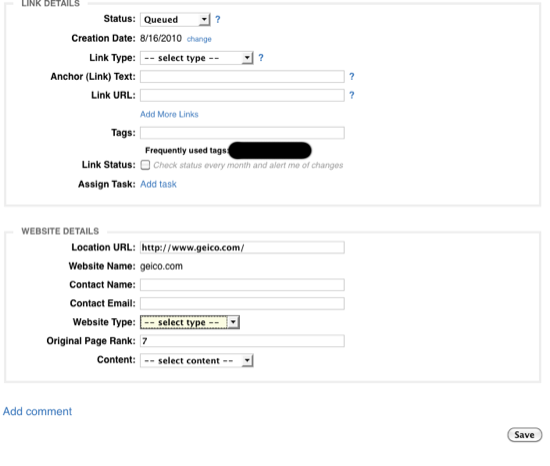

When you are researching link partners, simply click that Add Link button and you are presented with this screen:

The Link Manager in and of itself is worth the price of admission, in my opinion. Here you can:

- Set the status to queued, requested, active, inactive, ignore, or declined. Most of the time it will be "queued" if you are saving it for further handling

- Input the date the record was created

- Select the type of link (organic, paid, blog, exchange, and so on). You can even define custom types in Raven, and they will show up as options here

- Note the desired anchor text of the link (great for collaboration with link building staff members)

- Include the URL of where you'd like the link to point to

- Add more links if you might be getting more than one link from the page

- Tag the link for sorting within the link manager application

- Set it to be monitored automatically from within Raven

- Add it as a task for you or a staff member

- Raven pulls in the URL, domain name of the site, and PageRank of the page

- If available, list the contact name and email as well as the type of site it is, and even attach a note to the record

Try doing all that in a spreadsheet and a bunch of Word or text documents for notes :)

It's another solid way to save loads of time on what is probably the most time-consuming part of an SEO campaign: link building.

So that was just the toolbar portion of the Link Manager. Within your Raven account you have access to the same "add link" application that you do from the toolbar. Perhaps you have link opportunities that you or a staff member cultivated outside of Raven. You can use this form to plug them right in.

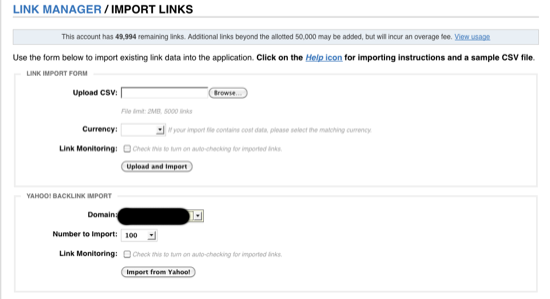

You can also import links into your Raven account.

You can upload a CSV file with up to 20 columns of data that Raven will recognize. These columns map to fields in Raven's Link Manager, so you can define all of the following (Raven gives you a handy sample CSV to work from):

- Status

- Link Type

- Link Text

- Link URL

- Website Name

- Website URL

- Website Type

- PR

- Contact Name

- Contact Email

- Contact ID

- Cost Type

- Cost

- Payment Method

- Payment Reference

- Start Date

- End Date

- Creation Date

- Comment

- Owner Name

The currently supported currencies are USD, GBP, EUR, and AUD.
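If your link data lives in another tool, a short script can reshape it into an import file. The sketch below uses Python's standard `csv.DictWriter` with the 20 column names from the list above. It assumes those names match Raven's headers exactly and that dates are written as YYYY-MM-DD; both are assumptions, so check them against Raven's sample CSV before uploading. The `build_import_csv` helper and the sample row are hypothetical.

```python
import csv
import io

# Column names copied from the list above; the exact header spelling Raven
# expects is an assumption, so compare them with Raven's sample CSV.
COLUMNS = [
    "Status", "Link Type", "Link Text", "Link URL", "Website Name",
    "Website URL", "Website Type", "PR", "Contact Name", "Contact Email",
    "Contact ID", "Cost Type", "Cost", "Payment Method", "Payment Reference",
    "Start Date", "End Date", "Creation Date", "Comment", "Owner Name",
]


def build_import_csv(rows):
    """Write rows (dicts keyed by column name) to CSV text.

    Missing fields are left blank. DictWriter raises ValueError for any key not
    in COLUMNS, which catches misspelled column names before you upload.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    csv_text = build_import_csv([
        {
            "Status": "queued",
            "Link Type": "organic",
            "Link Text": "auto insurance quotes",
            "Link URL": "http://www.example.com/quotes",
            "Website Name": "Example Directory",
            "Website URL": "http://directory.example.org",
            "Creation Date": "2011-03-01",  # date format is an assumption
        }
    ])
    print(csv_text)
```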

When you upload, you can automatically add link monitoring by checking the link monitoring box.

You can also import up to 1,000 backlinks from Yahoo! for your domain or your competitors' domains (ones you've defined in Raven).

Raven's link monitoring service will alert you if any changes occur to a link or a page the link is on. For example, you would be notified if:

- PageRank changes

- Anchor text changes

- Another link gets added to the page

- They add no-follow to your link

- The location of your link changes
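Conceptually, this kind of monitoring boils down to comparing a saved snapshot of a link with a fresh one. Here's a minimal Python sketch of that comparison for the five changes listed above. The `LinkSnapshot` fields (including using the link's index on the page as its "location") are hypothetical simplifications; Raven's actual checks aren't documented here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkSnapshot:
    pagerank: int
    anchor_text: str
    outbound_links: int  # total number of links on the page
    nofollow: bool
    position: int        # hypothetical: index of your link among the page's links


def detect_changes(old, new):
    """Return human-readable alerts for differences between two snapshots."""
    changes = []
    if new.pagerank != old.pagerank:
        changes.append(f"PageRank changed from {old.pagerank} to {new.pagerank}")
    if new.anchor_text != old.anchor_text:
        changes.append(f"Anchor text changed from {old.anchor_text!r} to {new.anchor_text!r}")
    if new.outbound_links > old.outbound_links:
        changes.append(f"{new.outbound_links - old.outbound_links} link(s) added to the page")
    if new.nofollow and not old.nofollow:
        changes.append("No-follow was added to your link")
    if new.position != old.position:
        changes.append(f"Link position changed from {old.position} to {new.position}")
    return changes


if __name__ == "__main__":
    before = LinkSnapshot(3, "auto insurance quotes", 12, False, 4)
    after = LinkSnapshot(3, "insurance", 13, True, 5)
    for alert in detect_changes(before, after):
        print(alert)
```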

I believe Raven now has about 21 different tools in its toolset. This one tool, for me, is well worth the subscription cost. It really does save quite a bit of time, and there's really nothing else like it on the market that I've seen (in terms of functionality, collaboration, and ease of use).

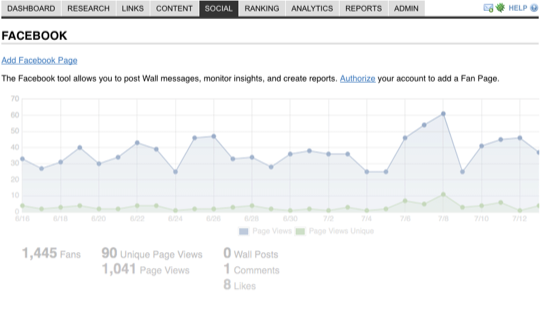

Facebook

There are a growing number of applications out there for managing your social media accounts (mainly Twitter and Facebook; this section covers Facebook). If you want the most bang for your buck, Raven offers a state-of-the-art Facebook application within its toolset.

In addition to the deep Facebook reporting Raven gives you, you can now integrate that data with Google Analytics from within Raven.

Here are some of the features offered within Raven's Facebook Tool:

- Deep Google Analytics integration

- White label reporting of Facebook metrics

- Automatic wall post scheduling

- Fan tracking, customizable by date range

- Monitor posts, comments, and likes

What I really like about the Facebook tool in Raven is that you can sync up your analytics information and truly get a handle on what's working and what's not over defined periods of time.

I'm a big fan of the integration here because you are likely going to be using Twitter, Facebook, or both in your internet marketing campaigns. Having this data integrated in one place, along with the deep metrics the tools provide, amounts to a set of game-changing features for Facebook campaign management.

Sometimes with all-in-one toolsets, features like this get added in a watered-down form. That's not the case here; it's one of the stronger Facebook management tools out there. If you are going to allocate resources to search and social, you need a way to accurately track the ROI of your campaigns, and that's exactly what you get with this tool.

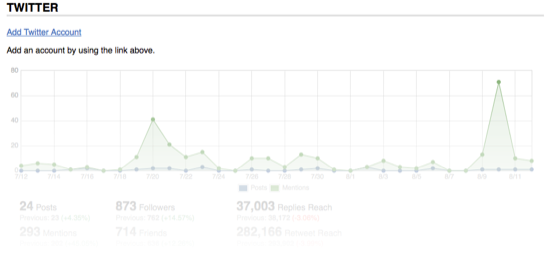

Twitter

Social media campaigns can be tough to quantify in terms of ROI and overall effectiveness. Much like the Facebook tool, Raven's tool for Twitter users is a real gem.

Raven's Twitter Tool

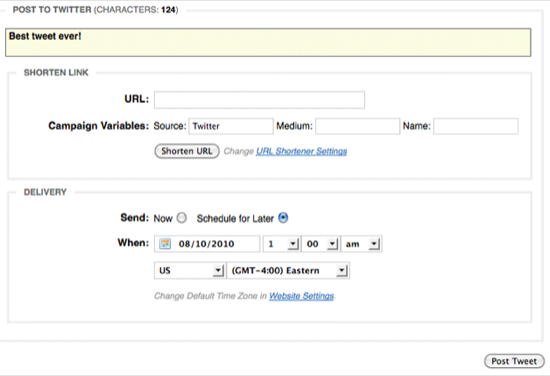

Within the Twitter tool you can post a new tweet right away or schedule it for later, use one of four URL shortener services (bit.ly, is.gd, j.mp, and tinyurl), and set custom Google Analytics campaign variables. Raven also gives you the ability to work with the bit.ly and j.mp APIs.
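The Google Analytics campaign variables are the standard `utm_source`, `utm_medium`, and `utm_campaign` query parameters (plus the optional `utm_term` and `utm_content`) appended to the link before it's shortened. Here's a small Python sketch of building such a URL by hand; the `add_campaign_params` helper and the example values are hypothetical, and Raven fills these in for you through its interface.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_campaign_params(url, source, medium, campaign, term=None, content=None):
    """Append Google Analytics campaign (utm_*) parameters to a URL.

    Any query parameters already on the URL are kept, in order, before the
    campaign parameters.
    """
    params = [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    if term:
        params.append(("utm_term", term))
    if content:
        params.append(("utm_content", content))
    scheme, netloc, path, query, fragment = urlsplit(url)
    merged = parse_qsl(query, keep_blank_values=True) + params
    return urlunsplit((scheme, netloc, path, urlencode(merged), fragment))


if __name__ == "__main__":
    print(add_campaign_params("http://www.example.com/quotes", "twitter", "social", "spring_promo"))
```

The tagged URL is what you'd then shorten, so clicks from the tweet show up in Google Analytics under that source, medium, and campaign.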

Monitor Twitter Activity and Engagement

If you are allocating resources to Twitter, or being paid by a company to run their Twitter account, then you'll want the ability to see some pretty juicy stats related to your Twitter campaign. With Raven's new Twitter tool you'll be able to see the following:

- Posts

- Followers

- Friends

- Friend to Follower Ratio

- Mentions

- Google Analytics referral data

- Reply and Retweet reach (a great way to see how many people are seeing the message)

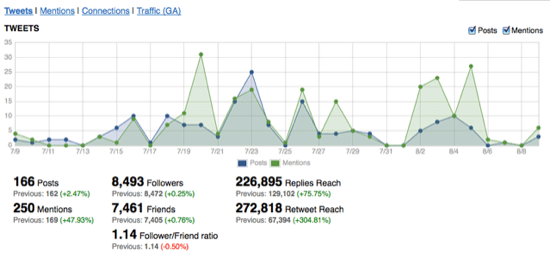

Here's a screenshot of the statistical overlay:

What's really nice about this is the date range comparisons. Managing this data mostly in one place is a huge time-saver, and you can truly get a handle on what's working, what's not, and why. The level of detail and integration is really unique to Raven's suite of tools.

Monitor Tweets Related to Your Account

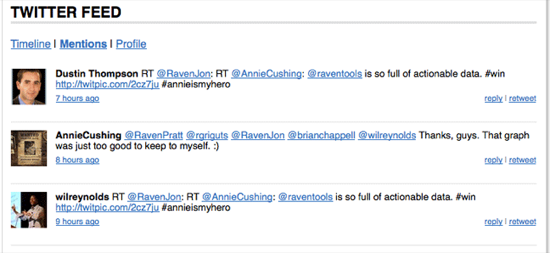

In addition to viewing tweets from your public timeline you can also see all mentions associated with your account, as well as tweets posted from your account:

A great feature here is that if there is a thread associated with a tweet you can click on the "view thread" link and see the entire thread from within the Twitter tool.

You can also access this via Raven's slick iPhone/iPad app.

Campaign Reporting

Much like the link tools are worth the full subscription for me, if you have a need for custom reporting then Raven's Campaign Reporting features are probably worth the price of admission for you.

In lockstep with their other tools, the Campaign Reporting feature set is super easy to use:

You can quickly create white-labeled, customized reports for the following modules within Raven:

- Link Building

- Twitter

- Rankings

- Facebook

- Keyword Research

- Competitor Research

- Social Media Monitoring (track mentions of your brand and/or keywords related to your service. It also allows you to manage overall sentiment and track daily buzz)

- Google Analytics

The reporting options include the ability for you to use customized descriptions to explain different parts of the report, summary pages for different sections, and Raven will even generate a table of contents for you.

Brand Templates

Here you can quickly create a completely customized brand template for your reports; just click New Brand Template on the campaign home screen.

Give the template a name:

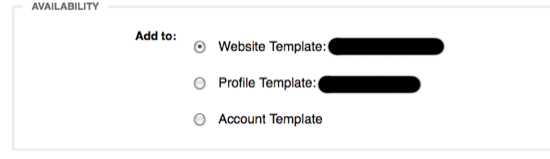

Assign it to a website, a profile or an account:

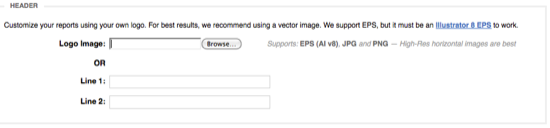

Pick a custom logo or text header:

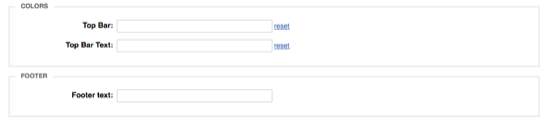

Customize the colors and the footer text

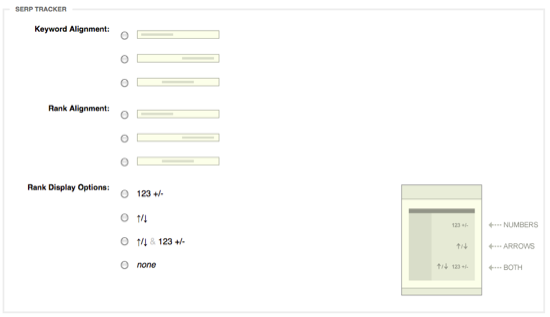

Customize the appearance of your ranking results (keyword and rank alignment, numbers/+/-/arrows)

Report Templates

Report Templates allow you to configure specific aspects of each report, saving you from having to create them over and over again for each client or each report:

Similar to a Brand Template, you start by clicking "New Report Template" on the Campaign Report screen. What I like about these reports is that they are fully customizable. Maybe you have clients who hire you just for keyword research, or just for links, or for both of those plus social media (and so on). With the flexibility of these templates, you can set up a custom template for just about any reporting need you may come across.

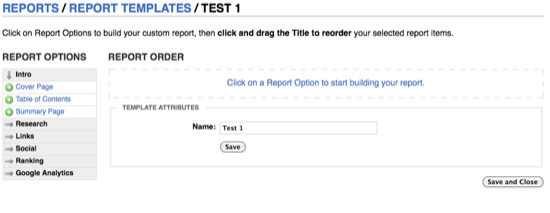

So name your report (I did Test 1) and you'll see the creation options on the left side:

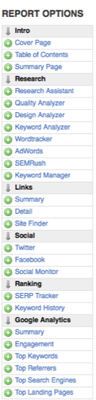

To give you an idea of how deep your customization and reporting options are, here is that left bar fully extended:

Every single one of those tabs is a customizable report :) Just click on the ones you want, and they are added to the report template.

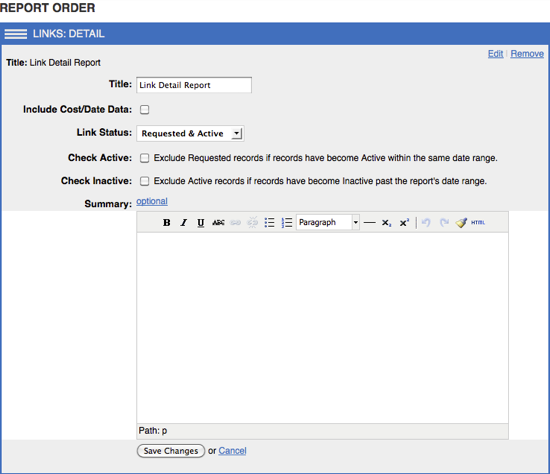

Customizing Reporting Fields

When you add the fields to a template, or when you are creating the report, you can expand each section and customize it (the summary page and title are report-wide options, but each section has other options depending on what you are reporting on). Here are the customization options you get with the link detail module:

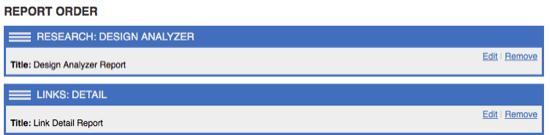

Once you add more than one, you can collapse them and reorder them in a drag and drop fashion:

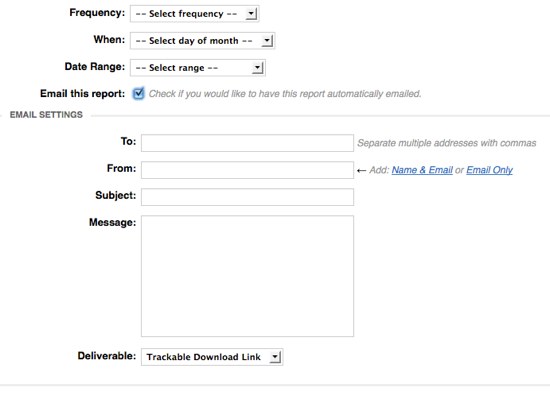

Scheduling and Auto Delivery

Maybe you want to auto-deliver reports to employees for further customization or presentation work, or maybe you want to set and forget the delivery of reports to your clients. You can send reports as attached PDFs or as trackable download links.

You can schedule daily, weekly, monthly, or quarterly reports, select a day between 1 and 28, and define a custom date range.
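Capping the day at 28 is presumably there because every month, February included, has at least 28 days, so a monthly report pinned to that day always has a valid delivery date. This small Python sketch shows the idea for the monthly case; the `next_monthly_run` function is a hypothetical illustration, not Raven's scheduler.

```python
from datetime import date


def next_monthly_run(today, day_of_month):
    """Return the next date on or after `today` that falls on `day_of_month`.

    day_of_month is limited to 1-28 so the date exists in every month.
    """
    if not 1 <= day_of_month <= 28:
        raise ValueError("day_of_month must be between 1 and 28")
    if today.day <= day_of_month:
        # Still ahead (or today) this month; replace() is safe because day <= 28
        return today.replace(day=day_of_month)
    # Otherwise roll over to the same day next month
    year, month = (today.year + 1, 1) if today.month == 12 else (today.year, today.month + 1)
    return date(year, month, day_of_month)


if __name__ == "__main__":
    print(next_monthly_run(date(2011, 1, 30), 28))
    print(next_monthly_run(date(2011, 12, 15), 10))
```

Allowing a day like 31 would force a rule for short months (skip the month, or fall back to its last day), which the 1-28 limit avoids entirely.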

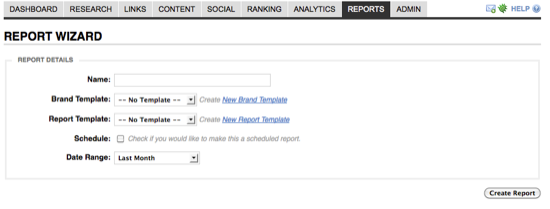

Create the Report

It's really easy to create a detailed, customized report within Raven. Name your report, select your brand and report templates, set your scheduling and delivery options, and create! It is really that simple. As mentioned in the Report Templates section, you can add, customize, and arrange all those reporting areas to suit your reporting needs.

Additional Features

While I focused on the key areas that sold me on Raven, I also use their other tools. In addition to the tools mentioned above, Raven's toolset also includes:

- Blog Manager - manage unlimited WordPress blogs (or any blog that supports XML-RPC)

- Competitor Manager - track competitors and see key metrics like PageRank, pages in Google's index, and links.

- Contact Manager - this is where Raven stores (via this feature and via the Link Manager) contact information (mailing address, email, phone number, username, company, etc) which you can assign to different links, websites, and tasks

- Content Manager - a place where you can manage articles, website content, and posts. You can use keyword analyzer features to check frequency, density, and relevance. You can also list where the article or post was used (quite handy for link building campaigns)

- Design Analyzer - what I really like about this tool is the ability to view your website as it would appear in the Lynx text browser

- Event Manager - similar to GA annotations, the event manager can help you track any type of event related to your site. You can even include these in your reports, which is great for in-house record-keeping and/or client reports.

- Firefox Toolbar - a killer link building assistant as discussed in the link section of this review. You can easily switch between your site profiles in the toolbar, use the analyzer features, and use logins for different social media personas.

- Keyword Manager - a place to store potential and active keywords. A handy tagging system can be used to group keywords and you can add them to your rank tracker in one click.

- Persona Manager - store multiple social network profiles and logins. In addition, you can also share these with staff members. This functionality is also available in the Toolbar.

- Quality Analyzer - you can use this in your Raven account and from the Toolbar (which is a nice feature when scouring the web for links). It measures the site's indexed page count in Google and Yahoo, links from Yahoo, .edu links, .gov links, domain age, domain expiration, Google PageRank, Alexa Traffic Rank, and whether or not the site is in DMOZ. It assigns a numerical score based on this data.

- Research Assistant - enter a domain to see data on the site's paid keywords, organic keywords, and competitors for both. You can one-click add a keyword or a competing URL to the keyword/competitor manager or to your SERP tracker (rank checker). Enter a keyword to see matching and related keywords with data from SEM Rush, Google, and Wordtracker. View a page to see semantic data powered by OpenCalais.Com and keywords (related to the page's content) from AlchemyAPI.Com.

- SERP Tracker - Raven's rank checker, runs once per week automatically, has historical chart and data viewing capabilities, and supports a bunch of international versions of Google, Yahoo, and Bing.

- Google Analytics Integration - tie in your Google Analytics account for easy viewing and slick reporting.

- Social Media - in addition to Facebook and Twitter, Raven also offers brand/keyword monitoring and integration with KnowEm and Omgili.

- Website Directory - records of all the websites used in your campaign with filtering options to sort out different site and link types.

- iPhone and iPad apps

Give Raven a Try

Raven's integration is slick and powerful:

- Google, SEM Rush, and Wordtracker for keyword research

- Majestic SEO & SeoMoz for link building and research

- Google Analytics integration

- Twitter & Facebook integration with lots of engagement goodies

Raven currently offers a free 30-day trial, no credit card required, on all its plans. The combination of SEO tools, link building tools, social media integration, and custom reporting options was a strong selling point for me, especially at Raven's price points. I think you can also see the significant time-saving benefits Raven provides, especially in the reporting module.

There isn't much to lose with a free 30-day trial that doesn't require any payment information, so give Raven's SEO Tools a try.

Pricing and Free Trial Info