Local is Huge

Google's US ad revenue is roughly $15 billion, and the US Yellow Pages market is roughly $14 billion. Most of that Yellow Pages money is still in print, but the shift online is only accelerating with Google's push into local.

Further, cell phones are location aware and can incorporate location into search suggest. On the last quarterly conference call, Google's Jonathan Rosenberg highlighted that mobile ads were already a billion dollar market for Google.

Google has been working on localization for years as a top priority. When asked "Anything you’ve focused on more recently than freshness?" Amit Singhal stated:

Localization. We were not local enough in multiple countries, especially in countries where there are multiple languages or in countries whose language is the same as the majority country.

So in Austria, where they speak German, they were getting many more German results because the German Web is bigger, the German linkage is bigger. Or in the U.K., they were getting American results, or in India or New Zealand. So we built a team around it and we have made great strides in localization. And we have had a lot of success internationally.

The Big Shift

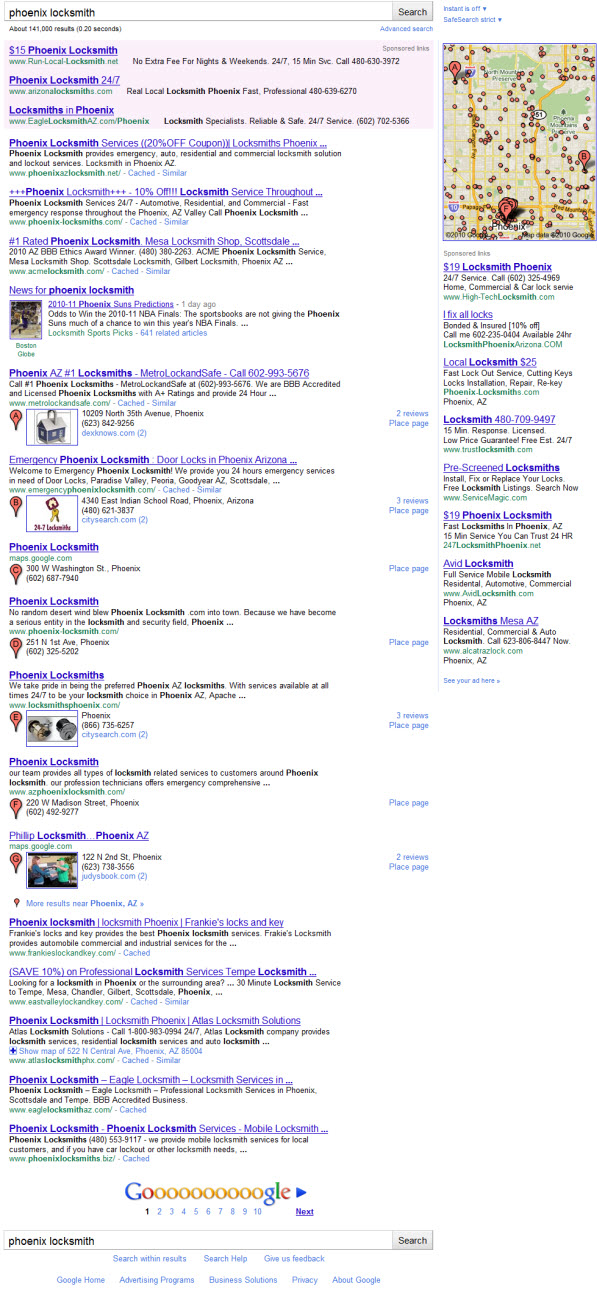

I have been saving some notes on the push toward local for a while now, and with Google's launch of the new localized search results it is about time to do an overview. First, here is Google's official announcement, along with some great reviews from many top blogs.

Some of the localized results not only appear for things like Chicago pizza but also for single word searches in some cases, like pizza or flowers.

Promoting local businesses via the new formats has many strategic business benefits for Google:

- assuming they track user interactions, then eventually the relevancy is better for the end users

- allows local businesses to begin to see more value from search, so they are more likely to invest into a search strategy

- creates a direct relationship with business owners which can later be leveraged (in the past Google has marketed AdWords coupons to Google Analytics users)

- if a nationwide brand can't dominate everywhere just because they are the brand, it means that they will have to pony up on the AdWords front if they want to keep 100% exposure

- if Google manages to put more diversity into the local results then they can put more weight on domain authority on the global results (for instance, they have: looked at query chains, recommended brands in the search results, shown many results from the lead brand on a branded search query, listed the official site for searches for a brand + a location where that brand has no office, etc.)

- it puts eye candy in the right rail that can make searchers more inclined to look over there

- it makes SEO more complex & expensive

- it allows Google to begin monetizing the organic results (rather than hiding them)

- it puts in place an infrastructure which can be used in other markets outside of local

Data Data Data

Off the start it is hard to know what to make of this unless one draws historical parallels. At first one might be inclined to say the yellow page directories are screwed, but the transition could be a bit more subtle. The important thing to remember is that now that the results are in place, Google can test and collect data.

More data sources are typically better than better algorithms, and Google has highlighted that one of their richest sources of data is tracking searcher behavior on their own websites.

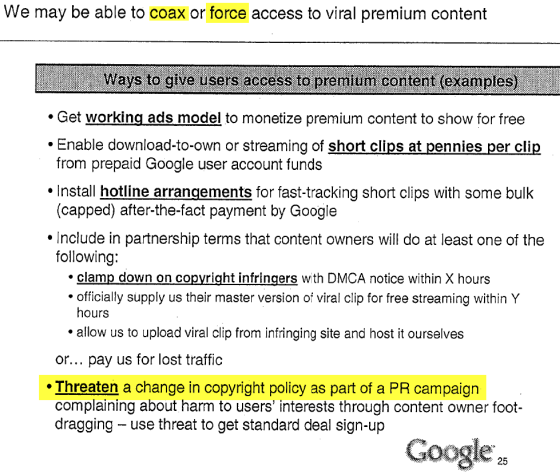

Pardon Me, While I Steal Your Lunch

There are 2 strong ways to build a competitive advantage on the data front:

- make your data better

- starve competing business models to make them worse

Off the start yellow page sites might get a fair shake, but ultimately they are headed toward being increasingly squeezed. In a mobile connected world with Google owning 97% search market share while offering localized search auto-complete, serving ads that map to physical locations, and creating a mobile coupon offers network, the yellow page companies are a man without a country. Or perhaps a country without a plot of land. ;)

They are so desperate that they are cross licensing amongst leading competitors. But that just turns their data into more of a commodity.

Last December I cringed when I read David Swanson, the CEO of R.H. Donnelley, state: "People relate to us as a product company -- the yellow-pages -- but we don't get paid by people who use the yellow-pages, we get paid by small businesses for helping them create ad messages, build websites, and show up in search engine results. ... Most of the time today, you are not even realizing that you are interacting with us."

After seeing their high level of churn & reading the above comment, I felt someone should have sent him the memo about the fate of thin affiliates on AdWords. Not to worry, truth would come out in time. ;)

Making things worse, not only is local heavily integrated into core search, with search suggest being localized, but Google is also dialing for dollars by offering flat rate map ads (with a free trial) and is again testing fully automated flat rate local AdWords ads.

Basic Economics

How does a business maximize yield? Externalize costs & internalize profits. Pretty straightforward. To do this effectively, Google wants to cut as many middle men out of the game as possible. This means Google might decide to feed off your data while driving no traffic to your business, instead driving you into bankruptcy.

Ultimately, what is being commoditized? Labor. More specifically:

- the affiliate who took the risk to connect keywords and products

- the labor that went into collecting & verifying local data

- the labor that went into creating the editorial content on the web graph and the links which search engines rely on as their backbone

- the labor that went into manually creating local AdWords accounts, tracking their results, & optimizing them (which Google tracks & uses as the basis for their automated campaigns)

- the labor that went into structuring content with the likes of micro-formats

- the labor that went into policing and formatting user reviews

- many other pieces of labor that the above labor ties into

Of course Google squirms out of any complaints by highlighting the seedy ends of the market and/or by highlighting how they only use such data "in aggregate" ... but if you are the one losing your job & having your labor used against you, "the aggregate" still blows as an excuse.

But if Google drives a business they are relying on into bankruptcy, won't that make their own search results worse?

Nope.

For 2 big reasons:

- you are only judged on your *relative* performance against existing competitors

- after Google drives some other players out of the marketplace and/or makes their data sets less complete, the end result is Google having the direct relationships with the advertisers and the most complete data set

The reason many Google changes come with limited monetization off the start is so that people won't question their motives.

Basically I think they look at it this way: "We don't care if we kill off a signal of relevancy because we will always be able to create more. If we poison the well for everyone else while giving ourselves a unique competitive advantage it is a double win. It is just like the murky gray area book deal which makes startup innovation prohibitively expensive while locking in a lasting competitive advantage for Google."

You would never hear Google state that sort of stuff publicly, but when you look at their private internal slides you see those sorts of thoughts are part of their strategy.

What is Spam?

The real Google guidelines should read something like this:

Fundamentally, the way to think about Google's perception of spam is that if Google can offer a similar quality service without much cost & without much effort then your site is spam.

Google doesn't come right out and say that (for anti-trust reasons), but they have mentioned the problem of search results in search results. And their remote rater documents did state this:

After typing a query, the search engine user sees a result page. You can think of the results on the result page as a list. Sometimes, the best results for "queries that ask for a list" are the best individual examples from that list. The page of search results itself is a nice list for the user.

...But This is Only Local...

After reading the above some SEOs might breathe a sigh of relief, thinking "well at least this is only local."

To me that mindset is folly though.

Think back to the unveiling of Universal search. At first it was a limited beta test with some news sites, then Google bought YouTube, and then the search landscape changed...everyone wanted videos and all the other stuff all the time. :D

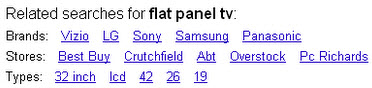

Anyone who thinks this rich content SERP which promotes Google is only about local is going to be sorely disappointed as it moves to:

- travel search (Google doesn't need to sell airline tickets so long as they can show you who is cheapest & then book you on a high margin hotel)

- any form of paid media (ebooks, music, magazines, newspapers, videos, anything taking micro-payments)

- real estate

- large lead generation markets (like insurance, mortgage, credit cards, .edu)

- ecommerce search

- perhaps eventually even markets like live ticketing for events

Google does query classification and can shape search traffic in ways that most people do not understand. If enough publishers provide the same sorts of data and use the same types of tags, they are creating new sets of navigation for Google to offer end users.

No need to navigate through a publisher's website until *after* you have passed the click toll booth.

Try #3 at Reviews

Google SearchWiki failed in large part because it confused users. Google launched SideWiki about a year ago, but my guess is it isn't faring much better. When SideWiki launched, Danny Sullivan wrote:

Sidewiki feels like another swing at something Google seems to desperately desires — a community of experts offering high quality comments. Google says that’s something that its cofounders Larry Page and Sergey Brin wanted more than a system for ranking web pages. They really wanted a system to annotate pages across the web.

The only way they are going to get that critical mass is by putting that stuff right in the search results. It starts with local (& scrape + mash in other areas like ecommerce), but you know what they want & they are nothing if not determined to get what they want! ;)

Long Term Implications

Scrape / mash / redirect may be within the legal limits of fair use, but it falls short in spirit. At some point publishers who recognize what is going on will align with better partners. We are already seeing an angry reaction to Google from within the travel vertical and from companies in the TV market.

Ultimately it is webmasters, web designers & web developers who market and promote search engines. If at some point it becomes consensus that Google is capturing more value than they create, or that perhaps Google search results have too much miscellaneous junk in them, they could push a lot more searchers over to search services which are more minimalistic + publisher friendly. Blekko launches Monday, and their approach to search is much like Google's early approach was. :)