Economics Drive Literally Everything

Media is all about profit margins. eHow was originally founded in 1998 & had $36 million in venture capital behind it. But the original cost structure was flawed due to high content costs. The site failed so badly that it was sold in 2004 for $100,000. The original site owners had Googlebot blocked. Simply by unblocking Googlebot and doing basic SEO on existing content, the new owners got the site to a revenue run rate of $4 million within 2 years, which allowed the site to be flipped for a 400-fold profit.
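The article does not say how eHow was blocking Googlebot. A robots.txt rule is one common way to do it, and that is what this minimal sketch assumes. It uses Python's standard `urllib.robotparser` to test whether a given robots.txt would let Googlebot fetch a page; the rule sets and the example.com URL are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt text lets Googlebot fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical rule set that shuts Googlebot out of the whole site.
BLOCKING_RULES = """User-agent: Googlebot
Disallow: /
"""

# Hypothetical rule set that lets every crawler in (empty Disallow).
OPEN_RULES = """User-agent: *
Disallow:
"""

if __name__ == "__main__":
    page = "http://www.example.com/how-to-do-something"
    print("blocking rules:", googlebot_allowed(BLOCKING_RULES, page))
    print("open rules:", googlebot_allowed(OPEN_RULES, page))
```

Running a check like this against a site you are thinking of buying is a cheap way to spot the kind of self-inflicted block described above.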

Demand Media bought it in 2006 and has pushed to scale it while making cheaper and lower quality content. Demand Media has since gone public with a $1.5+ billion valuation based largely on eHow. Just prior to the DMD IPO Google's Matt Cutts wrote a warning about content mills on the official Google blog.

Seeing Value Where Others do Not

People are arguing over whether Demand Media is overvalued, and at one point the NYT was debating a deal to roll About.com into Demand & own 49% of the combined company. But the salient point to me is that we are talking about something that was bought for only $100,000 7 years ago. Sure, the opportunities today may be smaller scale and different, but if you see value where others do not then recycling something that has been discarded can be quite valuable.

In this 6-page article about the eHow way, page 6 has a recycling tip any SEO can use with the help of some OCR software:

The key, he said, was to keep costs low. If possible, don't pay for the intellectual content. Look for material, he urged, on which the copyright has expired. Any book published in the U.S. before 1923 was available.

He said he was in the process of turning vanloads of old books into websites. With a few hours of labor, for example, you could take a turn-of-the-century Creole cookbook and transform it into the definitive site for vintage Creole recipes. Google's AdSense program would then load the thing up with ads for shrimp and cooking pots and spices and direct people looking for shrimp recipes to your website.

As a spin on the above, LoveToKnow has published a 1911 encyclopedia online. And ArticlesBase (an article farm which built up its popularity by linking to contributor sites) now slaps nofollow on all outbound links & is pulling in a cool $500k per month!
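To see whether a site nofollows its outbound links, you can audit the `rel` attribute on every external anchor. This is a rough sketch using Python's standard `html.parser`. The sample markup and the example.com hosts are invented for illustration and are not taken from ArticlesBase.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class OutboundLinkAudit(HTMLParser):
    """Collect (href, is_nofollow) for every link pointing off-site."""

    def __init__(self, site_host: str):
        super().__init__()
        self.site_host = site_host.lower()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href") or ""
        host = urlparse(href).netloc.lower()
        # Skip relative links and links that stay on the same host.
        if not host or host == self.site_host:
            return
        # rel can hold several space-separated tokens, e.g. "external nofollow".
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


def audit(html: str, site_host: str):
    parser = OutboundLinkAudit(site_host)
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical article page with one internal and two outbound links.
SAMPLE = """
<p>Written by <a href="http://author.example.org/" rel="external nofollow">the author</a>.
See <a href="/about">about us</a> or
<a href="http://other.example.net/page">this resource</a>.</p>
"""

if __name__ == "__main__":
    for href, nofollowed in audit(SAMPLE, "example.com"):
        print(href, "nofollow" if nofollowed else "followed")
```

A page where every outbound link comes back nofollowed is passing no link credit to the contributors who supplied its content.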

How did ArticlesBase grow to that size? It and Ezinearticles were a couple of the "selected few" which lasted through the last major burn down of article directories about 3 or 4 years ago. But it seems their model has peaked after this last Google update.

Search is Political

Content farms are proving to be a political issue in search. They are beginning to replace "the evil SEO" in the eye of the press as "what creates spam." Rich Skrenta created a spam clock which stated that a million spam pages are created every hour. He then followed up by banning 20 content farms from the Blekko search results & burning spam man. ;)

Microsoft also got in on the bashing, with Harry Shum highlighting that Google was funding web pollution. When Blekko's model is based on claiming Google polluted the web with crap, Microsoft says the same thing, and there is a rash of end user complaints, there are few independent experts the media can call upon to talk about Google - unless they decide to talk to SEOs, who tend to be quite happy to highlight Google's embarrassing hypocrisy. Freelance writers may claim that marketing is what screwed up the web, but ultimately Google has nobody credible and well known to defend them at this point. The only people who can defend Google's approach are those who have a taste of the revenue stream. Hence why Google had to act against content farms.

Always Be Selling

Demand Media's CEO is the consummate sales professional. When Google first warned about content farms, Mr. Rosenblatt used the above to claim that Google means "duplicate content" when they write about content farms. Then Demand quickly scrambled after they were caught publishing plagiarized content the following day. :)

Google stepping up their public relations smear campaigns against Bing and others is leaving Google looking either hypocritical or ignorant in many instances, like when a Google engineer lambasted an ad network without realizing Google was serving the scam ad.

Social Answers?

While on the content farm topic, it is worth mentioning that Answers.com was bought for $127 million & there is also a bunch of news about Ask's strategy in the Ask section near the bottom of this newsletter. On the social end of the answer farm model, Facebook was rumored to be looking into the space & Twitter bought a social answer service called Fluther. Even Groupon seems to be looking at the space. Quora is well hyped on TechCrunch, but will have a hard time expanding beyond the tech core it has developed.

High Quality Answer Communities?

At first glance StackExchange's growth looks exciting, but it has basically gone nowhere outside of the programming niche. In my opinion they are going to need to find subject matter experts to lead some of their niche sites & either pay those experts or give them equity in the sites if they want to lead in other markets. Worse yet, few people are as well educated about online schemes as programmers, so the other sites will not only lack leadership, but will also be much harder to police. Just look at the junk on Yahoo! Answers! There are Wordpress themes and open source CMS tools for QnA sites, but I would pick a tight niche early if I was going to build one as the broader sites seem to be full of spam and the niche sites struggle to cross the chasm. As of writing this, fewer than 50 Mahalo answers pages currently indexed in Google have over 100 views. It flat out failed, even with financial bribes and a PR spin man behind it.

A Warning

A Google engineer nicknamed moultano stated the following on Hacker News:

At the organizational level, Google is essentially chaos. In search quality in particular, once you've demonstrated that you can do useful stuff on your own, you're pretty much free to work on whatever you think is important. I don't think there's even a mechanism for shifting priorities like that.

We've been working on this issue for a long time, and made some progress. These efforts started long before the recent spat of news articles. I've personally been working on it for over a year. The central issue is that it's very difficult to make changes that sacrifice "on-topic-ness" for "good-ness" that don't make the results in general worse. You can expect some big changes here very shortly though.

A good example of the importance of padding out results with junk on-topic content to aid perceived relevancy can be seen by looking at the last screenshot of a search result here. Blekko banned the farms, but without them there is not much relevant content that is precisely on-topic. (In other words, content farms may be junk, but it is hard to have the same level of perceived relevancy without them).

New Signals

Google created a Chrome plugin to solicit end user feedback on content mills, but that will likely only reach tech savvy folks & the feedback is private. Google can claim to use any justification for removing sites they do not like though, just like they do with select link buying engagements. Look the other way where it is beneficial, and remove those which you personally dislike.

In a recent WSJ article Amit Singhal was quoted as saying new signals have been added to Google's relevancy algorithms:

Singhal did say that the company added numerous “signals,” or factors it would incorporate into its algorithm for ranking sites. Among those signals are “how users interact with” a site. Google has said previously that, among other things, it often measures whether users click the “back” button quickly after visiting a search result, which might indicate a lack of satisfaction with the site.

In addition, Google got feedback from the hundreds of people outside the company that it hires to regularly evaluate changes. These “human raters” are asked to look at results for certain search queries and questions such as, “Would you give your credit card number to this site?” and “Would you take medical advice for your children from those sites,” Singhal said.

Evolving the Model

One interesting way to evolve the content farm model is through the use of tight editorial focus, a core handful of strong editors, and wiki software. WikiHow was launched by a former eHow owner, and when you consider how limited their relative resources are, their traffic level and perceived editorial quality are quite high. Jack Herrick has struck how-to gold twice!

Going Political?

AOL purchased The Huffington Post for $315 million. Here are some reviews of that purchase. The following analysis is a bit rough, but I still think it is spot on - contrary to popular belief, most of Huffington Post's pageviews are still driven by their professionally sourced content.

Editors who have a distaste for pageview journalism are already quitting AOL. But if you are interested in the content farm business model, AOL's business plan was leaked publicly. Oooops. :D

Conflating Scraper Sites vs Content Farms

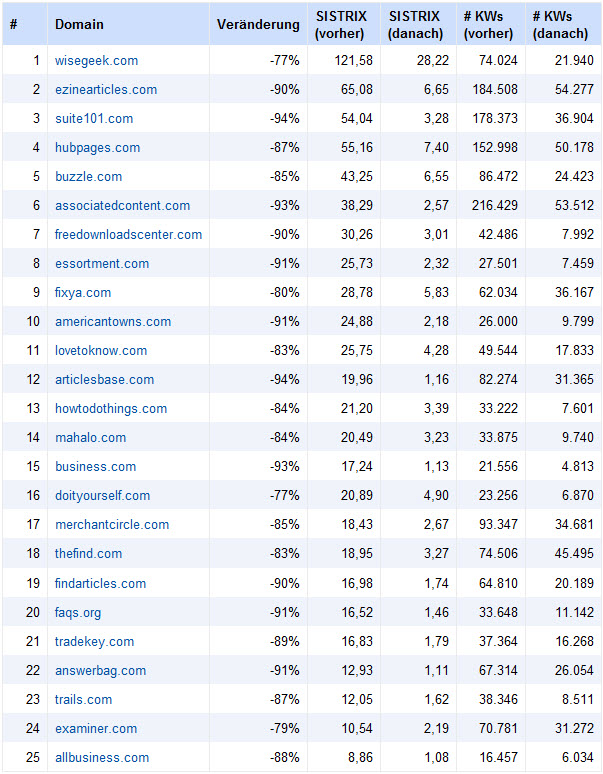

In addition to general content farms, Google is fighting a war against scraper websites. One such algorithmic update has already been done against sites repurposing content, and the content farm algorithm just recently went live & whacked a bunch of content farms. Check out the top losers from Sistrix's data.

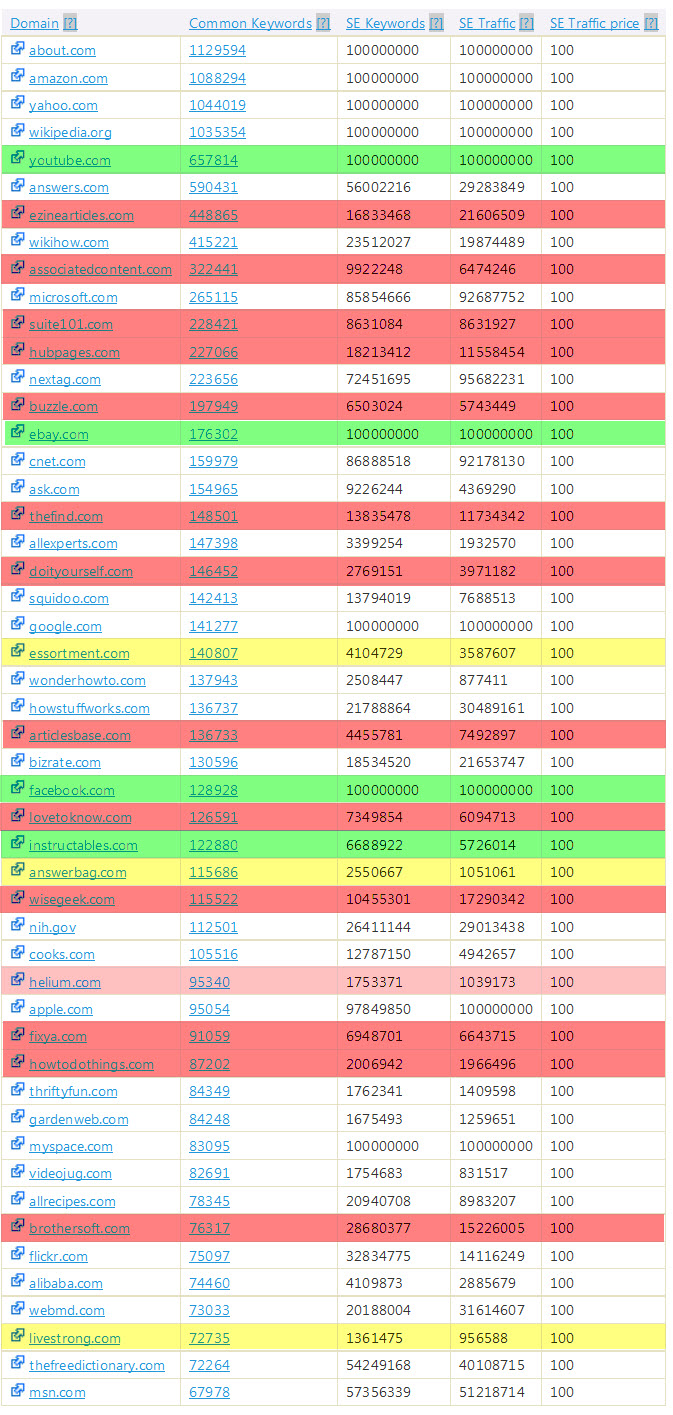

Notice any content farms missing from the above list? Maybe the biggest one? Here is a list of some of eHow's closest competing sites (based on keywords, from SEM Rush). The ones in red got pummeled, the ones in yellow dropped as well & were fellow Demand Media sites, and the ones in green gained traffic.

Getting Hammered

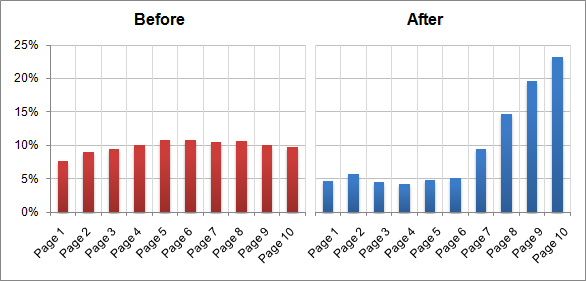

Jason "will do anything for ink" Calacanis recently gave an about face speech claiming people need to step away from the content farm business model, and in doing so admitted that roughly everything he said about Mahalo over the past couple years was a complete lie. Surprise, surprise. The interesting bit is that the start up community - which used to fawn over his huckster PR driven ploys - no longer buys them. Jason claimed to have "pivoted" his business model again, but once again we see more garbage content. His credibility has been spent. And so have his rankings! Sistrix shows that not only is he ranking for fewer keywords, but that the graph has skewed downward to worse average positions.

After the Crash, What is Next?

The biggest content farms like Ask & eHow will still do well in the short run. Over the long run I see Google bringing the results of content farms to the attention of book publishers & then working to slowly rotate out from farmed content to published book content. Most readers do not know that most book writers are lucky if they earn $10,000 writing a 300 or 400 page tome. Publishers tell book authors that with the additional exposure they can often sell lots more other things, but unless the content is highly targeted that might not back out well for the author. But that cheap content is far better structured and far more vetted than the mill stuff is.

Over the past week I have been seeing more ebooks in the search results, though I am not entirely sure if that is just because I am searching for more rare technical stuff that simply might not be online.

The Question Nobody is Asking

I highlighted Google's hypocritical position in judging intent with links while claiming they need an algorithmic approach to content farms. But nobody is thinking beyond the obvious question. Everyone wants to know who Google punished the most, but nobody is asking who gained the most from this update.

Demand Media put out a statement that their traffic profile did not change materially.

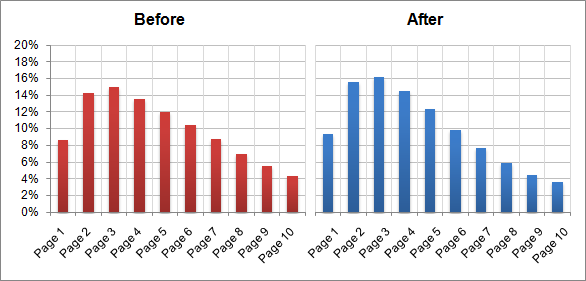

But what they didn't mention is that eHow's rankings are actually up! In fact, their new distribution chart looks just like their old one, only skewed a bit to the left with higher rankings. eHow's profile is 15% better than it was before the update & the only site which gained more traffic from this update than eHow did was Youtube.

How Did We Get Here?

People may have been sorta aware of garbage content & saw it ranking, but were apathetic about it. Most people are far more passive consumers of search than they believe themselves to be - when the default orders switch people still tend to click the top ranked result. It was only when eHow started branding itself as a cheap and disposable answer factory that people started to become outraged with their business model.

Demand Media further benefited from flagrant spammy guideline violations, like 301 redirecting expired domains into deep eHow pages. People I know who have done similar have seen their sites torched in Google. But eHow is different!

If you listen to Richard's interviews, you would never know him to be the type to redirect expired domains:

We really want to let Google speak for themselves. Whatever Matt Cutts and Google want to (say) about quality we totally support that because again that’s their corporate interest. What we said and would have said is we applaud Google removing duplicate content ... removing shallow, low quality content because it clogs the search results. Both we and Google are 100 percent focused on making the consumer happy. It’s the right thing to do and it’s good for our business.

If you syndicate Google's spin you can get away with things that a normal person can't. Which is why eHow renounced the content farm label even faster than they created it.

Article directories & topical hub sites have been online since before eHow was created. But eHow's marketing campaign was so caustic & so toxic that it literally destroyed the game for most of their competitors.

And now that Google has "fought content farms" (while managing to somehow miss eHow with TheAlgorithm) most of Demand Media's competitors are dead & Richard Rosenblatt gets to ride off into the sunset with another hundred million dollars, as eHow is the chosen one. :D

Long live the content farm!