Changing the Cost-benefit Analysis

In the last post, I mentioned how the US government tried to change the cost-benefit analysis for some sleazy executives at pharmaceutical corporations that continue to operate as criminal enterprises, simply viewing repeated fines as a calculable cost of doing business.

If you think about what Google's Panda update did, it largely changed the cost-benefit analysis of many online publishing business models. Some publishers will be frozen with fear, others will desperately throw money at folks who may or may not have solutions, and those who gained will buy additional market share for pennies on the dollar.

"We actually came up with a classifier to say, okay, IRS or Wikipedia or New York Times is over on this side, and the low-quality sites are over on this side." - Matt Cutts

Now that Google is picking winners and losers, the gap between them grows rapidly as the winners reinvest.

And that word, invest, is key to understanding the ecosystem.

Beware of Scrapers

For those who are not yet successful with search, spending a lot of money to build out a strategy looks riskier when you see companies like Demand Media, which spent hundreds of millions of dollars growing an empire, lose 40% of their market value in a couple of weeks due to a single Google update. Literally thousands of webmasters have been furiously filing DMCA reports with Google since Panda, because Google decided that content was fine quality when it sat on a scraper site, yet the exact same content lacked quality when it sat on the original source site.

And even some sites that were not hit by Panda (including some with thousands of inbound links) are still being outranked by scrapers that mirror their content. Geordie spent hours sharing tips on how to boost lifetime customer value. For his efforts, Google decided to rank a couple of scrapers as the original source & filter out PPCBlog as duplicate content, even though one of the scrapers linked to the source site.

Outstanding work Google! Killer algo :D

Even if the thinking is misguided or the headline is out of context, Reuters articles like "Is SEO DOA as a core marketing strategy?" do nothing to build the confidence needed to make large investments in the search channel. That only further aids people trying to do it on the cheap, which gets harder to do as SEO grows more complex, which only further aids the market-for-lemons effect.

Market Domination

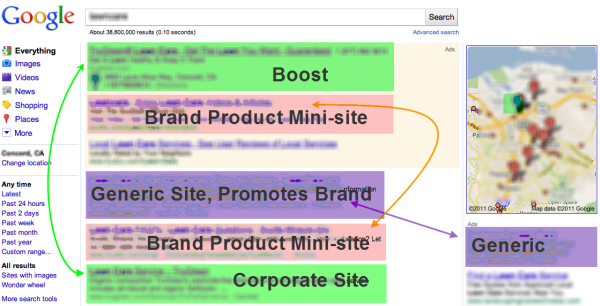

At the opposite end of the spectrum, there are currently some search results which look like this

All of the colored boxes belong to the same company. You need quite a large monitor to see any result diversity above the fold. The company that landed on the right side of the classifier can keep investing to build a nearly impenetrable moat, while those who fell behind will have a hard time justifying the investment. Who wants to scale up costs while revenues are down & the odds of success are lower? Few will. But the company with the top 3 (or top 6) results is collecting the data, refining its pitch, and reinvesting to lock down the market.

Much like the Gini coefficient shows increasing wealth consolidation in the United States, search results in which search engines choose the winners and losers create a divide where doing x will be very profitable for company A, while doing the exact same thing will be a sure money loser for company B.

Thin Arbitrary Lines in the Sand

The lines between optimization & spam blur as some trusted sites are able to rank a doorway page or a recycled tweet. Once site owners know they are trusted, you can count on them green-lighting endless content production.

Scraping the Scrape of the Scrape

Many mainstream media websites have topics subdomains where they use services like DayLife or Truveo to auto-generate a near-endless number of "content pages." To appreciate how circular it all is, consider the following:

- a reporter makes a minimally informative tweet

- Huffington Post scrapes that third-party tweet and ranks it as a page

- I write a blog post about how outrageous that Huffington Post "page" was

- SFGate.com has an auto-generated "Huffington Post" topics page (topics.sfgate.com/topics/The_Huffington_Post) which highlighted my blog post

- some of those newspaper scraper pages rank in the search results for various keywords

- sites like Mahalo scrape the scrape of the scrape

- etc.

At some point, I am pretty certain some of these loops start feeding back into themselves & create a near-infinite cycle :D

An Endless Sea of "Trustworthy" Content

The OPA mentioned a billion-dollar shift in revenues that favors large newspapers. But those "pure" old-school media sites now use services like DayLife or Truveo to auto-generate content pages. And it is fine when they do it.

...but...

The newspapers call others scammy agents of piracy and copyright violators for doing far less at a smaller scale, want to keep ranking highly (even while putting their own original content behind a paywall), and then go out and do the exact same scraping they complain about others doing. It is the tragedy of the commons played out on an infinite web where the cost of an additional page is under a cent & everyone is farming for attention.

And the slice of the pie everyone is farming for is shrinking for several reasons:

Brands Becoming the Media

Rather than subsidizing the media with ads, brands are becoming the media:

Aware that consumers spend someplace between eight and 10 hours researching cars before they contact a dealer, automakers and dealers are vectoring ever-greater portions of their marketing budgets into intercepting consumers online.

As but one example, Ford is so keen about capturing online tire-kickers that its website gives side-by-side comparisons between its Fiesta and competing brands. While you are on the Ford site, you can price the car of your dreams, investigate financing options, estimate your payment, view local dealer inventories and request a quote from a dealer.

Search Ads Replacing the Organic Search Results

AdWords is eating up more of the value chain by pushing big brands:

- comparison ads = same brands that were in AdWords appearing again

- bigger AdWords ads with more extensions = less diversity above the fold

- additional AdWords ad formats (like product ads) = less diversity (most of the advertisers who first tried product ads were big-box stores, and since they are priced on a CPA profit-share basis, the biggest brands, which typically have more pricing power with manufacturers, win)

Other search services like Ask.com and Yahoo! Search are even more aggressive with nepotistic self-promotion.

Small Businesses Walking a Tightrope (or, the Plank)

Not only are big brands being propped up with larger ad units (and algorithmically promoted in the organic search results), but the unstable nature of Google's results further favors big business at the expense of small businesses via the following:

- more verticals & more ad formats = show the same sources multiple times over

- less stability = more opportunities for spammers (they typically have high margins & lots of test projects in the works...when one site drops another one is ready to pop into the game...really easy for scrapers to do...just grab content & wait for the original source to be penalized, or scrape from a source which is already penalized)

- less stability = harder for small businesses to make payroll, so they have to fire employees

- less stability = lower multiples on site sales, making it easier for folks like WebMD, Quinstreet, BankRate, and Monster.com to buy out secondary & tertiary competing sites

If you are a small business primarily driven by organic search, you need either a big brand, a big ego, big balls, or a lack of common sense to stay in the market in the years to come, as the market keeps getting consolidated. ;)