I was recently chatting with Jonah Stein about Panda & we decided it probably made sense to do a full-on interview.

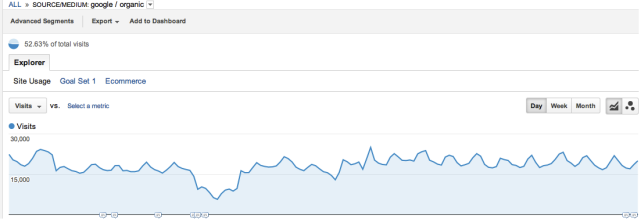

You mentioned that you had a couple customers that were hit by Panda. What sort of impact did that have on those websites?

Both of these sites saw an immediate hit of about 35% of Google traffic. Rankings dropped 3-7 spots. The traffic hit was across the board, especially in the case of GreatSchools, which saw all content types hit (school profile pages, editorial content, UGC).

GreatSchools was hit on the 4-9 (Panda 2.0) update and called out in the Sistrix analysis.

How hard has GreatSchools been hit? Sistrix data suggested that GreatSchools was losing about 56% of Google traffic. The real answer is that organic Google-referred traffic to the site fell 30% on April 11 (week over week) and overall site entries are down 16%. Total page views are down 13%. The penalty, of course, is a “site wide” penalty but not all entry page types are being affected equally.

Google suggested that there were perhaps some false positives but that they were generally pretty satisfied with the algorithms. For sites that were hit, how do clients respond to the SEOs? I mean did the SEO get a lot of the blame or did the clients get that the change was sort of a massive black swan?

I think I actually took it harder than they did. Sure, it hit their bottom line pretty hard, but it hit my ego. Getting paid is important but the real rush for me is ranking #1.

Fortunately none of my clients think they are inherently entitled to Google traffic, so I didn't get blamed. They were happy that I was on top of it (telling them before they noticed) and primarily wanted to know what Panda was about.

Once you get over the initial shock and the grieving, responding to Panda was a Rorschach test: everyone saw something different. But it is also an interesting self-reflection, especially when the initial advice coming from Greg Boser and a few others was to start to de-index content.

For clients who are not ad driven, the other interesting aspect is that generally speaking conversions were not hurt as much as traffic, so once you start focusing on the bottom line you discover the pain is a little less severe than it seemed initially.

So you mentioned that not all pages were impacted equally. I think pages where there was more competition were generally hit harder than pages that had less competition. Is that sort of in line with what you saw?

Originally I thought that was maybe the case, but as I looked at the data during the recovery process I became convinced that Panda is really the public face of a much deeper switch towards user engagement. While the Panda score is sitewide, the engagement "penalty" or weighting effect also occurs at the individual page level. The pages or content areas that were hurt less by Panda seem to be the ones that were not also being hurt by the engagement issue.

On one of my clients we moved a couple sections to sub-domains, following the HubPages example and the experience of some members of your community. The interesting thing is that we moved the blog from /blog to blog.domain.com and we moved one vertical niche from /vertical-kw to vertical-kw.example.com. The vertical almost immediately recovered to pre-panda levels while the traffic to the blog stayed flat.

So the vertical was suddenly getting 2x the traffic. On the next Panda push the vertical dropped 20% but that was still a huge improvement over before we moved to the subdomain. The blog didn't budge.

The primary domain also seemed to improve some, but it was hard to isolate that from the impact of all of the other changes, improvements and content consolidation we were doing.

After the next Panda data push did not kill the vertical subdomain, we elected to move a second one. On the next data push, everything recovered - a clean bill of health - no Pandalization at all.

but....

GreatSchools completely recovered the same day and that was November 11th, so Panda 3.0. I cannot isolate the impact of any particular change versus Google tweaking the algorithm and I think both sites were potentially edge cases for Panda anyway.

Now that we are in 3.3 or whatever the numbering calls it, I can say with confidence that moving "bad" content to a sub-domain carries the Panda score with it and you won't get any significant recovery.

You mentioned Greg Boser suggesting deindexing & doing some consolidation. Outside of canonicalization, did you test doing massive deindexing (or were subdomains your main means of testing isolation)?

We definitely collapsed a lot of content, mostly 301s but maybe 25% of it was just de-indexing. That was the first response. We took 1150 categories/keyword focused landing pages and reduced them to maybe 300. We did see some gains but nothing that resembled the huge boost when Panda was lifted.

Back to the Rorschach test: We did a lot of improvements that yielded incremental gains but were still weighed down. It reminds me of when I used to work on cars. I had this old Audi 100 that was running poorly so I did a complete tune-up, new wires, plugs, etc., but it was still running badly. Then I noticed the jet in the carburetor was misaligned. As soon as I fixed that, boom, the car was running great. Everything else we fixed may have been the right thing to do for SEO and/or users but it didn't solve the problem we were experiencing.

The other interesting thing is that I had a 3rd client who appeared to get hit by Panda or at least suffer from Panda-like symptoms after their host went down for about 9 hours. Rankings tanked across the board, and traffic was down 50% for 10 days. They fully recovered on the next Panda push. My theory is that this outage pushed their engagement metrics over the edge somehow. Of course, it may not have really been Panda at all but the ranking reports and traffic drops felt like Panda. The timing was after November 11th, so it was a more recent version of the Panda infrastructure.

Panda 1.0 was clearly a rush job and 2.0 seemed to be a response to the issues it created and the fact that Demand Media got a free pass. I think it took 6-8 months for them to really get the infrastructure robust.

My takeaways from Panda are that this is not an individual change or something with a magic bullet solution. Panda is clearly based on data about the user interacting with the SERP (Bounce, Pogo Sticking), time on site, page views, etc., but it is not something you can easily reduce to 1 number or a short set of recommendations. To address a site that has been Pandalized requires you to isolate the "best content" based on your user engagement and try to improve that.

I don't know if it was intentional or not but engagement as a relevancy factor winds up punishing sites who have built links and traffic through link bait and infographics because by definition these users have a very high bounce rate and a relatively low time on site. Look at the behavioral metrics in GA; if your content has 50% of people spending less than 10 seconds, that may be a problem or that may be normal. The key is to look below that top graph and see if you have a bell curve or if the next largest segment is the 11-30 second crowd.

I also think Panda is rewarding sites that have a diversified traffic stream. The higher the percentage of your users who are coming direct, searching for you by name (brand) or visiting you from social, the more likely Google is to see your content as high quality. Think of this from the engine's point of view instead of the site owner's. Algorithmic relevancy was enough until we all learned to game that, then came links as a vote of trust. While everyone was looking at social and talking about likes as the new links, they jumped ahead to the big data solution and baked an algorithm that tries to measure interaction of users as a whole with your site. The more time people spend on your site, the more ways they find it aside from organic search, the more they search for you by name, the more Google is confident you are a good site.

Based on that, are there some sites that you think have absolutely no chance of recovery? In some cases did getting hit by Panda cause sites to show even worse user metrics? (There was a guy named walkman on WebmasterWorld who suggested that some sites that had "size 13 shoe out of stock" might no longer rank for the head keywords but would rank for the "size 13" related queries.)

I certainly think that if you have an IYP and you have been hit with Panda you're toast unless you find a way to get huge amounts of fresh content (Yelp). I don't think the size 13 shoe site has a chance but it is not about Panda. Google is about to roll out lots of semantic search changes and the only way ecommerce sites (outside of the 10 or so brands that dominate Google Products) will have a chance is with schema.org markup and Google's next generation search. The truth is the results for a search for shoes by size are a miserable experience at the moment. I wear size 16 EEEE, so I have a certain amount of expertise on this topic. :)
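As a rough illustration of what schema.org markup for a product page might look like, here is a minimal Python sketch that builds a JSON-LD block for a single product. The function name `product_jsonld` and the sample values are hypothetical, and JSON-LD is only one of the encodings schema.org supports; nothing here reflects how any particular site in this interview was marked up.

```python
import json


def product_jsonld(name, sku, price, currency, in_stock):
    """Return a <script> tag holding schema.org Product markup as JSON-LD.

    Hypothetical helper for illustration; field choices are assumptions.
    """
    availability = (
        "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    )
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            # Price is emitted as a string with two decimals.
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }
    return (
        '<script type="application/ld+json">'
        + json.dumps(data, indent=2)
        + "</script>"
    )


if __name__ == "__main__":
    # Made-up product echoing the "size 13 shoe out of stock" example.
    print(product_jsonld("Trail Runner, size 13", "TR-13", 89.5, "USD", in_stock=False))
```

Marking availability explicitly is the kind of detail that lets an engine tell an out-of-stock size apart from one a shopper can actually buy.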

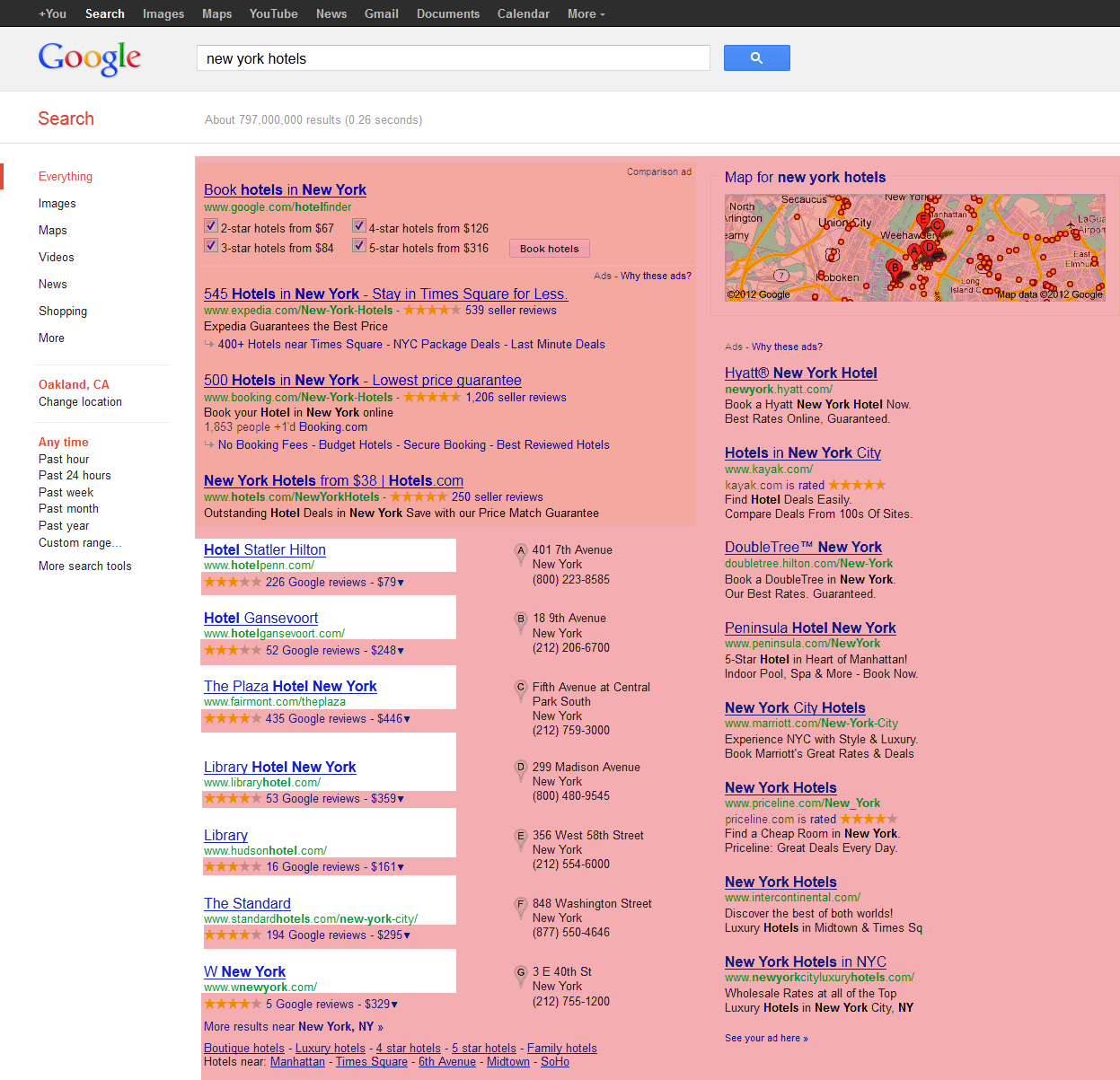

Do you see Schema as a real chance for small players? Or something that is a short term carrot before they get beat with the stick? I look at hotel search results and I fear that spreading as more people format their content in a format that is easy to scrape & displace. (For illustration purposes, in the below image, the areas in red are clicks that Google is paid for or clicks to fraternal Google pages.)

I doubt small players will be able to use Schema as a lifeline but it may keep you in the game long enough to transition into being a brand. The reason I have taken your advice about brands to heart and preach it to my clients is that it is short sighted to believe that any of the SEO niche strategies are going to survive if they are not supported with PR, social, PPC and display.

More importantly, however, is that they are going to focus on meeting the needs of the user as opposed to simply converting them during that visit. To use a baseball analogy, we have spent 15 years keeping score of home runs while the companies that are winning the game have been tracking walks, singles, doubles and outs. Schema may deliver some short term opportunities for traffic but I don't think size13shoes.com will be saved by the magic of semantic markup.

On the other hand, if I were running an ecommerce store, particularly if I was competing with Amazon, Best Buy, Walmart and the handful of giant brands that dominate the product listings in the SERP, I wouldn't bury my head in the sand and pretend that everyone else wasn't moving in that direction anyway. Maybe if you can do it right you can emerge as a winner, at least over the short and medium term.

In that sense SEO is a moving target, where "best practices" depend on the timing in the marketplace, the site you are applying the strategy to, and the cost of implementation.

Absolutely...but that is only half the story. If you are an entrepreneur who likes to build sites based on a monetization strategy, then it is a moving target where you always have to keep your eyes on the horizon. For most of my clients the name of the game is actually to focus on trying to own your keyword space and take advantage of inertia. That is to say that if you understand the keywords you want to target, develop a strategy for them and then go out and be a solid brand, you will eventually win. Most of my clients rank in the top couple of spots for the key terms for their industry with a fairly conservative slow and steady strategy, but I wouldn't accept a new client who comes to me and says they want to rank a new site #1 for credit cards or debt consolidation and they have $200,000 to spend...or even $2,000,000. We may be able to get there for the short term but not with strategies that will stand the test of time.

Of course, as I illustrated with the Nuts.com example on SearchEngineLand last month, the same strategy that works on a 14 year old domain may not be as effective for a newer site, even if you 301 that old domain. SEO is an art, not a science. As practitioners we need to constantly be following the latest developments but the real skill is in knowing when to apply them and how much; even then occasionally the results are surprising, disappointing or both.

I think there is a bit of a chicken vs egg problem there then if a company can't access a strong SEO without already having both significant capital & a bit of traction in the marketplace. As Google keeps making SEO more complex & more expensive do you think that will drive a lot of small players out of the market?

I think it has already happened. It isn't about the inability to access a strong SEO; it is that anyone with integrity is going to lay out the obstacles they face. Time and time again we see opportunity for creativity to triumph but the odds are really stacked against you if you are an underfunded retailer.

Just last year I helped a client with 450 domains who had been hit with Panda and then with a landing page penalty. It took a few months to sort out and get the reconsideration granted (by instituting cross domain rel=canonical and eliminating all the duplicate content across their network). They are gradually recovering to maybe 80% of where they were before Panda 2.0 but I can't provide them an organic link building strategy that will lift 450 niche ecommerce sites. I can't tell them how they are going to get any placement in a shrinking organic SERP dominated by Google's dogfood, shopping results from big box retailers and enormous AdWords Product Listings with images.
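To make the cross domain rel=canonical idea concrete, here is a minimal Python sketch, assuming each group of duplicate pages across a network of domains has one preferred URL listed first. The function names and the example.com style domains are hypothetical and are not taken from the client's actual setup.

```python
from html import escape


def build_canonical_map(duplicate_groups):
    """Map every URL in a duplicate group to the group's preferred URL.

    Assumption: the first URL in each group is the one that should rank.
    """
    mapping = {}
    for group in duplicate_groups:
        preferred = group[0]
        for url in group:
            mapping[url] = preferred
    return mapping


def canonical_link_tag(canonical_url):
    """Render the <link> element that belongs in the page's <head>."""
    return '<link rel="canonical" href="{}">'.format(escape(canonical_url, quote=True))


if __name__ == "__main__":
    # Made-up duplicate product pages spread over two niche domains.
    groups = [
        [
            "https://shop-a.example/widgets/blue",
            "https://shop-b.example/widgets/blue",
        ],
    ]
    canonical_for = build_canonical_map(groups)
    for page, target in canonical_for.items():
        print(page, "->", canonical_link_tag(target))
```

Every duplicate, including the preferred page itself, ends up pointing at the same URL, which is the signal that consolidates the copies onto one domain.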

From that perspective, if your funding is limited, do you think you are better off attacking a market from an editorial perspective & bolting on commerce after you build momentum (rather than starting with ecommerce and then trying to bolt on editorial)?

Absolutely. Clearly the path is to have built Pinterest, but seriously...

if you are passionate about something or have a disruptive idea you will succeed (or maybe fail), but if you think you can copy what others are doing and carve out a niche based on exploits I disagree. Of course, autoinsurancequoteeasy.com seems to be saying you can still make a ton of money in the quick flip world, but even with a big bankroll you need to be disruptive or innovative.

On the other hand, if you have some success in your niche you can use creativity to grow, but it has to be something new. Widget bait launched @oatmeal's online dating site but it is more likely to bury you now than help you rank #1, or at least prevent you from ranking on the matching anchor text.

When a company starts off small & editorially focused how do you know that it is time to scale up on monetization? Like if I had a successful 200 page site & wanted to add a 20,000 page database to it...would you advise against that, or how would you suggest doing that in a post-Panda world?

This is a tough call. I actually have a client in exactly this position. I guess it depends on the nature of the 20,000 pages. If you are running a niche directory (like my client), my advice is to add the pages to the site but noindex the individual listings until they can get some unique content. This is still likely to run afoul of the engagement issue presented by Panda, so we kept the expanded pages on geo oriented sub-domains.
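The noindex-until-unique-content rule can be sketched in a few lines of Python. This assumes each listing is a dictionary with an optional `unique_description` field and that a word count is a usable stand-in for "some unique content"; the field name, the function name and the 150 word threshold are all hypothetical.

```python
def robots_meta_tag(listing, min_unique_words=150):
    """Choose the robots meta tag for a directory listing page.

    Assumption: a listing counts as having unique content once its own
    description reaches min_unique_words words (an arbitrary threshold).
    """
    unique_words = len(listing.get("unique_description", "").split())
    if unique_words >= min_unique_words:
        return '<meta name="robots" content="index, follow">'
    # Keep thin listings out of the index but let crawlers follow their links.
    return '<meta name="robots" content="noindex, follow">'


if __name__ == "__main__":
    thin = {"name": "Acme Plumbing", "unique_description": "Plumber in Oakland."}
    rich = {"name": "Best Pipes", "unique_description": "word " * 200}
    print(robots_meta_tag(thin))
    print(robots_meta_tag(rich))
```

A listing flips to indexable on its own once someone writes enough original copy for it, so the 20,000 pages can be released gradually.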

Earlier you mentioned that Panda challenged some of your assumptions. Could you describe how it changed your views on search?

I always tell prospects that 10-15 years ago my job was to trick search engines into delivering traffic but over the last 5-6 years it has evolved and now my job is to trick clients into developing content that users want. Panda just changed the definition of "good content" from relevant, well linked content to relevant, well linked, sticky content.

It has also made me more of a believer in diversifying traffic.

Last year Google made a huge stink about MSN "stealing" results because they were sniffing traffic streams and crawling queries on Google. The truth is that Google has so many data sources and so many signals to analyze that they don't need to crawl Facebook or index links on Twitter. They know where traffic is coming from and where it is going and if you are getting traffic from social, they know it.

As Google folds more data into their mix do you worry that SEO will one day become too complex to analyze (or move the needle)? Would that push SEOs to mostly work in house at bigger companies, or would being an SEO become more akin to being a public relations & media relations expert?

I think it may already be too complex to analyze in the sense that it is almost impossible to get repeatable results for every client or tell them how much traffic they are going to achieve. On the other hand, moving the needle is still reasonably easy, as long as you are in agreement about what direction everyone is going. SEO for me is about Website Optimization, about asking everyone about the search intent of the query that brings the visitors to the site and making sure we have actions that match this intent. Most of my engagements wind up being a combination of technical SEO/problem solving, analytics, strategy and company wide or at least team wide education. All of these elements are driven by keyword research and are geared towards delivering traffic so it is an SEO based methodology, but the requirements for the job have morphed.

As for moving in house, I have been there and I doubt I will ever go back. Likewise, I am not really a PR or media relations expert but if the client doesn't have those skills in house I strongly suggest they invest in getting them.

Ironically, many companies still fail to get the basics right. They don't empower their team, they don't leverage their real world relationships and most importantly they don't invest enough in developing high quality content. Writing sales copy is not something you should outsource to college students!

It still amazes me how hard it is to get content from clients and how often this task is delegated to whoever is at the bottom of the org chart. Changing a few words on a page can pay huge dividends but the highest paid people in the room are rarely involved enough.

In the enterprise, SEO success is largely driven by getting everyone on board. Being a successful SEO consultant (as opposed to running your own sites) is actually one quarter about being a subject matter expert on everything related to Google, one quarter about social, PR, link building, conversion, etc. and half about being a project manager. You need to get buy-in from all the stakeholders, strive to educate the whole team and hit deliverables.

Given the increased complexity of SEO (in needing to understand user intent, fixing a variety of symptoms to dig to the core of a problem, understanding web analytics data, faster algorithm changes, etc.) is there still a sweet spot for independent consultants who do not want to get bogged down by those who won't fully take on their advice? And what are some of your best strategies for building buy in from various stakeholders at larger companies?

The key is to charge enough and to work on a monthly retainer instead of hourly. This sounds flippant but the bottom line is to balance how many engagements you can manage at one time versus how much you want to earn every month. You can't do justice to the needs of a client and bill hourly. That creates an artificial barrier between you and their team. All of my clients know I am always available to answer any SEO related question from anyone on the team at almost any time.

The increased complexity is really job security. Most of my clients are long term relationships and the ones I enjoy the most are more or less permanent partnerships. We have been very successful together and they value having me around for strategic advice, to keep them abreast of changes and to be available when changes happen. Both of the clients who got hit by Panda have been with me for more than four years.

No one can be an expert in everything. I definitely enjoy analytics and data but I have very strong partnerships with a few other agencies that I bring in when I need them. I am very happy with the work that AnalyticsPros has done for my clients. Likewise David Rodnitzky (PPC Associates) and I have partnered on a number of clients. Both allow me to be involved in the strategy and know that the execution will be very high quality. I only wish I had some link builders I felt as passionate about (given that Debra Mastaler is always too busy to take my clients).

You mentioned that you thought user engagement metrics were a big part of Panda based on analytics data & such...how would a person look through analytics data to uncover such trends?

I would focus on the behavioral metrics tab in GA. It is pretty normal to have a large percentage of visitors leave before 10 seconds, but after that you should see a bell curve. Low quality content will actually have 60-70% abandonment in less than 10 seconds, but the trick is that for some searches 10 seconds is a good result: weather, what is your address, hours of operation. Lots of users get what they need from searches, sometimes even from the SERP, so look for outliers. Compare different sections of your site, say the blog or those infographics & bad page types.
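For anyone who would rather work from exported visit durations than read the chart by eye, here is a minimal Python sketch of the same check. It assumes you have a list of visit durations in seconds for one section of the site; the bucket edges, function names and sample numbers are hypothetical and are not GA's own definitions.

```python
from collections import Counter

# Upper bounds (inclusive, in seconds) and labels; an assumed bucketing.
EDGES = [(10, "0-10s"), (30, "11-30s"), (60, "31-60s"), (180, "61-180s")]
LAST_LABEL = "181s+"


def bucket_label(seconds):
    """Return the label of the duration bucket a visit falls into."""
    for upper, label in EDGES:
        if seconds <= upper:
            return label
    return LAST_LABEL


def duration_shares(durations):
    """Return the share of visits in each bucket, in bucket order."""
    if not durations:
        raise ValueError("need at least one visit duration")
    counts = Counter(bucket_label(d) for d in durations)
    labels = [label for _, label in EDGES] + [LAST_LABEL]
    return {label: counts[label] / len(durations) for label in labels}


def summarize(durations):
    """Report the under-10-second share and the next largest bucket."""
    shares = duration_shares(durations)
    rest = {k: v for k, v in shares.items() if k != "0-10s"}
    return {
        "quick_exit_share": shares["0-10s"],
        "next_largest_bucket": max(rest, key=rest.get),
    }


if __name__ == "__main__":
    # Made-up samples for two sections of a site.
    sections = {
        "infographics": [2, 4, 5, 7, 9, 12, 15, 20, 25, 28],
        "guides": [3, 6, 40, 45, 50, 55, 90, 120, 35, 200],
    }
    for name, durations in sections.items():
        print(name, summarize(durations))
```

A section whose next largest bucket is 11-30 seconds is the pattern flagged earlier in this interview as a possible problem, while a hump further out looks more like the bell curve described above.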

It's hard to say until you get your hands in the data, but if you assume that individual pages can be weighed down by poor engagement and that this trend is maybe 1 year old and evolving, you can find some clues. Learn to use those advanced segments and build out meaningful segmentation on your dashboard and you will be surprised how much of this will jump out at you. It is like over-optimization: until you believed in it you never noticed & now you can spot it within a few seconds of looking at a page. I won't pretend engagement issues jump out that fast but it is possible to find them, especially if you are an in house SEO who really knows your site.

The other important consideration is that improving engagement for a given page is a win regardless of whether it impacts your rankings or your Panda situation. The mantra about doing what is right for the users, not the search engine, may sound cliche but the reality is that most of your decisions and priorities should be driven by giving the user what they want. I won't pretend that this is the short road to SERP dominance but my philosophy is to target the user with 80% of your efforts and feed the engines with the other 20.

Thanks Jonah :)

~~~~~~~~~~

Jonah Stein has 15 years of online marketing experience and is the founder of ItsTheROI, a San Francisco Search Engine Marketing Company that specializes in ROI driven SEO and PPC initiatives. Jonah has spoken at numerous industry conferences including Search Engine Strategies, Search Marketing Expo (SMX), SMX Advanced, SIIA On Demand, the Kelsey Group's Ultimate Search Workshop and LT Pact. He also developed panels on Virtual Blight for the Web 2.0 Summit and the Web 2.0 Expo. He has written for Context Web, Search Engine Land and SEO Book.

Jonah is also the cofounder of two SaaS companies: CodeGuard.com, a cloud based backup service that provides a time machine for websites, and Hubkick.com, an online collaboration and task management tool that provides a simple way for groups to work together instantly.