At the abstract level, if many people believe in something then it will grow.

The opposite is also true.

And in a limitless, virtual world, you cannot see what is not there.

The Yahoo Directory

Before I got into search, the Yahoo! Directory was so important to the field of search that there were entire sessions at SES conferences on how to get listed & people would even recommend using #1AAA-widgets.com styled domains to alphaspam listings to the top of the category.

The alphaspam technique was a carryover from yellow page directories - many of which have gone through bankruptcy as attention & advertising shifted to the web.

Go to visit the Yahoo! Directory today and you get either a server error, a security certificate warning, or a redirect to aabacosmallbusiness.com.

Poof.

It's gone.

Before the Yahoo! Directory disappeared their quality standards were vastly diminished. As a webmaster who likes to test things, I tried submitting sites of various size and quality to different places. Some sites which would get rejected by some $10 directories were approved in the Yahoo! Directory.

The Yahoo! Directory also had a somewhat weird setting where if you canceled a directory listing in the middle of the term they would often keep it listed for many years to come - for free. After many SEOs became fearful of links the directory saw vastly reduced rates of submissions & many existing listings canceled their subscriptions, thus leaving it as a service without much of a business model.

DMOZ

At one point Google's webmaster guidelines recommended submitting to DMOZ and the Yahoo! Directory, but that recommendation led to many lesser directories sprouting up & every few years Google would play a whack-a-mole game and strip PageRank or stop indexing many of them.

Many have presumed DMOZ was on its last legs many times over the past decade. But on their 18th birthday they did a spiffy new redesign.

Different sections of the site use different color coding and the design looks rather fresh and inviting.

Take a look.

However improved the design is, it is unlikely to reverse this ranking trend.

Lacking Engagement

Why did those rankings decline though? Was it because the sites suck? Or was it because the criteria to rank changed? If the sites were good for many years it is hard to believe the quality of the sites all declined drastically in parallel.

What happened is as engagement metrics started getting folded in, sites that only point you to other sites become an unneeded step in the conversion funnel, in much the same way that Google scrubbed affiliates from the AdWords ecosystem as unneeded duplication.

What is wrong with the user experience of a general web directory? There isn't any single factor, but a combination of them...

- the breadth of general directories means their depth must necessarily be limited.

- general directory category pages ranking in search results is like search results in search results. it isn't great from the user's perspective.

- if a user already knows a category well they would likely prefer to visit a destination site rather than a category page.

- if a user doesn't already know a category, then they would prefer to use an information source which prioritizes listing the best results first. the layout for most general web directories is a list of results which are typically in alphabetical order rather than displaying the best result first

- in order to sound authoritative many directories prefer to use a neutral tone

If a directory mostly links to lower quality sites Google can choose to either not index it or not trust links from it. And even if a directory generally links to trustworthy sites, Google doesn't need to rank it to extract most of the value from it.

The trend of lower traffic to the top tier general directory sites has happened across the board.

Many years ago Google's remote rater guidelines cited Joeant as a trustworthy directory.

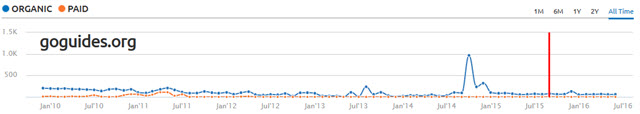

Their traffic chart looks like this.

And the same sort of trend is true for BOTW, Business.com, GoGuides.org, etc.

There is basically nothing a general web directory can do to rank well in Google on a sustainable basis, at least not in the English language.

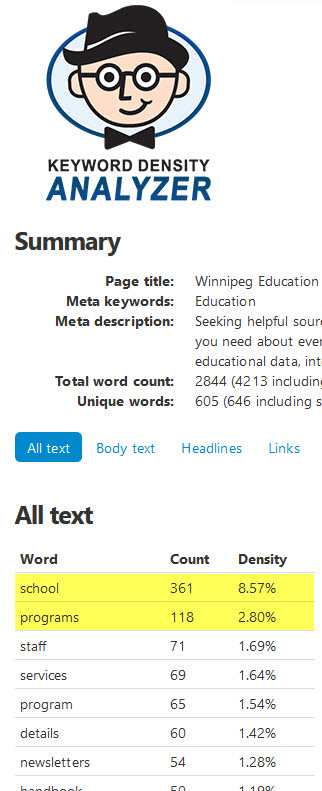

Even if you list every school in the city of Winnipeg that page can't rank if it isn't indexed & even if it is indexed it won't rank well if your site has a Panda-related ranking issue. There are a couple other issues with such a comprehensive page:

- each additional listing is more editorial content cost in terms of building the page AND maintaining the page

- the bigger the page gets the more a user needs something other than alphabetical order as a sort option

- the more listings there are in a tight category the greater the likelihood there will be excessive keyword repetition on the page, which could get the page flagged for algorithmic demotion, even if the publisher has no intent to spam. Simply listing things by their name will mean repeating a word like "school" over 100 times on the above linked Winnipeg schools page. If you don't consciously attempt to lower the count a page like that could have the term repeated over 300 times.
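To make the repetition issue concrete, here is a minimal sketch of how a publisher might count how often a term shows up on a listing page. The listing names below are hypothetical placeholders, not entries from the Winnipeg page, and the simple word tokenizer is an assumption for illustration. It is not a description of how any search engine measures repetition.

```python
import re
from collections import Counter


def term_counts(text):
    """Count case-insensitive word occurrences in a block of page text."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


# Hypothetical listing names used only for illustration.
listings = [
    "Maple Grove Elementary School",
    "Riverbend High School",
    "Northside Middle School",
]

page_text = "\n".join(listings)
counts = term_counts(page_text)

# Share of all words on the page taken up by the single term "school".
school_share = counts["school"] / sum(counts.values())

print(counts["school"], round(school_share, 2))
```

Even with only three listings the term accounts for a large share of the words on the page, and every extra listing named "... School" adds another repetition before any descriptive text is written.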

Knock On Effects

In addition to those web directories getting fewer paid submissions, most are likely seeing a rise in link removal requests. Google's "fear first" approach to relevancy has even led them to listing DMOZ as an unnatural link source in warning emails to webmasters.

What's more, many people who use automated link clean up tools take the declining traffic charts & low rankings of the sites as proof that the links lack value or quality.

That means anyone who gets hit by a penalty & ends up in warning messages not only ends up with less traffic while penalized, but they also get extra busy work to do while trying to fix whatever the core problem is.

And in many cases fixing the core problem is simply unfeasible without a business model change.

When general web directories are defunded it not only causes many of them to go away, but it also means other related sites and services disappear.

- Editors of those web directories who were paid to list quality sites for free.

- Web directory review sites.

- SEOs, internet marketers & other businesses which listed in those directories

Now perhaps general web directories no longer really add much value to the web & they are largely unneeded.

But there are other things which are disappearing in parallel which were certainly differentiated & valuable, though perhaps not profitable enough to maintain the "relevancy" footprint to compete in a brand-first search ecosystem.

Depth vs Breadth

Unless you are the default search engine (Google) or the default social network everyone is on (Facebook), you can't be all things to all people.

If you want to be differentiated in a way that turns you into a destination you can't compete on a similar feature set because it is unlikely you will be able to pay as much for traffic-driven partnerships as the biggest players can.

Can niche directories or vertical directories still rank well? Sure, why not.

Sites like Yelp & TripAdvisor have succeeded in part by adding interactive elements which turned them into sought after destinations.

Part of becoming a destination is intentionally going out of their way to *NOT* be neutral platforms. Consider how many times Yelp has been sued by businesses which claimed the sales team did or was going to manipulate the displayed reviews if the business did not buy ads. Users tend to trust those platforms precisely because other users may leave negative reviews & that (usually) offers something better than a neutral and objective editorial tone.

And that user demand for those reviews, of course, is why Google stole reviews from those sorts of sites to try to prop up the Google local places pages.

It was a point of differentiation which was strong enough that people wanted it over Google. So Google tried to neutralize the advantage.

Blogs

The above section is about general directories, but the same concept applies to almost any type of website.

Consider blogs.

A decade ago feed readers were commonplace, bloggers often cross-linked & bloggers largely drove the conversation which bubbled up through mainstream media.

Google Reader killed off RSS feed readers by creating a fast, free & ad-free competitor. Then Google abruptly shut down Google Reader.

Not only do whimsical blogs like Least Helpful or Cute Overload arbitrarily shut down, but people like Chris Pirillo who know tech well suggest blogging is (at least economically) dead.

Many of the people who are quitting are not the dumb, the lazy, and the undifferentiated. Rather many are the wise trend-aware players who are highly differentiated yet find it impossible to make the numbers work:

The conversation started when revenues were down, and I had to carry payroll for a month or two out of my personal account, which I had not had to do since shortly after we started this whole project. We tweaked some things (added an ad or two which we had stripped back for the redesign, reminded people about ad-blockers and their impact on our ability to turn a profit, etc.) and revenue went back up a bit, but for a hot minute, you’ll remember I was like: “Theoretically, if this industry went further into the ground which it most assuredly will, would we want to keep running the site as a vanity project? Probably not! We would just stop doing it.”

In the current market Google can conduct a public relations campaign on a topic like payday loans, have their PR go viral & then if you mention "oh yeah, so Google is funding the creation of doorway pages to promote payday loans" it goes absolutely nowhere, even if you do it DURING THE NEWS CYCLE.

So much of what exists is fake that anything new is evaluated from the perception of suspicion.

While the real (and important) news stories go nowhere & the PR distortions spread virally, the individual blogger ends up feeling a bit soulless if they try to make ends meet:

"The American Mama reached tens of thousands of readers monthly, and under that name I worked with hundreds of big name brands on sponsored campaigns. I am a member of virtually every ‘blog network’ and agency that “connects brands with bloggers”. ... What’s the point of having your own space to write if you’re being paid to sound like you work for a corporation? ... PR Friendly says “For the right price, I will be anyone you want me to be.” ... I’m not saying blogging is dying, but this specific little monster branch of it, sponsored content disguised as horribly written “day in the life” stories about your kids and pets? It can’t possibly last. Do you really want to be stuck on the inside when it crumbles?"

If you can't get your own site to grow enough to matter then maybe it makes sense to contribute to someone else's to get your name out there.

I recently received this unsolicited email:

"Hello! This is Theodore, a writer and chief editor at SomeSiteName.Com I noticed that you are accepting paid reviews online and you will be glad to know that now you can also publish your Sponsored content to SomeSite via me. SomeSite.Com is a leading website which deals in Technology, Social Media, Internet Stuff and Marketing. It was also tagged as Top 10 _____ websites of 2016 by [a popular magazine]. Website Stats- Alexa Rank: [below 700] Google PageRank: 6/10 Monthly Pageviews: 5+ Million Domain Authority: 85+ Price : $500 via PayPal (Once off Payment) Let me know if you are interested and want to feature your website product like nothing! This will not only increase your traffic but increase in overall SEO Score as well. Thanks"

That person was not actually a member of that site's team, but they had found a way to get their content published on it.

In part because that sort of stuff exists, Google tries to minimize the ability for reputation to flow across sites.

The large platforms are so smug, so arrogant, they actually state the following sort of crap in interviews:

"There's a space in the world for art, but that's different from trying to build products at scale. The one thing that does make me a little nervous is a lot of my designer friends are still focused building websites and I'm not sure that's a growth business anymore. If you look at people who are doing interesting work, they tend to be building inside these platforms like Facebook and finding ways to do interesting work in there. For instance, journalists. Instant Articles is a really great way for stories to be told."

Sure you can bust your ass to build up Facebook, but when their business model changes (bye social gaming companies, hello live streaming video) best of luck trying to follow them.

And if you starve during the 7 lean years before your business model is once again well aligned with Facebook, you can't go back in time to give yourself a meal to un-starve.

Content Farms

Ehow.com has removed *MILLIONS* of pages of content since getting hit by Panda. And yet their ranking chart looks like this

What is crazy is the above chart actually understates the actual declines, because the shift of search to mobile & increasing prevalence of ads in the search results means estimates of organic search traffic may be overstated significantly compared to a few years prior.

A half-decade ago a bootstrapped eHow competitor named ArticlesBase got some buzz in TechCrunch because they were making about $500,000 a month on about 20 million monthly unique visitors. That business was recently listed on Flippa. They are getting about a half-million unique monthly visitors (off about 97.5%) and about $2,000 a month in revenues (off about 99.6%).
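As a quick check on the size of those declines, the sketch below recomputes both percentages from the rounded before and after figures quoted above. The function name `percent_decline` is made up for this example, and the inputs are approximations, so the results are approximate too.

```python
def percent_decline(before, after):
    """Return the drop from `before` to `after` as a percentage of `before`."""
    return (before - after) * 100 / before


# Rounded figures quoted in the text.
visitors_drop = percent_decline(20_000_000, 500_000)
revenue_drop = percent_decline(500_000, 2_000)

print(f"visitors down {visitors_drop:.1f}%, revenue down {revenue_drop:.1f}%")
```

Revenue fell faster than traffic, which means revenue per visitor also dropped sharply over the same period.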

The negative karma with that site (in terms of ability to rank) is so bad that the site owner suggested on Flippa to publish any new content from new authors onto different websites: "its not going to get to 0 as most of the traffic is not google today, but we would suggest to push out the fresh daily incoming content to new sites - thats where the growth is."

Now a person could say "eHow deserves to die" and maybe they are right. BUT one could easily counter that point by noting...

- the public who owns the shares owns the ongoing losses & many top insiders cashed out long ago

- Google was getting a VIG on eHow on their ride up & is still collecting one on the way down (along with funding other current parallel projects from the very same people with the very same Google ad network)

- Demand Media's partner program where they syndicate eHow-like content to newspapers like USA Today keeps growing at 15% to 20% a year (similar process, author, content, business model, etc. ... only a different URL hosting the content)

- look at this and you'll see how many publishing networks are still building the same sort of content but are cross-marketing across networks of sites. What's more some of the same names are at the new plays. For example, Demand Media's founder was the chairman of an SEO firm bought by Hearst publishing & his wife is on the about us page of Evolve Media's ModernMom.com

The wrappers around the content & masthead logos change, but by and large the people and strategies don't change anywhere near as quickly.

Web Portals & News Sites

As the mainstream media gets more desperate, they are more willing to partner with the likes of Demand Media to get any revenue they can.

You see the reality of this desperation in the stock charts for newspaper companies.

Or how about this chart for Yahoo.com.

It doesn't look particularly bad, especially if you consider that Yahoo has shut down many of their vertical sites.

A flat search traffic chart misses declining publisher CPMs and the click mix shift away from organic toward paid search channels as search traffic shifts to mobile devices & Google relentlessly increases the size of the search ads. Yahoo may still rank #3 for keyword x, but if that #3 ranking is below the fold on both mobile and desktop devices they might need to rank #1 to get as much traffic as #3 got a couple years ago.

Yahoo! was once the leading search portal & now they are worth about 1/5th of LinkedIn (after backing out their equity stakes in Alibaba and Yahoo! Japan).

The chart is roughly flat, but the company is up for a fire sale because organic search results have been displaced & the value of traffic has declined quicker than Yahoo! can fire employees & none of their Hail Mary passes worked.

Ms. Mayer compared the [Polyvore] deal to Google’s acquisition of YouTube in 2006, arguing that “you can never overpay” for a company with the potential to land a huge new base of users.

...

“Her core mistake was this belief that she could reinvent Yahoo,” says a former senior executive who left the company last year. “There was an element of her being a true believer when everyone else had stopped.”

The same line of thinking was used to justify the Tumblr acquisition, which has gone nowhere fast - just like their 50+ other acquisitions.

Yahoo! shut down many verticals, fired many workers, sold off some real estate & is exploring selling their patents.

Chewing Up the Value Chain

Smaller devices that are harder to use mean the gateways have to try to add more features to maintain relevance.

As they add features, publishers get displaced:

The Web will only expand into more aspects of our lives. It will continue to change every industry, every company, and every life on the planet. The Web we build today will be the foundation for generations to come. It’s crucial we get this right. Do we want the experiences of the next billion Web users to be defined by open values of transparency and choice, or the siloed and opaque convenience of the walled garden giants dominating today?

And if converting on mobile is hard or inconvenient, many people will shift to the defaults they know & trust, thus choosing to buy on Amazon rather than a smaller ecommerce website. One of my friends who was in ecommerce for many years stated this ultimately ended up becoming the problem with his business. People would email him back and forth about the product, related questions, and basically go all the way through the sales process with getting him to answer every concern & recommend each additional related product needed, then at the end they would ask him to price match Amazon & if he couldn't they would then buy from Amazon. If he had more scale he might have been able to get a better price from suppliers and compete with Amazon on price, but his largest competitor who took out warehouse space also filed for bankruptcy because they were unable to make the interest payments on their loans.

We live in a society which over-values ease-of-use & scale while under-valuing expertise.

Look at how much consolidation there has been in the travel market since Google Flights launched & Google went pay-to-play with hotel search.

Expedia owns Travelocity & Orbitz. Priceline owns Kayak. Yahoo! Travel simply disappeared. TripAdvisor is strong, but even they were once a part of Expedia.

How different are the remaining OTAs? One could easily argue they are less differentiated from one another than this article about the history of the travel industry makes Skift from other travel-related news sites.

How many markets are strong enough to support the creation of that sort of featured editorial content?

Not many.

And most companies which can create that sort of in-depth content leverage the higher margins on shallower & cheaper content to pay for that highly differentiated featured content creation.

But if the knowledge graph and new search features are simply displacing the result set the number of people who will be able to afford creating that in-depth featured content is only further diminished.

Over 5 years ago Bing's Stefan Weitz mentioned they wanted to move search from a web of nouns to a web of verbs & to "look at the web as a digital representation of the physical world." Some platforms are more inclusive than Google is & decide to partner rather than displace, but Bing's partnership with Yelp or TripAdvisor doesn't help you if you are a direct competitor of Yelp or TripAdvisor, or if your business was heavily reliant on one of these other channels & you fall out of favor with them.

Chewing Up Real Estate

There are so many enhanced result features in the search results it is hard to even attempt to make an exhaustive list.

As search portals rush to add features they also rush to grab real estate & outright displace the concept of "10 blue links."

There has perhaps been nothing which captured the sentiment better than

The following is paraphrased, but captures the intent to displace the value chain & the role of publishers.

"the journeys of users. their desire to be taken and sort of led and encouraged to proceed, especially on mobile devices (but I wouldn't say only on mobile devices).

...

there are a lot of users who are happy to be provided with encouragement and leads to more and more interesting information and related, grouped in groups, leading lets say from food to restaurants, from restaurants to particular types of restaurants, from particular types of restaurants to locations of those types of restaurants, ordering, reservations.

I'm kind of hungry, and in a few minutes you've either ordered food or booked a table. Or I'm kind of bored, and in a few minutes you've found a book to read or a film to watch, or some other discovery you are interested in." - Andrey Lipattsev

What role do publishers have in the above process? Unpaid data sources used to train algorithms at Facebook & Google?

Individually each of these assistive search feature roll outs may sound compelling, but ultimately they defund publishing.

Not a "Google Only" Problem

People may think I am unnecessarily harsh toward Google in my views, but this sort of shift is not a Google-only thing. It is something all the large online platforms are doing. I simply give Google more coverage because they have a history of setting standards & moving the market, whereas a player like Yahoo! is acting out of desperation to simply try to stay alive. The market capitalizations of the companies reflect this.

Google & Facebook control the ecosystem. Everyone else is just following along.

"digital is eating legacy media, mobile is eating digital, and two companies, Facebook and Google, are eating mobile. ... Since 2011, desktop advertising has fallen by about 10 percent, according to Pew. Meanwhile mobile advertising has grown by a factor of 30 ... Facebook and Google, control half of net mobile ad revenue." - Derek Thompson

The same sort of behavior is happening in China, where Google & Facebook are prohibited from competing.

As publishers get displaced and defunded online platforms can literally buy the media: “There’s very little downside. Even if we lose money it won’t be material,” Alibaba's Mr. Tsai said. “But the upside [in buying SCMP] is quite interesting.”

The above quote was on Alibaba buying the newspaper of record in Hong Kong.

As bad as entire industries becoming token purchases may sound, that is the optimistic view. :D

Facebook's Instant Articles and Google's AMP make even a token purchase unnecessary: "I don't think it's any secret that you're going to see a bloodbath in the next 12 months," Vice Media's Shane Smith said, referring to digital media and broadcast TV. "Facebook has bought two-thirds of the media companies out there without spending a dime."

Those services can dictate what gets exposure, how it is monetized, and then adjust the exposure and revenue sharing over time to keep partners desperate & keep them hooked.

“If Thiel and Nick Denton were just a couple of rich guys fighting over a 1st Amendment edge case, it wouldn't be very interesting. But Silicon Valley has unprecedented, monopolistic power over the future of journalism. So much power that its moral philosophy matters.” - Nate Silver

Give them just enough (false) hope to stay partnered.

All the while track user data more granularly & run AI against it to disintermediate & devalue partners.

TV networks are aware of the risks of disintermediation and view Netflix with more suspicion than informed SEOs view Google:

for all the original shows Netflix has underwritten, it remains dependent on the very networks that fear its potential to destroy their longtime business model in the way that internet competitors undermined the newspaper and music industries. Now that so many entertainment companies see it as an existential threat, the question is whether Netflix can continue to thrive in the new TV universe that it has brought into being.

...

“ ‘Breaking Bad’ was 10 times more popular once it started streaming on Netflix.” - Michael Nathanson

...

the networks couldn’t walk away from the company either. Many of them needed licensing fees from Netflix to make up for the revenue they were losing as traditional viewership shrank.

And just like Netflix, Facebook will move into original content production.

The Wiki

Wikipedia is certainly imperfect, but it is also a large part of why other directories have gone away. It is basically a directory tied to an encyclopedia which is free and easy to syndicate.

Every large search & discovery platform has an incentive for Wikipedia to be as expansive as possible.

The bigger Wikipedia gets, the more potential answers and features can be sourced from it. More knowledge graph, more instant answers, more organic result displacement, more time on site, more ad clicks.

Even if a knowledge graph listing is wrong, the error doesn't harm the search service syndicating the content unless people make a big deal of it. But if that happens then people will give feedback on how to fix the error & that is a PR lead into the narrative of how quickly search is improving and evolving.

"Wikipedia used to instruct its authors to check if content could be dis-intermediated by a simple rewrite, as part of the criteria for whether an article should be added to wikipedia. There are many rascals on the Internets; none deserving of respect." - John Andrews

Sergey Brin donates to fund the expansion of Wikipedia. Wikipedia rewrites more webmaster content. Google has more knowledge graph grist and rich answers to further displace publishers.

I recently saw the new gray desktop search results Google is testing. When those appear the knowledge graph appears inline with the regular search results & even on my huge monitor the organic result set is below the fold.

The problem with that is if your brand name is the same brand name that is in the knowledge graph & you are not the dominant interpretation then you are below the fold on all devices for your core brand UNLESS you pay Google for every single click.

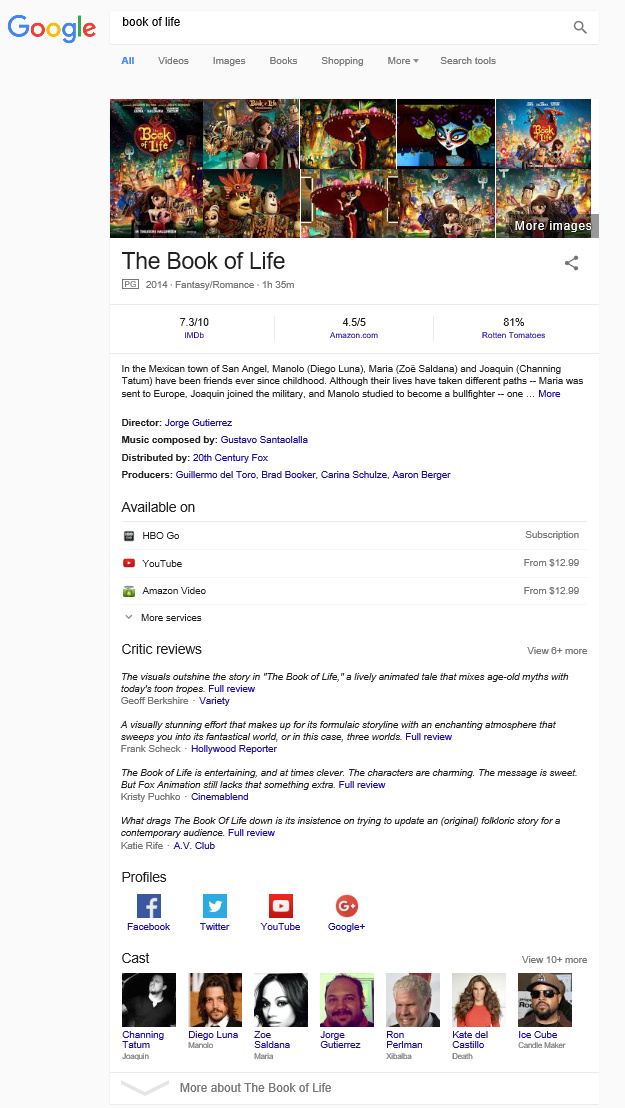

How much should a brand like The Book of Life pay Google for being a roadblock? What sort of tax is appropriate & reasonable? How high will you bid in a casino where the house readjusts the shuffle & deal order in the middle of the hand?

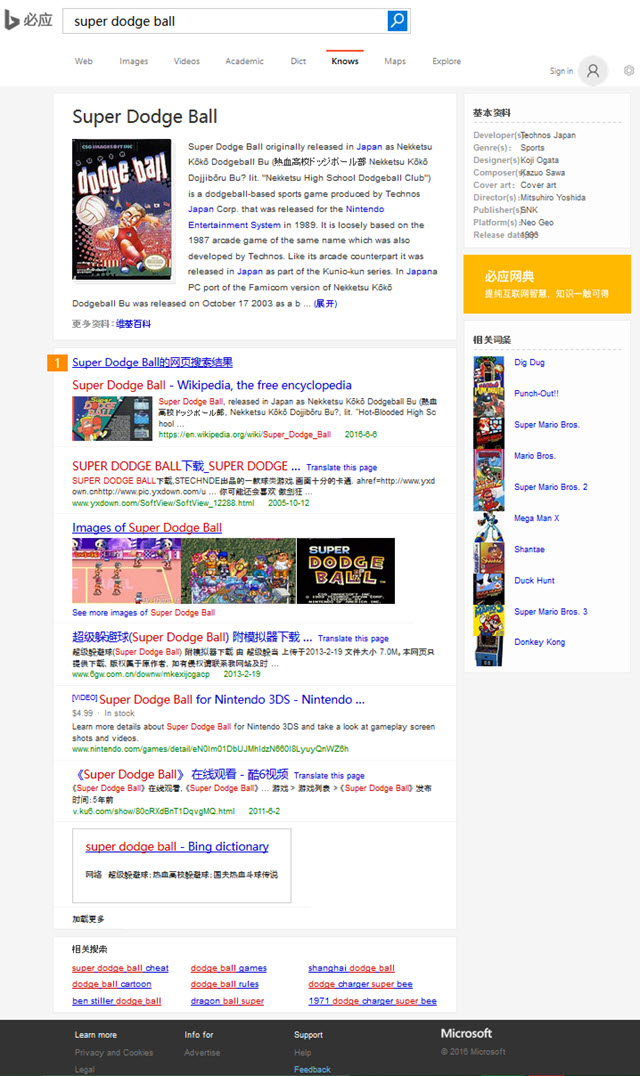

I recently did a search on Bing & inside their organic search results they linked to a Mahalo-like page called Bing Knows. I guess this is a feature in China, but it could certainly spread to other markets.

If they partnered with an eBay or Amazon.com and put a "buy now" button in the search results they'd have just about completely closed the loop there.

Broad Commodification

The reason I started this article with directories is their role is to link to sites. They are categorized collections of links which have been heavily commodified & devalued to the point they are rendered unnecessary and viewed adversely by much of the SEO market (even the ones with decent editorial standards).

Just like links got devalued, so did domain names.

And, as mentioned above in the parts about blogging, content farms, web portals & news sites ... the same trend is happening to almost every type of content.

Online ad revenues are still growing quickly, but they are not flowing through to old media & many former leading bloggers consider blogging dead.

Big platform players like Google and Facebook broaden cross-device user tracking to create new relevancy signals and extract most of the value created by publishers. The more information the platform owns the more of a starving artist the partners become.

As partners become more desperate, they overvalue growth (just like Yahoo! with Polyvore):

"It's the golden age right now," [Thrillist CEO Ben Lerer] said. "If you're a digital publisher, you have every big TV company calling you. When I look at media brands, if a media brand disappeared tomorrow, would I notice?" he said. "And there are a bunch of brands that have scale, and maybe a lot of money raised, and maybe this and that, but, actually, I might not know for a year. There's so many brands like that. Like, what does it really stand for? Why does it exist?"

Disruption is not a strategy, but the whole point of accelerating it & pushing it (without an adequate plan for "what's next") is to re-establish feudal lords.

The web is a virtual land where the commodity which matters most is attention. If you go back in time, lords maintained wealth & control through extracting rents.

A few years ago a quote like the following one may have sounded bizarre or out of place

These are the people who guard the company’s status as what ranking team head Amit Singhal often sees characterised as “the biggest kingmaker on this Earth.”

But if you view it through some historical context it isn't hard to understand

"The nobles still had the power to write the law, and in a series of moves that took place in different countries at different times, they taxed the bazaar, broke up the guilds, outlawed local currencies, and bestowed monopoly charters on their favorite merchants. ... It was never really about efficiency anyway; industrialization was about restoring the power of those at the top by minimizing the value and price of human laborers." - Douglas Rushkoff

Google funding LendUp & ranking their doorway pages while hitting the rest of the industry is Google bestowing "monopoly charters on their favorite merchants."

Headwinds

The issue is not that the value of anything drops to zero, but rather that a combined set of factors shrinks down the size of the market which can be profitably served. Each of these factors eats at margins...

- lower CPMs

- the rise of ad blockers (funded largely by some big ad networks paying to allow their own ads through while blocking competing ad networks)

- rise of programmatic ads (which shift advertiser budget away from publisher to various forms of management)

- larger ad sizes: "Based on early testing, some advertisers have reported increases in clickthrough rates of up to 20% compared to current text ads."

- increase of vertical search results in Google & more ads + self-hosted content in Facebook's feed

- shift of search audience to mobile devices which have no screen real estate for organic search results and lower cost per click (there's a reason Google AdSense is publishing tips on making more from mobile)

- increased algorithmic barrier to entry and longer delay times to rank

The least sexy consultant pitch in the world: "Sure I can probably rank your website, but it will take a year or two, cost you at least $80,000 per year, and you will still be below the fold even if we get to #1 because the paid search ads fill up the first screen of results."

That isn't going to be an appealing marketing message for a new small business with a limited budget.

The Formula

“The open web is pretty broken. ... Railroad, electricity, cable, telephone—all followed this similar pattern toward closedness and monopoly, and government regulated or not, it tends to happen because of the power of network effects and the economies of scale” - Ev Williams.

The above article profiling Ev Williams also states: "An April report from the web-analytics company Parse.ly found that Google and Facebook, just two companies, send more than 80 percent of all traffic to news sites."

The same general trend is happening to almost every form of content - video, news, social, etc.

- a big platform over-promotes a vertical to speed up buy-in (perhaps even offering above market rates or other forms of compensation to get the flywheel started)

- other sources join the market without that compensation & then the compensation stream gets yanked

- displacement of the source by a watered down copy (eHow or Wikipedia styled rewrite), or some zero-cost licensing arrangement (Facebook Instant Articles, Google AMP, syndicating Wikipedia rewrites)

- strategic defunding of the content source

- promise of future gains causing desperate publishers to lean harder into Google or Facebook even as they squeeze more water out of the rock.

Hey, sure, your traffic is declining & your revenue is declining faster. You are getting squeezed out, but if you trust the primary players responsible for the shift & rely on Instant Articles or Google's AMP, this time will be different.

...or maybe not...

Facts & Opinions

When I saw some Google shills syndicating Google's "you can't copyright facts" pitch without question I cringed, because I knew where that was immediately headed.

A year later the trend was obvious.

So now we get story pitches where the author tries to collect a few quote sources to match the narrative already in their head. Surely this has gone on for a long time, but it has rarely been so transparently obvious and cringeworthy as it is today.

And if you stray too far from facts into opinions & are successful, don't be surprised if you end up on the receiving end of proxy lawsuits:

Can we talk about how strange it is for a group of Silicon Valley startup mentors to embrace secret proxy litigation as a business tactic? To suddenly get sanctimonious about what is published on the internet and called News? To shame another internet company for not following ‘the norms’ of a legacy industry? The hypocrisy is mind bending.

The desperation is so bad that news sites don't even attempt to hide it. And part of what is driving that is bot-driven content further eroding margins on legitimate publishing. Google not only ranks those advertorials, but they also promote some of the auto-generated articles which read like:

As many as 1 analysts, the annual sales target for company name, Inc. (NYSE:ticker) stands at $45.13 and the median is $45.13 for the period closed 3.

The bearish target on sales is $45.13 and the bullish estimate is $45.13, yielding a standard deviation of 1.276%.

Not more than 1 investment entities have updated sales projections on upside over the last week while 1 have downgraded their previously provided sales targets. The estimates highlight a net change of 0% over the last 1 weeks period.

Sales estimated amount is a foremost parameter in judging a firm’s performance. Nearly 1 analysts have revised sales number on the upside in last one month and 1 have lowered their targets. It demonstrates a net cumulative change of 0% in targets against sales forecasts which were given a month ago.

In latest quarterly period, 1 have revised targeted sales on upside and 1 have decreased their projections. It demonstrates change of 4.898%.

I changed a few words in each sentence of that quote to make it harder to find the source as I wasn't trying to out them specifically. But the auto-generated content was ranked by Google & monetized via inline Google AdSense ads promoting the best marijuana stocks to invest in and warning of a pending 80% stock market crash coming soon this year.

Hey at least it isn't a TOTALLY fake story!

Publishers get the message loud and clear. Tronc wants to ramp up on AI driven video content at scale:

"There's all these really new, fun features we're going to be able to do with artificial intelligence and content to make videos faster," Ferro told interviewer Andrew Ross Sorkin. "Right now, we're doing a couple hundred videos a day; we think we should be doing 2,000 videos a day."

All is well, news & information are just externalities to a search engine ad network.

No big deal.

"With newspapers dying, I worry about the future of the republic. We don’t know yet what’s going to replace them, but we do already know it’s going to be bad." - Charlie Munger

Build a Brand

Build a brand, that way you are protected from the rapacious tech platforms.

Or so the thinking goes.

But that leads back to the above image where The Book of Life is below the fold on their own branded search query because there is another interpretation Google feels is more dominant.

The big problem with "brand as solution" is you not only have to pay to build a brand, but then you have to pay to protect it.

And the number of search "innovations" to try to siphon off some late funnel branded traffic and move it back up the funnel to competitors (to force the brand to pay again for their own brand to try to displace the "innovations") will only continue growing.

And at any point in time if Disney makes a movie using your brand name as the name of the movie, you are irrelevant and in need of a rebrand overnight, unless you commit to paying Google for your brand forever.

Having an offline location can be a point of strength and a point of differentiation. But it can also be a reason for Google to re-route user traffic through more Google owned & controlled pages.

Further, most large US offline retailers are doing horribly.

Almost all the offline growth is in stores selling dirt cheap unbranded imported stuff like Dollar General or Family Dollar & stores like Ross and TJ Maxx which sell branded item remainders at discount prices. And as Amazon gets more efficient by the day, other competitors with high cost structures & less efficient operations grow relatively less efficient over time.

The Wall Street Journal recently published an article about a rift between Wal-Mart & Procter & Gamble: “They sell crappy private label, so you buy Swiffer with a crappy refill,” said one of the people familiar with the product changes. “And then you don’t buy again.”

In trying to drive sales growth, P&G is resorting to some Yahoo!-like desperate measures, including meetings where "Some workers donned gladiator-like armor for the occasion."

Riding on other platforms or partners carries the same sorts of risks as trusting Google or Facebook too much.

Even owning a strong brand name and offline distribution does not guarantee success. Sears already spun out their real estate & they are looking to sell the Kenmore & Craftsman brands.

The big difference between the web and offline platforms is that the marginal cost of information is zero, so web platforms can quickly & cheaply spread to adjacent markets in ways that physically constrained offline players cannot. And some of the big web platforms have far more data on people than governments do. It is worth noting that one of the things that came out of the Snowden leaks is that spooks were leveraging Google's DoubleClick cookies for tracking users.

As desperate stores/platforms see slowing growth they squeeze for margins and seek to accelerate growth any way possible. Chasing growth ultimately leads to the disappearance of the very promise that differentiates them. I recently bought some "hand crafted" soaps on Etsy, which shipped from Shenzhen.

I am not sure how that impacts other artisanal soap sellers, but it makes me less likely to buy that sort of product from Etsy again.

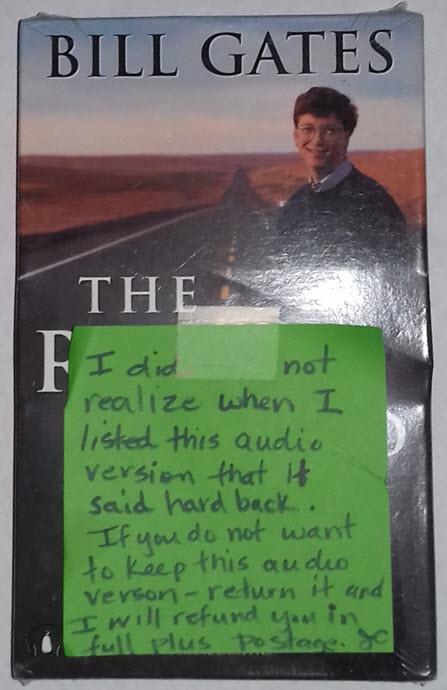

And for as much as I like shopping on Amazon, I was uninspired when a seller recently sent me this.

Amazon might usually be great for buyers & great for affiliates, but hearing how they are quickly expanding their private label offerings wouldn't be welcome news for a merchant who is overly reliant on them for sales in any of those categories.

The above sort of activity is what is going on in the real world even among brands which are not under attack.

The domestic economic landscape is getting quite ugly:

America’s economy today is in some respects more concentrated than it was during the Gilded Age, whose excesses prompted the Progressive Era reforms the FTC exemplifies. In sector after sector, from semiconductors and cable providers to eyeglass manufacturers and hotels, a handful of companies dominate. These giants use their market power to hike prices for consumers and suppress wages for workers, worsening inequality. Consolidation also appears to be driving a dramatic decline in entrepreneurship, closing off opportunity and suppressing growth. Concentration of economic power, in turn, tends to concentrate political power, which incumbents use to sway policies in their favor, further entrenching their dominance.

And the local abusive tech monopolies are now firmly promoting the TPP: "make it more difficult for TPP countries to block Internet sites" = countries should have less influence over the web than individual Facebook or Google engineers do.

In a land of algorithmic false positives that cause personal meltdowns and organizational breakdowns there is nothing wrong at all with that!

I kept waiting. For a year and a half, I waited. The revenues kept trickling down. It was this long terrible process, losing half overnight but then also roughly 3% a month for a year and a half after. It got to the point where we couldn’t pay our bills. That’s when I reached out again to Matt Cutts, “Things never got better.” He was like, “What, really? I’m sorry.” He looked into it and was like, “Oh yeah, it never reversed. It should have. You were accidentally put in the bad pile.”

Luckily the world can depend on China to drive growth and it will save us.

Or maybe there is a small problem with that line of thinking...

Beijing’s intellectual property regulator has ordered Apple Inc. to stop sales of the iPhone 6 and iPhone 6 Plus in the city, ruling that the design is too similar to a Chinese phone, in another setback for the company in a key overseas market.

Can any experts chime in on this?

Let's see...

First, there is Wal-Mart selling off their Chinese ecommerce operation to the #2 Chinese ecommerce company & then there's this from the top Chinese ecommerce company:

“The problem is the fake products today are of better quality and better price than the real names. They are exactly the same factories, exactly the same raw materials but they do not use the names.” - Alibaba's Jack Ma