Making Untrustworthy Data Trustworthy:

In social networks there tends to be an echo chamber effect. Stories grow broader and seem more important as people share them. Tagging and blog citation are inevitably going to push some stories where they don't belong. Spam will also push other stories.

RSS, Wikipedia, government content, press releases, and artful content remixing make automated content generation easy. Some people are going so far as to try to automate ad generation, while everyone and their dog wants to leverage a publishing network.

What is considered worthwhile data will change over time. When search engines rely too heavily on any one data source, that source gets abused, so they have to look for other data sources.

Search Engines Use Human Reviewers:

When John Battelle wrote The Search, he stated:

Yahoo is far more willing to have overt editorial and commercial agendas, and to let humans intervene in search results so as to create media that supports those agendas…. Google sees the problem as one that can be solved mainly through technology–clever algorithms and sheer computational horsepower will prevail. Humans enter the search picture only when algorithms fail–and then only grudgingly.

Matt Cutts reviewed the book, stating:

A couple years ago I might have agreed with that, but now I think Google is more open to approaches that are scalable and robust if they make our results more relevant. Maybe I’ll talk about that in a future post.

Matt also states that humans review sites for spam:

If there’s an algorithmic reason why your site isn’t doing well, you can definitely still come back if you change the underlying cause. If a site has been manually reviewed and has been penalized, those penalties do time out eventually, but the time-out period can be very long. It doesn’t hurt your site to do a reinclusion request if you’re not sure what’s wrong or if you’ve checked carefully and can’t find anything wrong.

Recently it has also become well known that Google outsources parts of its random query evaluation and spam recognition processes.

Other search engines have long used human editors. When Ask originally came out, it tried to pair questions with editorial answers.

Yahoo! has been using editors for a long time. Sometimes you may see referrers in your server logs like http://corp.yahoo.com/project/health-blogs/keepers. Some of the engines Yahoo! bought were also well known to use editors.

Editors don't scale as well as technology, though, so search engines will eventually rely more on how we consume and share data.

Ultimately Search is About Communication:

Many of the major search and internet-related companies are looking toward communication to help solve their problems. They make bank off the network effect by being the network, or by leveraging network knowledge better than other companies.

- eBay
  - has user feedback ratings
  - has product reviews (reviews.ebay.com)
  - bought Shopping.com
  - bought PayPal
  - bought Skype
- Yahoo!
  - partnered with DSL providers
  - bought Konfabulator
  - bought Flickr
  - My Yahoo! lets users save or block sites & subscribe to RSS feeds
  - offers social search, allowing users to share their tagged sites
  - bought Del.icio.us
  - has the Yahoo! 360 blog network
  - has an instant messenger
  - has Yahoo! Groups
  - offers email
  - has a bunch of APIs
  - has a ton of content they can use for improved behavioral targeting
  - pushes their toolbar hard
- Google
  - may be looking to build a WiFi network
  - has toolbars on millions of desktops and partners with software and hardware companies for further distribution
  - bought Blogger & Picasa
  - alters search results based on search history
  - allows users to block pages or sites
  - has Orkut
  - has an instant messenger with voice
  - has Google Groups
  - has Google Base
  - offers email
  - AdWords / AdSense / Urchin allow Google to track even more visitors than the Google Toolbar alone does
  - Google Wallet payment system to come
  - has a bunch of APIs allowing others to search
  - search history allows tagging
- MSN
  - has the operating system
  - browser with integrated search coming soon
  - may have been looking to buy a part of AOL
  - offers email
  - has an instant messenger
  - Start.com RSS aggregation
  - is starting its own paid search and contextual ad program based on user demographics
  - has a bunch of APIs
- AOL
  - AIM
  - AOL Hot 100 searches
  - leverages its equity to partner with Google for further distribution
- Ask
- Amazon
  - collects user feedback
  - offers a recommendation engine
  - allows people to create & share lists of related products
  - lists friend network
  - finds statistically improbable phrases from a huge corpus of text
  - allows users to tag A9 search results & save stuff with their search history

Even if search engines do not directly use any of the information from the social sharing and tagging networks, the fact that people are sharing and recommending certain sites will carry over into the other communication mechanisms that the search engines do track.

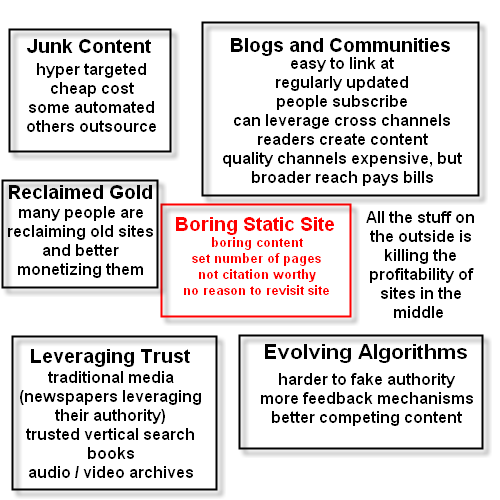

Things Hurting Boring Static Sites Without Personality:

What happens when Google has millions of books in its digital library, and has enough coverage and publisher participation to prominently place those books in the search results? Will obscure static websites even get found amongst the billions of pages of additional content?

What happens when somebody comment spams (or uses some other type of spam) on your behalf to try to destroy your site's rankings? If people do not know and trust you, it is going to be a lot harder to get back into the search indexes. Some will go so far as to create hate sites or blog-spam key people.

What happens when automated content reads well enough to pass the Turing test? Will people become more skeptical about what they purchase? Will they be more cautious about what they are willing to link to? Will search engines have to rely more on how ideas spread to determine what content they can trust?

Marginalizing Effects on Static Content Production:

As the web userbase expands, more people publish (even my mom is a blogger), and ad networks become more efficient, people will be able to make a living off smaller and smaller niche topics.

As duplicate content filters improve, search engines gather more user feedback, and more quality content is created, static, boring merchant sites will be forced out of the search results. Those who get others to talk about them by giving away information will be better able to sell products and information.

To a search engine, good content that nobody else cares about is simply not good content.

Moving from Trusting Age to Trusting Newsworthiness:

Most static sites, like boring general directories or other sites that are not amazing enough for people to cite, will lose market share and profitability as search engines learn to trust new feedback mechanisms more.

Currently, you can buy old sites with great authority scores and leverage that authority right to the top of Google's search results. Eventually it will not be that easy.

The trust mechanisms that search engines use are easy to defeat, and they matter less if your site has direct type-in traffic, subscribers, and people who frequently talk about you.

Cite this Post or Subscribe to this Site:

Some people believe that every post needs to earn new links or new subscribers. I think that posting explicitly with that intent may create a somewhat artificial channel, but it is a good baseline guideline for the types of posts that work well.

If you have enough interesting posts that people like enough to reference, then you can mix in a few posts that are not as great but are more profit oriented. The key is to usually post things that add value to the feed for many subscribers, or things that interest you.

Many times, just by writing an original post you can end up starting a great conversation. I recently started posting Q and As on my blog. I thought I might be adding noise to my channel, but my sales have doubled, a bunch of sites linked to my Q and As, and I have gotten nothing but positive feedback on them. So don't be afraid to test stuff.

You wouldn't believe how many people posted about Andy Hagans' post about making the SEO B list. Why was that post citation worthy? It was original, and people love to read about themselves.

At the end of the day it is all about how many legitimate reasons you can create for a person to subscribe to your site or recommend it to a friend.

Man vs Machine:

For most webmasters, search algorithms will inevitably become advanced enough that it is easier and cheaper to manipulate human emotion than to manipulate the algorithms directly. Using a dynamic publishing format that reminds people to come back and read again makes it easier to build the relationships necessary to succeed. To quote a friend:

This is what I think, SEO is all about emotions, all about human interaction.

People, search engineers even, try and force it into a numbers box. Numbers, math and formulas are for people not smart enough to think in concepts.

Disclaimer:

All articles are written to express an opinion or prove a point (or to give the writer an excuse to try to make money, although saying that SEO is becoming more about traditional public relations probably does not help me sell more SEO Books).

In some less competitive industries, dynamic sites may not be necessary, but if you want to do well on the web long term, you would do well to have at least one dynamic site where you can converse and show your human nature.

Earlier articles in this series: