SEM Rush has long been one of my favorite SEO tools. We wrote a review of SEM Rush years ago. They were best of breed back then & they have only added more features since, including competitive research data for Bing and for many local versions of Google outside of the core US results: Argentina, Australia, Belgium, Brazil, Canada, Denmark, Finland, France, Germany, Hungary, Japan, Hong Kong, India, Ireland, Israel, Italy, Mexico, Netherlands, Norway, Poland, Russia, Singapore, Spain, Sweden, Switzerland, Turkey, United Kingdom.

Recently they let me know that they started offering a free 2-week trial to new users.

Set up a free account on their website & enter the promotional code located in the image to the right.

For full disclosure, SEM Rush has been an SEO Book partner for years, as we have licensed their API to use in our competitive research tool. They also have an affiliate program & we are paid if you become a paying customer. However, we do not get paid for recommending their free trial, & their free trial doesn't even require a credit card, so it is a no-risk free trial. In fact, here is a search box you can use to instantly view a sampling of their data.

Quick Review

Competitive research tools can help you find a baseline for what to do & where to enter a market. Before spending a dime on SEO (or even buying a domain name for a project), it is always worth putting in the time to get a quick lay of the land & learn from your existing competitors.

- Seeing which keywords are most valuable can help you figure out which areas to invest the most in.

- Seeing where existing competitors are strong can help you find strategies worth emulating. Researching their performance may also reveal new pockets of opportunity & keyword themes which didn't show up in your initial keyword research.

- Seeing where competitors are weak can help you build a strategy to differentiate your approach.

Enter a competing URL in the above search box & you will quickly see where your competitors are succeeding, where they are failing & how you might beat them. SEM Rush offers:

- granular data across the global Bing & Google databases, along with over two dozen country-specific Google databases (Argentina, Australia, Belgium, Brazil, Canada, Denmark, Finland, France, Germany, Hungary, Japan, Hong Kong, India, Ireland, Israel, Italy, Mexico, Netherlands, Norway, Poland, Russia, Singapore, Spain, Sweden, Switzerland, Turkey, United Kingdom, United States)

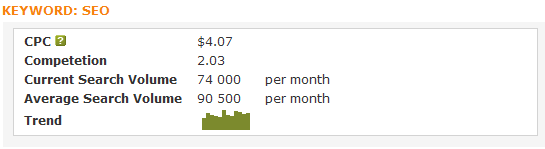

- search volume & ad bid price estimates by keyword (which, when combined, function as an estimate of keyword value) for over 120,000,000 keywords

- keyword data by site or by page across 74,000,000 domain names

- the ability to look up related keywords

- the ability to directly compare domains against one another to see relative strength

- the ability to compare organic search results against paid search ads, so data from one channel can inform your strategy in the other

- the ability to look up sites which have a similar ranking footprint as an existing competitor to uncover new areas & opportunities

- historical performance data, which can help you determine whether a site has had manual penalties or algorithmic ranking filters applied against it

- a broad array of newer features, like tracking video ads, display ads, product listing ads (PLAs), backlinks, etc.

Longer, In-Depth Review

What is SEM Rush?

SEM Rush is a competitive research tool which helps you spy on how competing sites are performing in search. The big value add SEM Rush has over a tool like Compete.com is that it shows CPC estimates (from Google's Traffic Estimator tool) & estimated traffic volumes (from the Google AdWords keyword tool) alongside each keyword. Thus, rather than only showing the traffic distribution to each site, this tool can show how keyword value is distributed across sites (estimated CPC * estimated traffic).
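The keyword value calculation can be sketched in a few lines of Python. This is a minimal illustration, not SEM Rush's actual method: the `KeywordRow` fields, the `traffic_value` helper & all of the numbers are hypothetical, and it assumes you already have an estimated click count & CPC for each keyword a site ranks for.

```python
from dataclasses import dataclass


@dataclass
class KeywordRow:
    keyword: str
    est_monthly_clicks: float  # estimated organic clicks per month (hypothetical field)
    cpc: float  # estimated cost per click in USD (hypothetical field)


def traffic_value(rows):
    """Return the total estimated monthly value and a per-keyword breakdown.

    Value per keyword = estimated clicks * estimated CPC, i.e. roughly what
    those clicks would cost if bought through AdWords.
    """
    per_keyword = [(r.keyword, r.est_monthly_clicks * r.cpc) for r in rows]
    # Highest-value keywords first, so the value distribution is easy to scan.
    per_keyword.sort(key=lambda kv: kv[1], reverse=True)
    total = sum(value for _, value in per_keyword)
    return total, per_keyword


# Made-up example numbers, not real SEM Rush data.
rows = [
    KeywordRow("keyword research", 500, 3.0),
    KeywordRow("seo tools", 1000, 4.0),
    KeywordRow("link building", 200, 6.5),
]
total, breakdown = traffic_value(rows)
print(total)      # 6800.0
print(breakdown)  # [('seo tools', 4000.0), ('keyword research', 1500.0), ('link building', 1300.0)]
```

Sorting by value rather than by clicks is the point: a keyword with modest traffic but a high CPC can outrank a high-traffic, low-CPC keyword.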

As Google has started withholding some referral data, the value of these 3rd party tools has increased.

Normalizing Data

Using these estimates generally does not produce overall traffic totals as accurate as those Compete.com gets from its data licensing strategy, but if you own a site and know what it earns, you can set up a ratio to normalize the differences (at least to some extent: within the same vertical, for sites of similar size, using a similar business model).

One of our sites that earns about $5,000 a month shows a Google traffic value of close to $20,000 a month, which gives a ratio of:

$5,000 / $20,000 = 1/4 = 0.25

A similar site in the same vertical shows a Google traffic value of $10,000 a month, so its estimated earnings are:

$10,000 * 0.25 = $2,500 a month
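Here is the same normalization as a short Python sketch. The function names are hypothetical, & the result is only as good as the assumption that both sites monetize their traffic in a similar way.

```python
def normalization_ratio(known_earnings, known_traffic_value):
    """Ratio of what a site you own actually earns to its estimated Google traffic value."""
    if known_traffic_value <= 0:
        raise ValueError("estimated traffic value must be positive")
    return known_earnings / known_traffic_value


def estimate_earnings(competitor_traffic_value, ratio):
    """Scale a competitor's estimated traffic value by your own ratio.

    Only meaningful for sites in the same vertical, of similar size,
    using a similar business model.
    """
    return competitor_traffic_value * ratio


ratio = normalization_ratio(5_000, 20_000)
estimate = estimate_earnings(10_000, ratio)
print(ratio)     # 0.25
print(estimate)  # 2500.0
```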

A few big advantages SEM Rush has over Compete.com and services like Quantcast are that:

- they focus exclusively on estimating search traffic

- you get click volume estimates and click value estimates right next to each other

- they help you spot valuable up-and-coming keywords where you might not yet get much traffic because you rank on page 2 or 3

Disclaimers With Normalizing Data

It is hard to monetize traffic as well as Google does, so in virtually every competitive market your profit per visitor (after expenses) will generally be less than Google's. Some reasons why:

- In some markets people are losing money to buy market share, while in other markets people may overbid just to block out competition.

- Some merchants simply have fatter profit margins and can afford to outbid affiliates.

- It is hard to integrate advertising into your site anywhere near as aggressively as Google does while still creating a site that can gather enough links (and other signals of quality) to take a #1 organic ranking in competitive markets, so there will typically be some amount of slippage.

- A site that offers editorial content wrapped in light ads will not convert eyeballs into cash anywhere near as well as a lead generation oriented affiliate site would.

SEM Rush Features

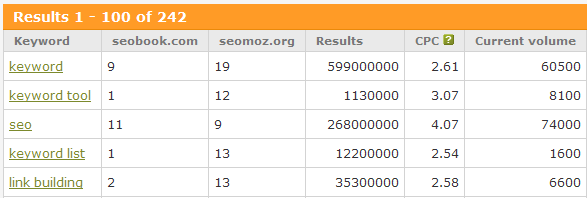

Keyword Values & Volumes

As mentioned above, this data is scraped from the Google Traffic Estimator and the Google Keyword Tool. More recently, Google folded its search-based keyword tool features into its regular keyword tool, & this data has become much harder to scrape (unless you are already sitting on a lot of it, like SEM Rush is).

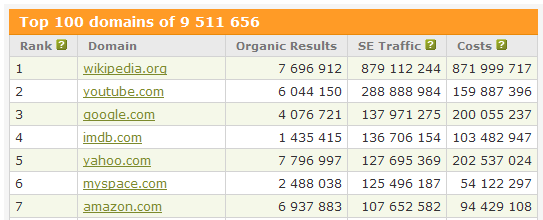

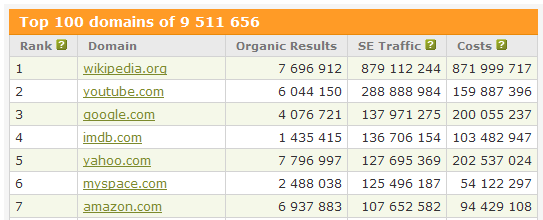

Top Search Traffic Domains

A list of the top 100 domain names estimated to receive the highest value downstream traffic from Google.

You could get a similar list from Compete.com's Referral Analytics by running a downstream report on Google.com, although I think that might also include traffic from some of Google's non-search properties like Reader. Since SEM Rush looks at both traffic volume and traffic value, it gives you a better idea of the potential profits in any market than looking at raw traffic stats alone would.

Top Competitors

This report lists sites that rank for many of the same keywords that SEO Book ranks for.

Most competitors are quite obvious, but sometimes the report will highlight competitors you didn't know about, & in some cases those competitors are also working in other fertile keyword themes that you may have missed.
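A rough way to build this kind of list from your own keyword data is to count shared keywords per domain. The sketch below is a hypothetical illustration (the function, domains & keyword sets are all made up), and SEM Rush's own competitor scoring may weigh things differently. It also returns the keywords each competitor ranks for that you don't, which is where missed keyword themes show up.

```python
def rank_competitors(my_keywords, competitors, min_shared=1):
    """Rank domains by how many of my ranking keywords they also rank for.

    Returns (domain, shared_count, keywords_they_have_that_i_lack) tuples,
    most overlapping first.
    """
    ranked = []
    for domain, their_keywords in competitors.items():
        shared = my_keywords & their_keywords
        if len(shared) >= min_shared:
            # Keywords only the competitor ranks for: possible themes you missed.
            missed = sorted(their_keywords - my_keywords)
            ranked.append((domain, len(shared), missed))
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked


# Hypothetical keyword sets for illustration only.
mine = {"seo tools", "keyword research", "link building", "ppc"}
others = {
    "a.example": {"seo tools", "keyword research", "web design"},
    "b.example": {"seo tools", "keyword research", "link building", "ppc", "content marketing"},
    "c.example": {"recipes"},
}
result = rank_competitors(mine, others)
for row in result:
    print(row)
# ('b.example', 4, ['content marketing'])
# ('a.example', 2, ['web design'])
```

Counting raw overlap is the simplest choice; weighting each shared keyword by its estimated value would favor competitors fighting over the most lucrative terms.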

Overlapping Keywords

This report lists keywords where SEO Book and SEOmoz compete in the rankings.

These sorts of charts are great for trying to show clients how site x performs against site y in order to help allocate more resources.

Compare AdWords to Organic Search

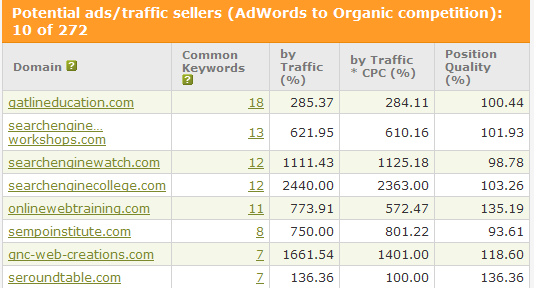

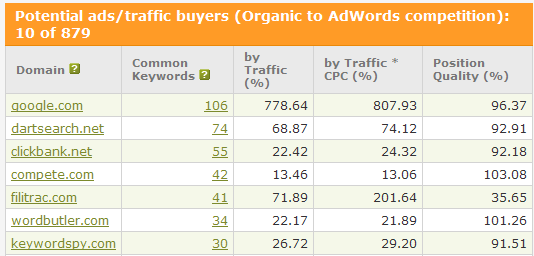

These are sites that rank organically for keywords that SEO Book is buying through AdWords.

And these are sites that buy AdWords ads for keywords that SEO Book ranks for organically.

Before SEM Rush came out, there were not many (or perhaps any?) tools that made it easy to compare AdWords against organic search.
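The two cross-channel reports boil down to set intersections between one channel's keywords and the other's. This Python sketch assumes you have keyword lists per domain for both organic rankings & AdWords ads; the helper name & all of the data are hypothetical and do not reflect an SEM Rush API.

```python
def sites_overlapping(my_keywords, keywords_by_site):
    """Map each domain to the keywords it shares with my_keywords (empty overlaps dropped)."""
    overlap = {}
    for domain, their_keywords in keywords_by_site.items():
        shared = my_keywords & their_keywords
        if shared:
            overlap[domain] = sorted(shared)
    return overlap


# Hypothetical data: my paid & organic keywords, plus other sites' keywords per channel.
my_paid = {"seo training", "keyword tool"}
my_organic = {"seo book", "link building"}
organic_by_site = {"x.example": {"keyword tool", "rank checker"}, "y.example": {"recipes"}}
paid_by_site = {"z.example": {"link building", "seo software"}}

# Sites that rank organically for keywords I am buying through AdWords.
rank_for_my_ads = sites_overlapping(my_paid, organic_by_site)
# Sites that buy AdWords ads for keywords I already rank for.
bid_on_my_rankings = sites_overlapping(my_organic, paid_by_site)
# Keywords I pay for but do not rank for: candidate SEO targets.
paid_only = sorted(my_paid - my_organic)
print(rank_for_my_ads)     # {'x.example': ['keyword tool']}
print(bid_on_my_rankings)  # {'z.example': ['link building']}
print(paid_only)           # ['keyword tool', 'seo training']
```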

Start Your Free Trial Today

SEM Rush Pro costs $79 per month (or $69 per month on a recurring plan). Since two weeks is roughly half a month, this free trial is worth about $35 to $40.

Take advantage of SEM Rush's free 2-week trial today.

Set up a free account on their website & enter the promotional code in the image located to the right.

If you have any questions about getting the most out of SEM Rush feel free to ask in the comments below. We have used their service for years & can answer just about any question you may have & offer a wide variety of tips to help you get the most out of this powerful tool.