Recently, we did a post on the benefits of using PPC for Local SEO. In this post, we are going to go through how to structure a local PPC campaign for a small business.

This post is designed to show you how easy it really is to get a local PPC campaign up and running. If you want to streamline the campaign-building process, choosing the right tools upfront can be a big help. Landing page creation can be a time-consuming and expensive task, but it doesn't have to be.

Step 1: Choosing a Landing Page Tool

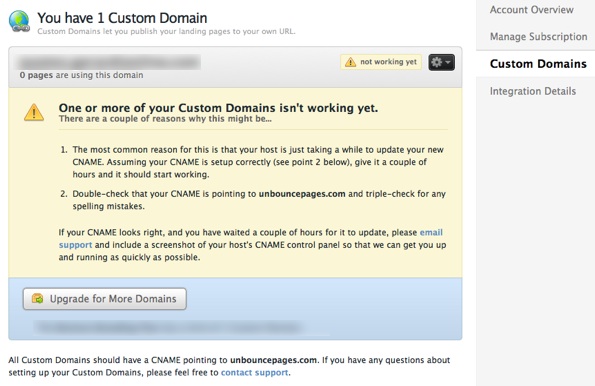

For quickly generating nicely designed landing pages, Unbounce is tough to beat. It offers a variety of paid plans and hosts your pages on its own servers. You can use a CNAME record so that these pages appear to live on your own domain, under a sub-domain such as pages.yourdomain.com. This is easy to set up through your DNS host, and you can get the instructions and track the record's status right from the Unbounce dashboard.
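DNS only lets a CNAME point one host name at another, and the record cannot sit at the bare (apex) domain, which is why the pages end up on a sub-domain. The sketch below builds a BIND-style zone-file line under that rule. `pages`, `example.com`, and `landing-provider.example` are placeholders; the real target host is whatever the Unbounce dashboard tells you to use.

```python
def cname_record(subdomain: str, domain: str, target: str, ttl: int = 3600) -> str:
    """Build a BIND-style CNAME line pointing subdomain.domain at target.

    All names are placeholders; use the target host your landing page
    provider gives you.
    """
    if not subdomain:
        # A CNAME cannot live at the zone apex (the bare domain), because the
        # apex must also carry SOA and NS records.
        raise ValueError("CNAME records need a sub-domain, not the bare domain")
    fqdn = f"{subdomain}.{domain.rstrip('.')}."
    return f"{fqdn} {ttl} IN CNAME {target.rstrip('.')}."


print(cname_record("pages", "example.com", "landing-provider.example"))
# pages.example.com. 3600 IN CNAME landing-provider.example.
```

Once the record is live at your DNS host, the dashboard status should change after propagation.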

I just set up this new account, so it will take a little while for the record to propagate. Once you arrive at the landing page dashboard, just click the big shiny green button to create a new page:

You can choose from any of Unbounce's pre-designed templates (which are quite nice) or you can start with a blank template.

There are quite a few templates to choose from, but since this is largely a lead generation campaign (for a local insurance agent), I'm going to grab one of the spiffy lead generation templates.

Customizing Unbounce

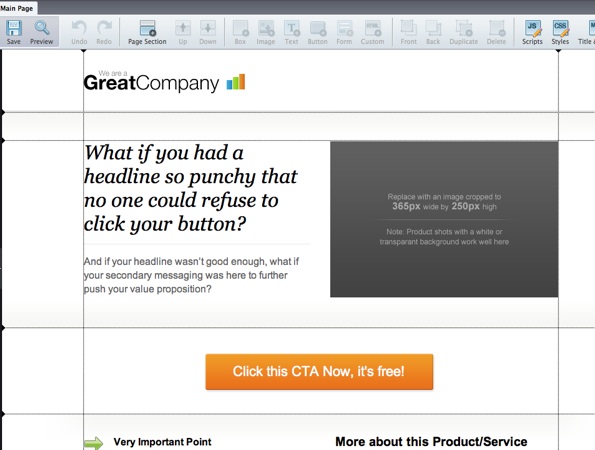

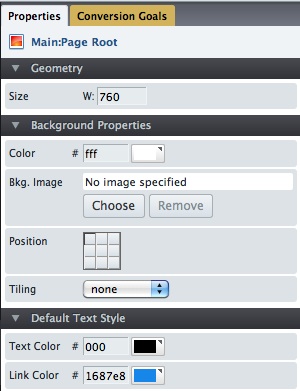

Unbounce has a Photoshop-like interface which makes changing text and imagery a breeze:

For a local insurance agent who sells maybe 3 or 4 different products to a small handful of towns, setting up his or her landing pages is really quite painless.

Alternative to Unbounce (for WordPress Sites)

Once you start generating thousands of unique visits a month (hopefully!), Unbounce can get pricey. At that point, when you are presumably making plenty of sales from PPC, you might want to invest in a designer to build custom landing pages rather than paying anywhere from one hundred to several hundred dollars per month for a service like Unbounce.

If you are using WordPress and don't mind getting your hands into some basic coding, then you should check out Premise (a relatively new WordPress plugin from Copyblogger Media).

After some basic customization of your template's look and feel, you can quickly generate good-looking, solid landing pages with copywriting advice built right in. Premise also comes with a ton of custom, well-designed graphics from their in-house graphic artist, and the plugin works with any WordPress theme.

Geordie did a review of Premise over at PPC Blog.

Step 2: Keyword List Generation

With local keyword research, you will almost certainly find that keyword tools return very little data. There are a few tips you can use when doing local keyword research to get a better handle on a keyword list suitable for a PPC campaign.

Throughout this post we will refer to a local insurance agency, but the same approach applies to any business pursuing local PPC as an option.

Competing Sites

One of the first things I do is check out the local sections of bigger websites. In insurance, for example, there are plenty of large sites that act as lead aggregators and target local keywords (usually down to the state level).

You can easily visit one of these sites and take a quick peek at its title tag and on-page copy to see which keywords it is targeting. In many markets, insurance included, there are related keywords that overlap; car insurance and auto insurance are the classic example in the insurance industry.

Looking at a few competing sites might give you an idea of whether or not most sites are pursuing keyword x versus keyword y. This can be a helpful data point to use when constructing your PPC campaign.

Google Trends

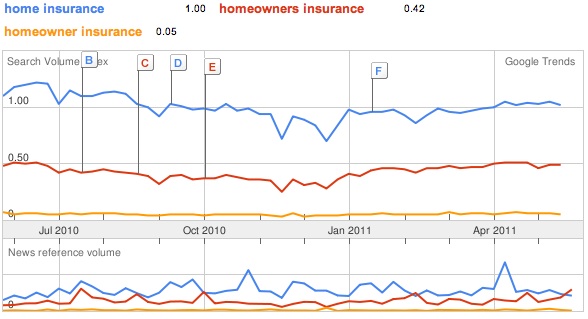

Google Trends is a nice tool to use when you are trying to discern between similar keywords and it offers trends by region, state, and city. For example, here are some charts for home insurance versus homeowners insurance versus homeowner insurance:

Here is the section that shows trends by location (you can filter further, down to the city level):

I usually like to keep multiple variants in the PPC campaign at the start, just to test things out, but using Trends to see which variant is appreciably higher in volume can help you decide which keywords to research further.

Your Own Knowledge

You know how your customers talk about your products, and you know your industry's lingo best. That is usually where I start a local keyword list. Then I move on to Google Trends and competing sites to see how the tools' data compares with my initial take on a possible keyword list.

I then expand that list further by entering those core terms into the Google AdWords Keyword Tool and the SeoBook Keyword Tool (our tool is powered by Wordtracker) to see which keywords I may be overlooking.

You can search those tools for local terms like "boston car insurance", but once you dig past a core term like that one, the data for local keywords becomes almost non-existent.

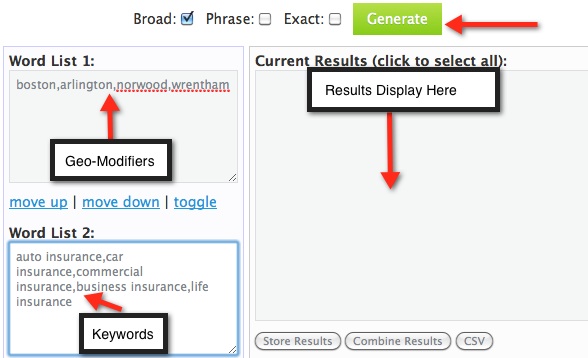

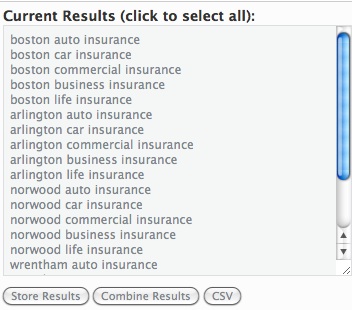

I recommend taking the non-geo-modified keywords you find through the processes above and entering them into a keyword list generator, along with the names of the towns, cities, and states you serve. You can use our free keyword list generator :)
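Under the hood, a keyword list generator is essentially a cross product: every core term paired with every location, in both word orders. A minimal Python sketch, with `mystic` and `groton` used purely as example town names (not the client's actual service area):

```python
from itertools import product


def geo_modified_keywords(core_terms, locations):
    """Pair every core term with every location, location first and location last."""
    keywords = []
    for term, place in product(core_terms, locations):
        keywords.append(f"{place} {term}")  # e.g. "mystic car insurance"
        keywords.append(f"{term} {place}")  # e.g. "car insurance mystic"
    return keywords


print(geo_modified_keywords(["car insurance", "home insurance"], ["mystic", "groton"]))
```

Two core terms and two towns already yield eight keywords, which is why a generator saves time even on a small campaign.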

SeoBook Keyword List Generator

This campaign happens to be in a small, rural area. So the strategy here is to get feedback ASAP to see if we are dealing with any palpable volume which would warrant further investment into a PPC campaign (outside of just testing search volume for SEO purposes).

Let's say this agency sells car, home, life, and business insurance in 5 towns. There are some local PPC tools out there, but a campaign this size won't be all that time-consuming to set up.

Instead, you can use our free keyword list generator, group everything together, and export the list as broad, phrase, or exact match :)

Once you click "Generate" you get the results displayed like this:

Export that to CSV and you are good to go!
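AdWords marks match types with punctuation: a broad match keyword is written as-is, phrase match goes in double quotes, and exact match goes in square brackets. If you ever want to reproduce the grouping-and-export step yourself, a sketch might look like this; the two-column `Ad group`/`Keyword` layout is an assumption for illustration, not a specific AdWords import format.

```python
import csv
import io


def format_match_type(keyword: str, match_type: str) -> str:
    """Apply AdWords keyword syntax: broad as-is, "phrase" in quotes, [exact] in brackets."""
    if match_type == "broad":
        return keyword
    if match_type == "phrase":
        return f'"{keyword}"'
    if match_type == "exact":
        return f"[{keyword}]"
    raise ValueError(f"unknown match type: {match_type}")


def keywords_to_csv(rows, match_types=("broad", "phrase", "exact")) -> str:
    """Write (ad_group, keyword) pairs to CSV text, one row per requested match type."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Ad group", "Keyword"])  # assumed header, not an official format
    for ad_group, keyword in rows:
        for match_type in match_types:
            writer.writerow([ad_group, format_match_type(keyword, match_type)])
    return buffer.getvalue()


print(keywords_to_csv([("Mystic Auto", "mystic car insurance")]))
```

In the raw file the phrase-match row shows up with tripled quotes; that is just standard CSV escaping, and a spreadsheet or CSV reader turns it back into "mystic car insurance".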

Because the volume is small, we want to hit as many variations as possible. With local keywords you can use the following modifiers:

- state spelled out or abbreviated

- zip code

- town or city

- various combinations of the above 3 elements
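Here is one way to enumerate those modifiers for a single town, as a hedged sketch: the town, state, abbreviation, and ZIP code below are example values, and the choice of which combinations to include is a judgment call rather than a fixed rule.

```python
def location_modifiers(town, state, state_abbr, zip_codes):
    """Geo modifiers for one town and its state: each element alone, then combinations."""
    modifiers = [town, state, state_abbr, *zip_codes]              # single elements
    modifiers += [f"{town} {state}", f"{town} {state_abbr}"]       # town + state
    modifiers += [f"{town} {zip_code}" for zip_code in zip_codes]  # town + ZIP
    return modifiers


def modified_keywords(products, modifiers):
    """Prefix every product keyword with every modifier."""
    return [f"{modifier} {product}" for modifier in modifiers for product in products]


mods = location_modifiers("mystic", "connecticut", "ct", ["06355"])
print(modified_keywords(["car insurance"], mods))
```

When you run this for several towns, the bare state and abbreviation modifiers repeat, so deduplicate them; they belong in a single state-level ad group anyway.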

You can organize your ad groups by a particular variable, such as city/town or product. Because modifiers play a huge role in this campaign, and to keep things cleaner for me, I will create ad groups by town, plus one for the state.

Step 3: Setting Up the Campaign

There are some initial steps you'll have to go through when you set up your AdWords account.

Choosing Local Settings

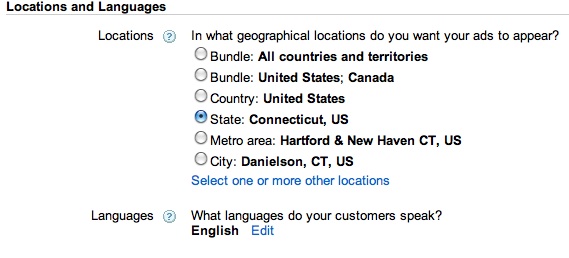

There are 2 areas for location targeting:

- Location and Languages - where you would select specific language and location settings (country, state, town, custom map, etc.)

- Advanced Settings - where you can target by physical location and search intent, just physical location, or just search intent. You can also use Exclusion settings where you can exclude by physical location.

In the next section I'll show you why I chose certain settings for this specific campaign and when other options might be appropriate.

Location and Languages

With Location and Languages you get the following options:

In this campaign I am targeting one area of a particular state for a local business. There is no set rule here; base your choice on the client's situation.

For instance, this is a local insurance agent with 2 offices in one part of the state. It generally isn't wise to go after towns that are not within driving distance of the offices.

The selling point of a local agent is local service, a place you can drive to in order to talk with your agent about your policy, and so on. Most local agents do not have the technological ability to compete with direct writers like Geico and Progressive with respect to being able to adequately serve customers across the country. If the agency had multiple offices across the state I would reconsider my position on location targeting.

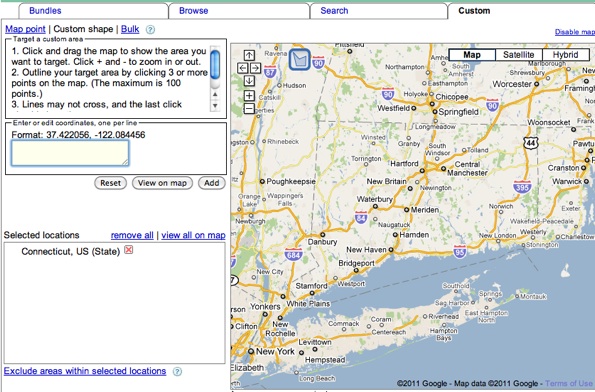

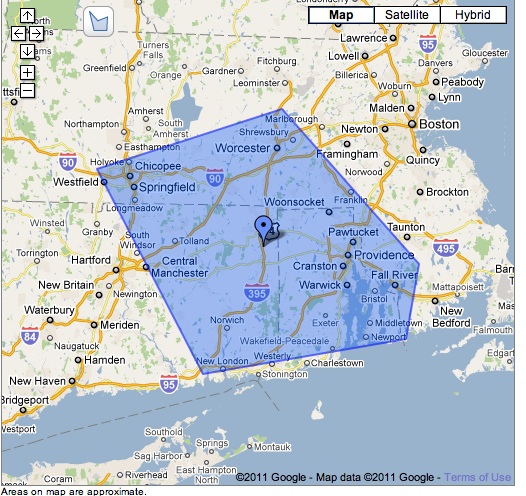

In any case, we are using broad-matched, geo-modified keywords, and the surrounding states may have one or two overlapping town names, so I'm going to use a custom map for location targeting. The custom map works here because it can cover most of the areas where the client's prospective customers live or work.

Alternatively, I could choose Connecticut, Massachusetts, and Rhode Island as states, but then you could run into overlapping town names (the same concern that comes with targeting the whole country). You could solve that by adding the state modifier (CT or Connecticut in this case) to your keywords, but that defeats the goal of starting with a low-effort campaign (just city/town plus keyword, broad matched) to see whether there's any volume in the first place.

Just click on "Select one or more locations" and click the Custom tab in the dialog box that opens up:

You can click or drag to create your custom map. I like to click (must be 3 or more clicks) because I find it to be more precise when trying to isolate a location.
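A custom map is a polygon, which is why it takes at least three clicks: three points is the fewest that enclose an area. To illustrate what "inside the custom map" means, here is a standard ray-casting point-in-polygon test. This is only a sketch of the geometry, treating latitude and longitude as flat coordinates (reasonable over a few towns); it is not how AdWords itself resolves where a searcher is, and the coordinates are made up.

```python
Point = tuple[float, float]  # (latitude, longitude)


def inside_custom_area(point: Point, polygon: list[Point]) -> bool:
    """Ray-casting test: a point is inside if a ray from it crosses the boundary an odd number of times."""
    if len(polygon) < 3:
        raise ValueError("a custom area needs at least 3 points")
    lat, lon = point
    inside = False
    j = len(polygon) - 1  # previous vertex, so edge (j, i) wraps around
    for i in range(len(polygon)):
        lat_i, lon_i = polygon[i]
        lat_j, lon_j = polygon[j]
        # Only edges that straddle the point's latitude can be crossed.
        if (lat_i > lat) != (lat_j > lat):
            # Longitude at which this edge crosses the point's latitude.
            cross_lon = lon_i + (lat - lat_i) * (lon_j - lon_i) / (lat_j - lat_i)
            if lon < cross_lon:  # ray points east (increasing longitude)
                inside = not inside
        j = i
    return inside


area = [(41.0, -72.5), (41.0, -71.8), (41.6, -71.8), (41.6, -72.5)]  # made-up rectangle
print(inside_custom_area((41.3, -72.0), area))  # True
print(inside_custom_area((42.0, -72.0), area))  # False
```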

I've created a custom map targeting the locations where customers likely live and work:

By their very nature most local businesses service a specific, geographical segment of a particular market. In sticking with the insurance example, let's say you have an agency in Boston, Cape Cod, Springfield, and Worcester (hitting most of the major counties in Massachusetts).

With multiple locations, you'd want to run a separate campaign targeting each location, and perhaps an extra campaign that uses no geo-modifiers and relies on state-level location targeting instead. That way, even if you match a broad keyword from another area of the state, you are still likely to have an office within driving distance of that searcher.

You could easily highlight the multiple office locations on a landing page, whereas a competing agency with a single location down on the Cape would generally benefit very little, if at all, from broad geographic targeting (for example, targeting the whole state with non-geo keywords).

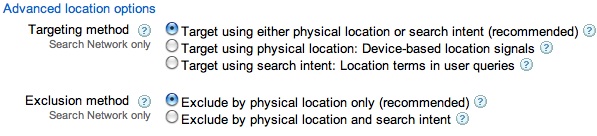

Advanced Location Options

You have five options here:

You can read more about the options here. I'm going to leave them at their defaults because I'm targeting with geo-modified keywords only.

I may come back to these options if I find the search volume is not high enough to warrant continued PPC investment. In this market, non-geo modified keywords are brutally priced for a small, local agent so I think starting a bit more cautiously is a good idea.

Google makes a good point about using these options:

These targeting methods might conflict with location terms in keywords. You should only use either the advanced targeting options or use keywords to accomplish your campaign goals, but not both methods.

So if you've got a campaign that exclusively uses geo-modified keywords and targets a specific locale, you could really mess things up if you add another layer of targeting on top of that (which would be unnecessary anyway).
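Before launch, a quick check along these lines can catch the overlap Google warns about. `targeting_conflicts` is a hypothetical helper, not an AdWords feature: it simply flags keywords containing a location term whenever you have also switched on the advanced location options.

```python
def targeting_conflicts(keywords, location_terms, advanced_targeting_on):
    """Hypothetical pre-launch check: flag geo-modified keywords when advanced
    location options are also enabled (Google advises using one or the other)."""
    if not advanced_targeting_on:
        return []
    # Pad with spaces so "ct" matches the word "ct" but not the "ct" inside "product".
    terms = [f" {term.lower()} " for term in location_terms]
    return [kw for kw in keywords if any(term in f" {kw.lower()} " for term in terms)]


keywords = ["mystic car insurance", "car insurance", "new london home insurance"]
print(targeting_conflicts(keywords, ["Mystic", "New London", "CT"], advanced_targeting_on=True))
# ['mystic car insurance', 'new london home insurance']
```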

Businesses that serve a single community wouldn't benefit much, if at all, from these options. But a small or local business that does business all over the country, promotes travel to its area, or ships products to different locations can benefit from them, as described in Google's Napa Valley wine example.

Say you are a chocolatier in Vermont and you want to run multiple campaigns for Vermont (exclusively with location matching and geo-modified keywords), plus other campaigns targeting Canada and maybe the US as well, while excluding Vermont and/or geo-modified searches. You can do these sorts of things with the variety of location options AdWords provides.

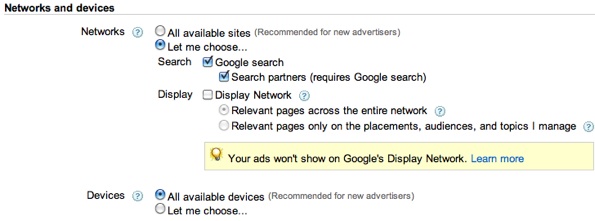

Network and Devices

By default Google opts you in to the search network and display network. I would avoid the display network at this point and just focus on the search network for these particular keywords:

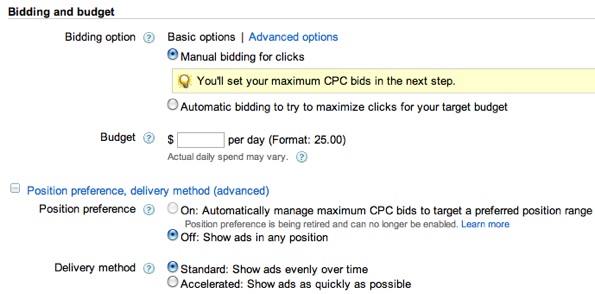

Bidding and Budget

For a basic starter campaign like this, and for many campaigns quite frankly, you can skip some of the advanced bidding options and just use manual bidding and "show ads evenly over time".

Generally, you should be comfortable losing somewhere between the high hundreds and a couple thousand dollars of AdWords spend to testing and the like (depending on your cost per click). Even though PPC is a much more targeted form of advertising than, say, a local newspaper ad, you still have to go in expecting to lose a little upfront in order to find the sweet spot for your market.

If you can, I would budget $100.00 per day for a month and take a peek at the account each day to make sure things are running smoothly. You'll have to pay a bit more upfront on a brand-new account while it gains trust in the eyes of Google. The beauty of a daily budget is that you can change it at any time. After a month or so you should have a pretty good idea of what is going on, unless your business is in its off-season.
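The arithmetic behind that budget is simple enough to sketch. Assuming, purely for illustration, a $5.00 average cost per click (your market's real CPC may be far higher or lower) and a budget that is fully spent every day:

```python
def budget_projection(daily_budget: float, days: int, avg_cpc: float) -> tuple[float, int]:
    """Total spend and approximate click count if the daily budget is fully spent each day."""
    total_spend = daily_budget * days
    clicks = int(total_spend // avg_cpc)  # whole clicks only
    return total_spend, clicks


spend, clicks = budget_projection(daily_budget=100.00, days=30, avg_cpc=5.00)  # $5 CPC is an assumption
print(f"${spend:,.2f} buys roughly {clicks} clicks")  # $3,000.00 buys roughly 600 clicks
```

In a small, rural market the campaign may not spend its full daily budget at all, which is itself useful feedback about search volume.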

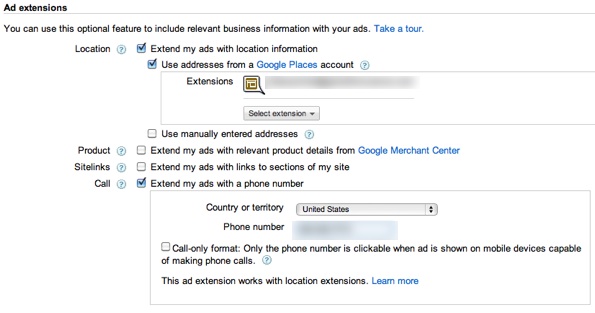

Location Extensions

I would use both the address extension (from Google Places; if you're a local small business, you absolutely should be in Google Places) and the phone extension. They help your ad stand out against ads from large insurance companies with no local presence and from insurance affiliates with no location at all.

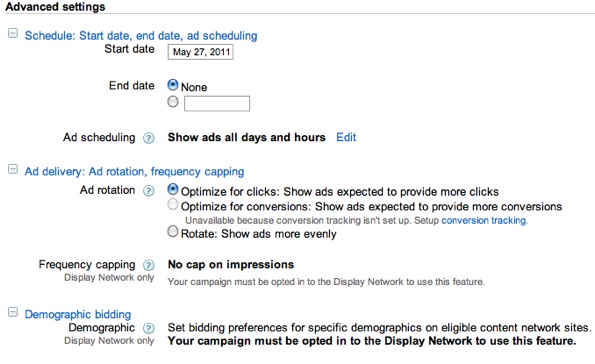

Advanced Settings

Starting off, I would recommend leaving these at their defaults and coming back to adjust them later if you see conversions heavily weighted toward weekends versus weekdays, or daytime versus nighttime. With a local campaign, though, you might not have enough data for a while to make those kinds of data-driven decisions.

Some of the options are N/A anyway because we are not using the content network.

Step 4: Ad Targeting Options

The examples given above were for keywords being geo-modified because the advertiser is mainly a local business servicing a very specific area of the state and the non-geo modified keywords for this market are brutally priced.

For most local businesses, especially to start, I recommend using a geo-modified keyword campaign and then moving into setting up another campaign which is more of a keyword driven campaign rather than a geographically driven campaign (from a keyword standpoint). Why? Mainly for the examples mentioned above and because starting out with a huge AdWords campaign can be overwhelming to a new user, which can lead to poor management and a poor account quality score right off the bat.

Once you get comfortable with AdWords and you are seeing some early success (or failure, like obvious lack of volume for instance) I would begin to consider moving into setting up that second campaign. By failure I specifically mean a lack of volume early on. If you are consistently showing in the top 1-5 spots in AdWords but are getting very, very little traffic then that means you need to broaden your campaign. If you are getting traffic but aren't converting then you need to tweak and test elements on your landing page and maybe consider other keywords you haven't bid on yet.

I would suggest launching the geo-targeted campaign first and doing the groundwork for a broader, non-geo campaign in the background (keyword research, building landing pages, thinking about ad copy and ad group structure, and so on). Obviously, this is quite different for a company that happens to be located in Anytown, USA but mainly sells nationally with little or no local presence.

Step 5: Setting Up the Ad Groups

Eventually you may find yourself adding campaigns for non-geo modified keywords while utilizing the targeting options mentioned above, or maybe you want to target just the Display Network. In this case, especially for small business owners who are working with a campaign for the first time, simplicity is preferred in the face of the many options provided within the AdWords System.

The idea with ad groups is to align them as tightly as possible with specific keywords. Using the town or city as the main grouping variable, followed by the product, gives us very tight ad groups. It also lets us create a landing page that is super targeted to the keyword being bid on. For example, take two towns and two products (auto insurance and home insurance).

I would set up the ad groups as follows:

- (ad group) Town 1 Auto, (keywords) Town 1 auto insurance | Town 1 car insurance

- (ad group) Town 1 Home, (keywords) Town 1 home insurance | Town 1 homeowner insurance *as well as other closely related terms like home owner, home owners, condo, renters, and tenants

- (ad group) Town 2 Auto, (keywords) Town 2 auto insurance | Town 2 car insurance

- (ad group) Town 2 Home, (keywords) Town 2 home insurance | Town 2 homeowner insurance *as well as other closely related terms like home owner, home owners, condo, renters, and tenants

- (ad group) State/State Abbreviation, (keywords) State (Massachusetts) auto insurance | State Abbreviation (MA) auto insurance (and so on)

I would repeat this process for as many towns, states, and product variations as needed. In your copy and imagery, you can speak to similar words like auto and car, as well as to similar products like homeowners, condo, and renters insurance.
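The same structure can be generated programmatically. In this sketch the town names are examples and the product-to-keyword mapping is an assumed subset of the variants mentioned above; it creates one ad group per town and product, plus a single state-level ad group.

```python
# Example keyword variants per product; extend with tenants, home owners, etc.
PRODUCTS = {
    "Auto": ["auto insurance", "car insurance"],
    "Home": ["home insurance", "homeowner insurance", "homeowners insurance",
             "condo insurance", "renters insurance"],
}


def build_ad_groups(towns, state, state_abbr, products=PRODUCTS):
    """One ad group per town and product, plus one state-level ad group."""
    ad_groups = {}
    for town in towns:
        for product, terms in products.items():
            ad_groups[f"{town} {product}"] = [f"{town.lower()} {term}" for term in terms]
    ad_groups[f"{state}/{state_abbr}"] = [
        f"{place.lower()} {term}"
        for place in (state, state_abbr)
        for terms in products.values()
        for term in terms
    ]
    return ad_groups


for name, keywords in build_ad_groups(["Mystic", "Groton"], "Connecticut", "CT").items():
    print(name, "->", keywords)
```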

Since we targeted a specific area on the map, we can target just state level searches without worrying about someone searching from an area we cannot service.

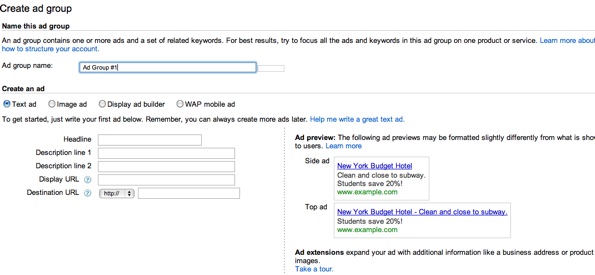

Step 6: AdWords Copy

When you first set up the campaign you get the page where you can name the first ad group and set up the sales copy, with helpful ad previews on the right:

Here, you will enter:

- Headline

- Description line 1

- Description line 2

- Display URL

- Destination URL

These are fairly self-explanatory. Ideally, your headline should contain your targeted keyword (or most of it), since it will be bolded when the ad is shown to the user.

I usually use the first description line to describe the features or benefits of the product or service being sold, and the second description line for a strong call to action.

Your display URL is what the user sees and can be customized to speak more to your offer, while the destination URL is the URL Google actually sends the user to (your landing page URL).

For example, if you were selling insurance in Boston and your domain was massachusettsinsurance.com, you could add /Boston to your display URL so it appears more relevant to the user (users do not see the destination URL).
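A quick pre-flight check for ad copy might look like the sketch below. The character limits are the ones AdWords used for standard text ads (25 characters for the headline and 35 each for the description lines and display URL); treat them as an assumption and confirm against Google's current documentation. The check also compares the display URL's domain with the destination URL's domain, since the two are expected to match.

```python
from urllib.parse import urlparse

# Standard text ad limits as assumed here; confirm against current AdWords docs.
LIMITS = {"headline": 25, "description1": 35, "description2": 35, "display_url": 35}


def bare_host(url: str) -> str:
    """Lower-cased host name without a leading 'www.'."""
    if "://" not in url:
        url = "http://" + url  # display URLs are usually written without a scheme
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")


def check_ad(ad: dict) -> list[str]:
    """Return a list of problems; an empty list means the ad passed these checks."""
    problems = []
    for field, limit in LIMITS.items():
        if len(ad[field]) > limit:
            problems.append(f"{field} is {len(ad[field])} characters (limit {limit})")
    if bare_host(ad["display_url"]) != bare_host(ad["destination_url"]):
        problems.append("display URL domain does not match destination URL domain")
    return problems


ad = {
    "headline": "Boston Car Insurance",
    "description1": "Local agents and fast quotes.",
    "description2": "Call today for a free quote!",
    "display_url": "massachusettsinsurance.com/Boston",
    "destination_url": "https://www.massachusettsinsurance.com/boston-car-insurance",
}
print(check_ad(ad))  # []
```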

Step 7: Install Analytics

AdWords offers PPC-specific reports, but installing an analytics package (if you don't already have one) is a must if you want to track your campaigns in depth. You'll want to track conversions, which typically involve a multi-step process (even if you are just collecting emails), and an analytics package can really take your data analysis to the next level.

You can use the AdWords Conversion Tracker for some conversion metrics but a full-featured analytics package gives you more options and data points to utilize. Some of the more popular and affordable analytics packages are:

AdWords offers built-in integration with Google Analytics, so for simplicity you might want to give that a shot upfront. Even though it's free, Google Analytics is a fully featured analytics provider suitable for large and small sites.
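With AdWords linked to Google Analytics and auto-tagging enabled, click data flows into Analytics without extra work. If you use a different analytics package, or want manual control, you can append Google Analytics campaign parameters (`utm_source`, `utm_medium`, `utm_campaign`, `utm_term`) to your destination URLs instead. A sketch using only Python's standard library; the campaign name and URL are placeholders.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_landing_url(url: str, campaign: str, keyword: str,
                    source: str = "google", medium: str = "cpc") -> str:
    """Append Google Analytics campaign parameters, keeping any existing query string."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [
        ("utm_source", source),
        ("utm_medium", medium),
        ("utm_campaign", campaign),
        ("utm_term", keyword),
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


print(tag_landing_url("https://www.example.com/boston", "boston-auto", "boston car insurance"))
# https://www.example.com/boston?utm_source=google&utm_medium=cpc&utm_campaign=boston-auto&utm_term=boston+car+insurance
```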

Step 8: Test, Tweak, and Adjust

Local campaigns can sometimes take a bit of time to return an appropriate amount of data needed to analyze and adjust to trends in your account. So, be careful not to make wholesale changes to a campaign off of a small amount of data. Try to be consistent for a bit and see what the data tells you over time.

Some tips on account maintenance would be:

- If you get low relevancy messages or "ads not showing" messages on a keyword, isolate it in its own ad group with a super-targeted ad and landing page

- Never let your credit card expire :) You can also prepay for AdWords if you'd prefer

- Try different ad text copies from time to time, pointing out different benefits and using different calls to action

- Try different elements on your landing page (maybe a click-thru button instead of an opt-in form, maybe a brief video, etc.)

- When adding new keywords keep the same tight ad group structure that you started out with

- Use the Search Terms report to find exact keywords that triggered an ad click on your broad or phrase matched keywords

- Use the keywords found in your Search Terms report as additions to your current PPC program and SEO planning, rinse and repeat
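Mining the Search Terms report for new keywords is easy to automate once you export it. This sketch assumes a CSV export with `Search term` and `Clicks` columns (actual column names vary by export, so adjust them) and returns terms you are not yet bidding on, most clicks first. The sample rows are illustrative, not real data.

```python
import csv
import io


def new_search_terms(report_csv: str, existing_keywords, min_clicks: int = 1):
    """Search terms not already bid on, as (term, clicks) pairs with the most clicks first.

    Assumes the export has 'Search term' and 'Clicks' columns; rename to match yours.
    """
    # Strip match-type punctuation so [exact] and "phrase" keywords compare as plain text.
    existing = {kw.strip().strip('[]"').lower() for kw in existing_keywords}
    totals = {}
    for row in csv.DictReader(io.StringIO(report_csv)):
        term = row["Search term"].strip().lower()
        if term not in existing:
            totals[term] = totals.get(term, 0) + int(row["Clicks"])
    found = [(term, clicks) for term, clicks in totals.items() if clicks >= min_clicks]
    return sorted(found, key=lambda item: (-item[1], item[0]))


report = """Search term,Clicks
mystic car insurance,4
cheap car insurance mystic ct,3
mystic ct auto insurance quote,1
"""
print(new_search_terms(report, ["[mystic car insurance]", '"mystic auto insurance"']))
# [('cheap car insurance mystic ct', 3), ('mystic ct auto insurance quote', 1)]
```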

The more targeted your keywords (and landing pages) are, the fewer clicks you should need before your data gives you meaningful feedback. Over time, pay attention to your conversion rates as they stabilize and judge new results against them. In other words, if you typically convert at 30% and suddenly go 0 for 100 on your next 100 clicks, something might be up, whereas if you convert at 5%, you'll need more clicks before the data tells you anything.
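To put numbers on that intuition: if each click converts independently at your usual rate p, the chance of n clicks in a row with no conversions is (1 - p)^n. A small sketch; the independence assumption is a simplification, and 5% is an arbitrary cut-off for "suspicious".

```python
import math


def chance_of_zero_conversions(rate: float, clicks: int) -> float:
    """Probability that `clicks` independent clicks at conversion `rate` all fail to convert."""
    return (1 - rate) ** clicks


def dry_spell_threshold(rate: float, cutoff: float = 0.05) -> int:
    """Fewest conversion-free clicks in a row whose probability drops below `cutoff`."""
    return math.ceil(math.log(cutoff) / math.log(1 - rate))


for rate in (0.30, 0.05):
    print(f"{rate:.0%} rate: P(0 for 20) = {chance_of_zero_conversions(rate, 20):.4f}; "
          f"suspicious after {dry_spell_threshold(rate)} clicks")
# 30% rate: P(0 for 20) = 0.0008; suspicious after 9 clicks
# 5% rate: P(0 for 20) = 0.3585; suspicious after 59 clicks
```

Going 0 for 20 is alarming at a 30% rate but perfectly ordinary at 5%, which is why lower-converting keywords need more clicks before you judge them.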

I'd peg that number somewhere in the hundreds of clicks when first setting up the account (assuming the keyword and landing page are super targeted). On a really targeted local campaign, I'd like to see a couple hundred clicks or so before making any decisions on a particular keyword. Since local keywords usually involve more trial and error upfront, pay close attention to the aforementioned Search Terms report to find those really targeted keywords.

A local campaign is generally smaller in nature so it's a bit easier to have really tight ad group structuring (one or a few keywords per ad group). This makes it a bit easier on the local business owner because upfront set up is quicker and maintenance and tracking are both a bit more streamlined.

A local business that combines SEO and PPC can really clean up in the SERPs. To help you get started, you can pick up a $75 AdWords credit below, and if you are an agency you should check out the Engage program, which gives you a generous number of coupons for your clients.

Free Google AdWords Coupons

Google is advertising a free $75 coupon for new AdWords advertisers, and offers SEM firms up to $2,000 in free AdWords credits via their Engage program.