SEO Spyglass is one of the 4 tools Link-Assistant sells, both individually and as part of their SEO Power Suite.

We did a review of their Rank Tracker application a few months ago and we plan to review their other 2 tools in upcoming blog posts.

Update: Please note that even though we wrote free, non-affiliate reviews of their software, someone spammed the crap out of our blog promoting this company's tools, which is uninspiring at best.

Key Features of SEO Spyglass

The core features of SEO Spyglass are:

- Link Research

- White Label Reporting

- Historical Link Tracking

As with most software tools, there are features you can and cannot access, or limits you'll hit, depending on the version you choose. You can see the comparison here.

Perhaps the biggest feature is their newest one. They recently launched their own link database in beta, a couple of months early, because the tool had been largely dependent on the now-dead Yahoo! Site Explorer.

The launch of a third or fourth-ish link database (Majestic SEO, Open Site Explorer, and Ahrefs round out the others) is a win for link researchers. It still needs a bit of work, as we'll discuss below, but hopefully they plan on taking some of the better features of the other tools and incorporating them into their own.

After all, good artists copy and great artists steal :)

Setting Up a Project for a Specific Keyword

One of my pet peeves with software is feature bloat, which in turn creates a rough user experience. In my experience, Link-Assistant's tools are incredibly easy to use.

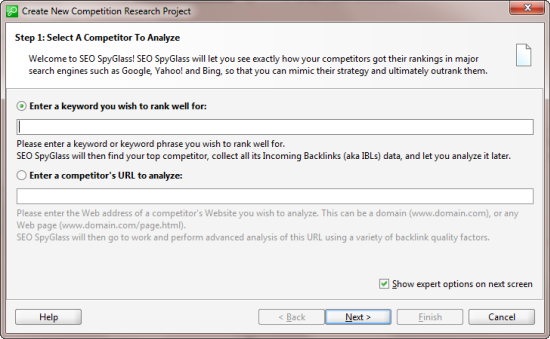

Once you fire up SEO Spyglass you can choose to research links from a competing website or links based on a keyword.

Most of the time I use the competitor's URL when doing link research, but SEO Spyglass doubles as a link prospecting tool as well, so here I'll pick a keyword I might want to target: "SEO Training".

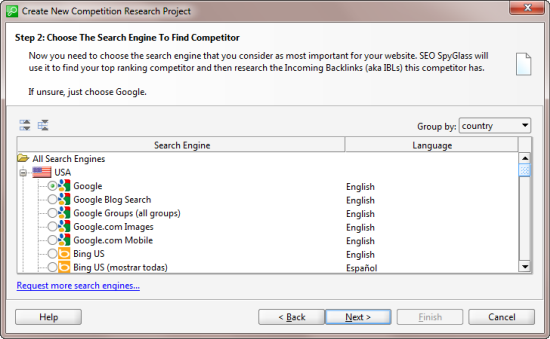

The next screen is where you'll choose the search engine that is most relevant to where you want to compete. They support a bunch of different countries and search engines, and you can see the breakdown on their site.

So if you are competing in the US, you can pull data on the top-ranking site from the following engines (only one at a time):

- Google

- Google Blog Search

- Google Groups

- Google Images

- Google Mobile

- YouTube

- Bing

- Yahoo! (similar to Bing of course)

- AOL

- Alexa

- Blekko

- And some other smaller web properties

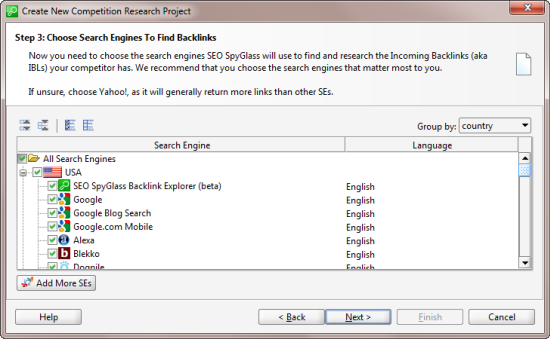

I'll select Google. The next screen is where you select the sources Spyglass should use to grab the links of the competing site it finds through the engine chosen on the preceding screen:

So SEO Spyglass grabs the top competitor from your chosen SERP and runs multiple link sources against that site (I would love to see some API integration with Majestic and Open Site Explorer here).

This is where you'll see their own Backlink Explorer for the first time.

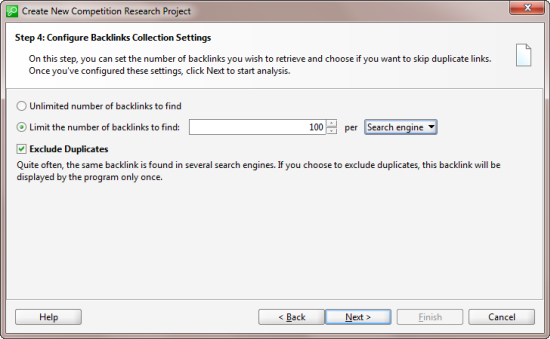

Next you can choose unlimited backlinks (Enterprise Edition only) or limit them by Project or Search Engine. For the sake of speed I'm going to limit it to 100 links per search engine (the sources we selected on a previous screen) and exclude duplicates (links found in more than one engine) to get the most accurate, usable data possible:
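Conceptually, a per-engine limit combined with duplicate exclusion works something like the sketch below. This is not how SEO Spyglass is implemented; the function name `collect_backlinks`, the `(engine, url)` input shape, the URL normalization, and the choice to count a link toward its engine's limit before checking for duplicates are all my own assumptions for illustration.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def normalize(url):
    """Naive URL key: lowercase host, drop trailing slash, keep query.
    (Hypothetical normalization, not the tool's actual rule.)"""
    parts = urlsplit(url.strip())
    return (parts.netloc.lower(), parts.path.rstrip("/") or "/", parts.query)


def collect_backlinks(results, per_engine_limit=100):
    """results: iterable of (engine, backlink_url) pairs in the order found.

    Takes at most per_engine_limit links from each engine, then drops any
    URL that was already kept from another engine (or the same one).
    """
    seen = set()
    counts = defaultdict(int)
    kept = []
    for engine, url in results:
        if counts[engine] >= per_engine_limit:
            continue  # this engine has hit its cap
        counts[engine] += 1  # assumption: duplicates still count toward the cap
        key = normalize(url)
        if key in seen:
            continue  # duplicate of a link found elsewhere
        seen.add(key)
        kept.append((engine, url))
    return kept
```

For example, a link Bing returns as `http://A.com/x/` is dropped if Google already returned `http://a.com/x`.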

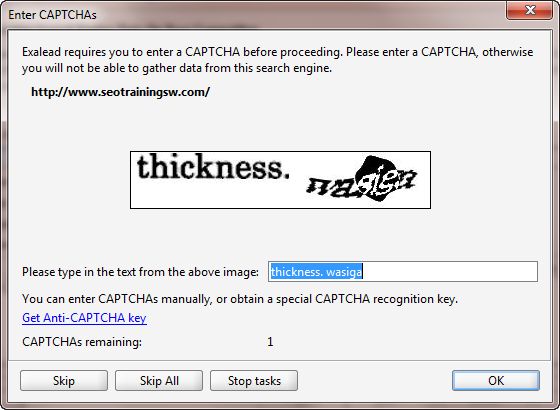

When you start pinging engines, specifically Google in this example, you will routinely get CAPTCHAs like this:

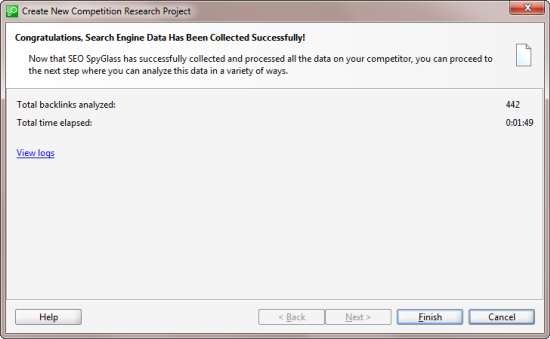

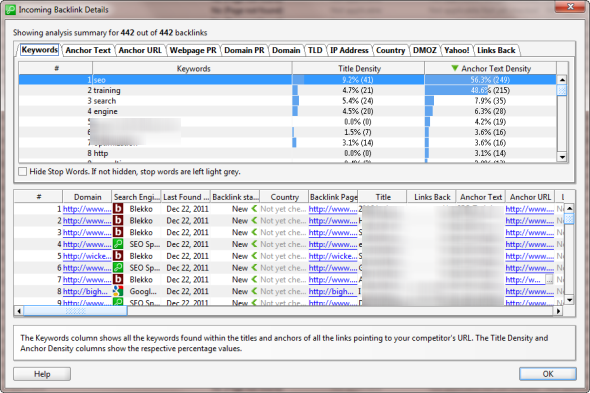

On this small project I entered about 8 of them and the project found 442 backlinks (here is what you'll see after the project is completed):

One way around CAPTCHAs is to pay someone to run the tool for you and solve them manually, but that is not ideal for large projects, as CAPTCHAs will pile up and the IP could get temporarily banned.

Link-Assistant offers an Anti-Captcha plan to combat this issue; you can see the pricing here.

Given the size of the results pane, it is hard to see everything, but initially you are shown:

- an icon showing which search engine the link was found in

- the backlinking page

- the backlinking domain

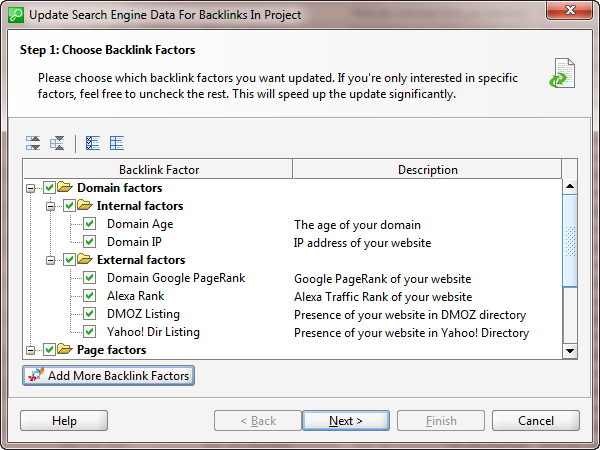

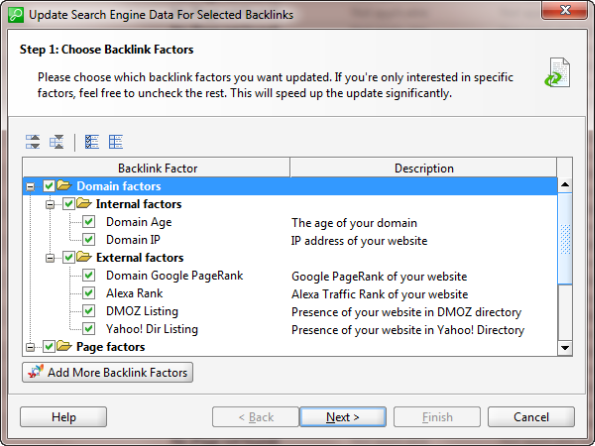

Spyglass will then ask you if you want to update the factors associated with these links.

Your options by default are:

- domain age

- domain IP

- domain PR

- Alexa Rank

- Dmoz Listing

- Yahoo! Directory Listing

- On-page info (title, meta description, meta keywords)

- Total links to the page

- External links to other sites from the page

- PageRank of the page itself

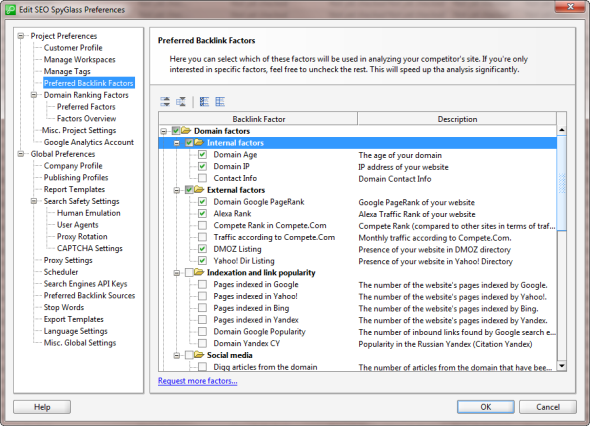

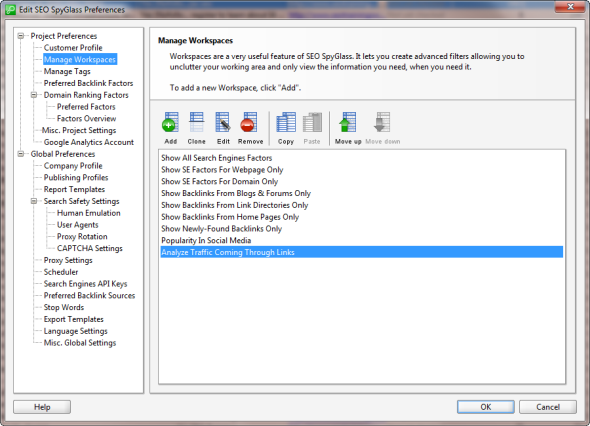

To add more factors, click the Add More button, which takes you to the Spyglass Preferences pane:

You can add a ton of social media stuff here, including popularity on Facebook, Google+, page-level Twitter mentions and so on.

You can also pick up bookmarking data and various cache dates. Keep in mind that the more you select, especially factors like cache date, the more likely you are to run into CAPTCHAs.

SEO Spyglass also offers Search Safety Settings (inside the Preferences pane, in the middle of the left column in the above screenshot) where you can update human emulation settings and proxies, both to speed up the application and to help avoid search engine bans.

I've used Trusted Proxies with Link-Assistant and they have worked quite well.

You can't control the factors globally; you have to set them for each project. You can, however, set Spyglass to offer you only specific backlink sources.

I'm going to deselect PageRank here to speed up the project (you can always update later or use other tools for PageRank scrapes).

Working With the Results

When the data comes back you can do a number of things with it. You can:

- Build a custom report

- Rebuild it if you want to add link sources or backlink factors

- Update the saved project later on

- Analyze the links within the application

- Update and add custom workspaces

These options are all available within the results screen (again, this application is incredibly easy to use):

I've blurred out the site information as I see little reason to highlight the site here. But you can see where the data has populated for the factors I selected.

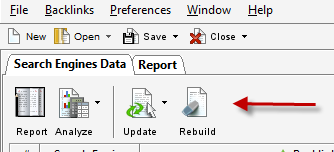

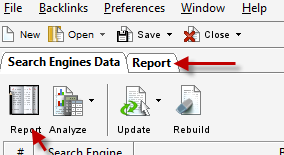

In the upper left-hand corner of the application is where you can build the report, analyze the data from within the application, update the project, or rebuild it with new factors:

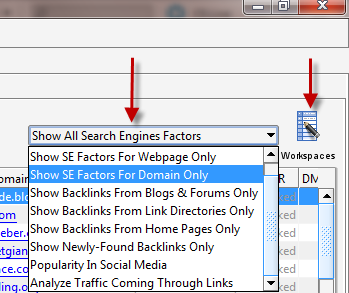

All the way to the right is where you can filter the data inside the application and create a new workspace:

Your filtering options are shown to the left of the workspaces here. It's not full-blown filtering and sorting, but if you are looking for some quick information on specific link queries, it can be helpful.

Each item listed there is a Workspace. You can create your own or edit one of the existing ones. Whatever factors you include in a Workspace are what will show in the results pane as columns.

So think of Workspaces as your filtering options. Your available metrics/columns are:

- Domain Name

- Search Engine (where the link was found)

- Last Found Date (for updates)

- Status of Backlink (active, inactive, etc.)

- Country

- Page Title

- Links Back (does the link found by the search engine actually link to the site? This is a good way of identifying short-term, spammy link bursts)

- Anchor Text

- Link Value (essentially based on the original PageRank formula)

- Notes (notes you've left on the particular link). This is very limited and is essentially a single Excel-type row

- Domain Age/IP/PR

- Alexa Rank

- Dmoz

- Yahoo! Directory Listing

- Total Links to page/domain

- External links

- Page-level PR
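For reference, here is the original PageRank formula as published by Brin and Page (1998), where $T_1 \dots T_n$ are the pages linking to page $A$, $C(T_i)$ is the number of outbound links on $T_i$, and $d$ is a damping factor they usually set to 0.85. The Link Value column is only described as "essentially based" on this formula, so exactly how Spyglass adapts it is not known; treat this as background rather than the tool's actual calculation.

```latex
PR(A) = (1 - d) + d\left(\frac{PR(T_1)}{C(T_1)} + \cdots + \frac{PR(T_n)}{C(T_n)}\right)

% Worked example (hypothetical numbers): one inbound link from T_1 with
% PR(T_1) = 4 and C(T_1) = 10 outbound links, d = 0.85
PR(A) = 0.15 + 0.85 \times \frac{4}{10} = 0.15 + 0.34 = 0.49
```

Because each linking page's score is divided among all of its outbound links, a link from a page with many outbound links contributes little under this model, which is one way a metric like this can understate links that clearly help in practice.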

Most of the data is useful. I think the Link Value metric is a bit overrated; I often found links that had 0 link value in the tool but clearly benefited the site they ended up linking to.

Bulk PageRank queries will trigger lots of CAPTCHAs, and given how out of date PR can be, it isn't a metric I typically include in large reports.

Analyzing the Data

When you click on the Analyze tab in the upper left you can analyze in multiple ways:

- All backlinks found for the project

- Only backlinks you highlight inside the application

- Only backlinks in the selected Workspace

The Analyze tab is a separate window overlaying the report:

You can't export from this window, but if you press Ctrl+A (Cmd+A on a Mac) you can copy the data and paste it into a spreadsheet.

Your options here:

- Keywords - keywords and ratios of specific keywords in the title and anchor text of backlinks

- Anchor Text - anchor text distribution of links

- Anchor URL - pages being linked to on the site and the percentages of link distribution (good for evaluating deep link distribution and pages targeted by the competing site as well as popular pages on the site...content ideas :) )

- Webpage PR

- Domain PR

- Domains linking to the competing site and the percentage

- TLD - percentage of links coming from .com, .net, .org, .info, .uk, and so on

- IP address - links coming from specific IPs and the percentages

- Country breakdown

- Dmoz - backlinks that are in Dmoz and ones that are not

- Yahoo! - same as Dmoz

- Links Back - percentages of links found that actually link to the site in question
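If you copy the Analyze data out to a spreadsheet, you can reproduce a couple of these breakdowns yourself. Below is a minimal sketch that computes anchor text and TLD distributions from tab-separated text. It assumes the pasted data has a header row with columns named `Backlink Page` and `Anchor Text`; those column names and the helper functions are hypothetical, so adjust them to whatever your copy actually contains.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlsplit


def distribution(values):
    """Return (value, percent) pairs, most frequent first, percent to 1 dp."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(v, round(100 * n / total, 1)) for v, n in counts.most_common()]


def tld(url):
    """Naive TLD: the last dot-separated label of the host (c.co.uk -> uk)."""
    host = urlsplit(url).hostname or ""
    return host.rsplit(".", 1)[-1]


def analyze(tsv_text):
    """tsv_text: pasted tab-separated rows with an assumed header row."""
    rows = list(csv.DictReader(io.StringIO(tsv_text), delimiter="\t"))
    # Case-fold anchor text so "SEO Training" and "seo training" group together.
    anchors = distribution(r["Anchor Text"].strip().lower() for r in rows)
    tlds = distribution(tld(r["Backlink Page"]) for r in rows)
    return anchors, tlds
```

Case-folding anchor text is a judgment call; drop the `.lower()` if you want exact-match variants counted separately.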

Updating and Rebuilding

Updating is pretty self-explanatory. Click the Update tab and select whether or not to update all the links, the selected links, or the Workspace specific links:

(It's the same dialog box as when you actually set up the project)

Rebuilding the report is similar to updating except updating doesn't allow you to change the specified search engine.

When you Rebuild the report you can select a new search engine. This is helpful when comparing what is ranking in Google versus Bing.

Click Rebuild and update the search engine plus add/remove backlink factors.

Reporting

There are 2 ways to get to the reporting data inside Spyglass: the quick SEO Report tab and the Custom Report Builder.

Much like the Workspaces in the prior example, there are reporting template options on the right side of the navigation:

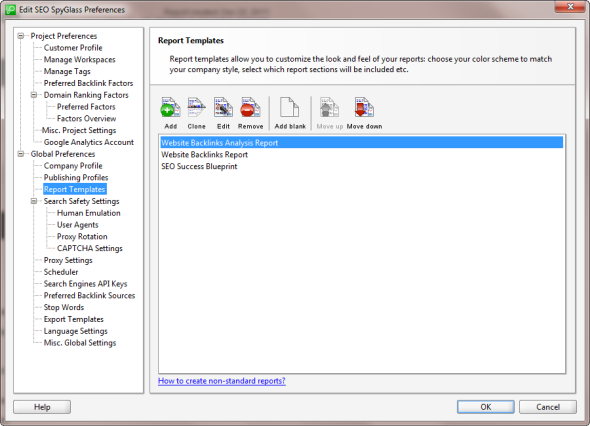

It functions the same way as Workspaces do in terms of being able to completely customize the report and data. You can access your Company Profile (your company's information and logo), Publishing Profiles (delivery methods like email, FTP, and so on), as well as Report Templates in the settings option:

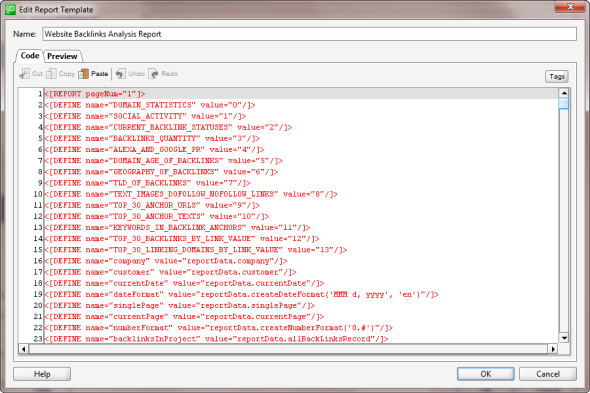

You can't edit the existing templates except by playing around with the code used to generate the report. It's kind of an arcane way to do reporting, as you can really hose up the code (below the variables in red is all the HTML):

You can create your own template with the following reporting options:

- Custom introduction

- All the stats described earlier in this review as available backlink factors

- Top 30 anchor URLs

- Top 30 anchor texts

- Top 30 links by "link value"

- Top 30 domains by "link value"

- Conclusion (where you can add your own text and images)

Overall the reporting options are solid and offer lots of data. It's a little more work to customize the reports but you do have lots of granular customization options and once they are set up you can save them as global preferences.

As with other software tools you can set up scheduled checks and report generation.

Researching a URL

The process for researching a URL is the same as described above, except you already know the URL rather than having SEO Spyglass find the top competing site for it.

You have the same deep reporting and data options as you do with a keyword search. It will be interesting to watch how their database grows because, for now, you can (with the Enterprise version) research an unlimited number of backlinks.

SEO Spyglass in Practice

Overall, I would recommend trying this tool out. If nothing else, it is another source of backlinks, and it pulls from other search engines as well (Google, Blekko, Bing, etc.).

The reporting is good and you have a lot of options with respect to customizing specific link data parameters for your reports.

I would like to see more exclusion options when researching a domain, like the ability to filter out redirects and subdomain links. A quick competitive report doesn't do much good if a quarter or more of it comes from something like a subdomain of the site you are researching.
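Until a subdomain filter exists, you can clean up an exported list yourself. Here is a minimal sketch, assuming you have a plain list of backlink URLs and know the domain you are researching; the function names are hypothetical and not part of SEO Spyglass. It only handles subdomains, not redirects, since detecting redirects would require fetching each URL.

```python
from urllib.parse import urlsplit


def is_same_site(backlink_url, target_domain):
    """True if the backlink's host is target_domain or any subdomain of it."""
    host = urlsplit(backlink_url).hostname or ""  # hostname is already lowercased
    target = target_domain.lower().lstrip(".")
    # Require a dot boundary so "notexample.com" isn't treated as "example.com".
    return host == target or host.endswith("." + target)


def external_only(backlinks, target_domain):
    """Keep only backlinks hosted outside the researched domain."""
    return [u for u in backlinks if not is_same_site(u, target_domain)]
```

Running `external_only` with `"example.com"` would drop links from `blog.example.com` and `www.example.com` but keep `notexample.com`.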

SEO Spyglass's pricing is as follows:

- Purchase a professional option or an enterprise option (comparison)

- 6 months of their Live Plan for free

- Purchase of a Live Plan required after 6 months to continue using the tool's link research functionality.

- Pricing for all editions and Live Plans can be found here

In running a couple of comparisons against Open Site Explorer and Majestic SEO, it was clear that Spyglass has a decent database but needs more filtering options (subdomains mainly). It's not as robust as OSE or Majestic yet, but that's to be expected. I still found a variety of unique links in its database that I did not see in any of the other tools.

You can get a pretty big discount if you purchase their suite of tools as a bundle rather than individually.