Comparing Backlink Data Providers

Since Ayima launched in 2007, we've been crawling the web and building our own independent backlink data. Starting off with just a few servers running in our Director of Technology's bedroom cupboard, we now have over 130 high-spec servers hosted across 2 in-house server rooms and 1 datacenter, using a storage platform similar to that of Yahoo's former index.

Crawling the entire web still isn't easy (or cheap) though, which is why very few data providers exist even today. Each provider makes compromises (even Google does in some ways) in order to keep their data as accurate and useful as possible for their users. The compromises differ between providers though: some go for sheer index size, whilst others aim for freshness and accuracy. Which is best for you?

This article explores the differences between SEOmoz's Mozscape, MajesticSEO's Fresh Index, Ahrefs' link data and our own humble index. This analysis has been attempted before at Stone Temple and SEOGadget, but our Tech Team has used Ayima's crawling technology to validate the data even further.

First of all, we need a website to analyze, something that we can't accidentally "out". Search Engine Land is the first that came to mind, as it's very unlikely to have many spam links or much paid link activity.

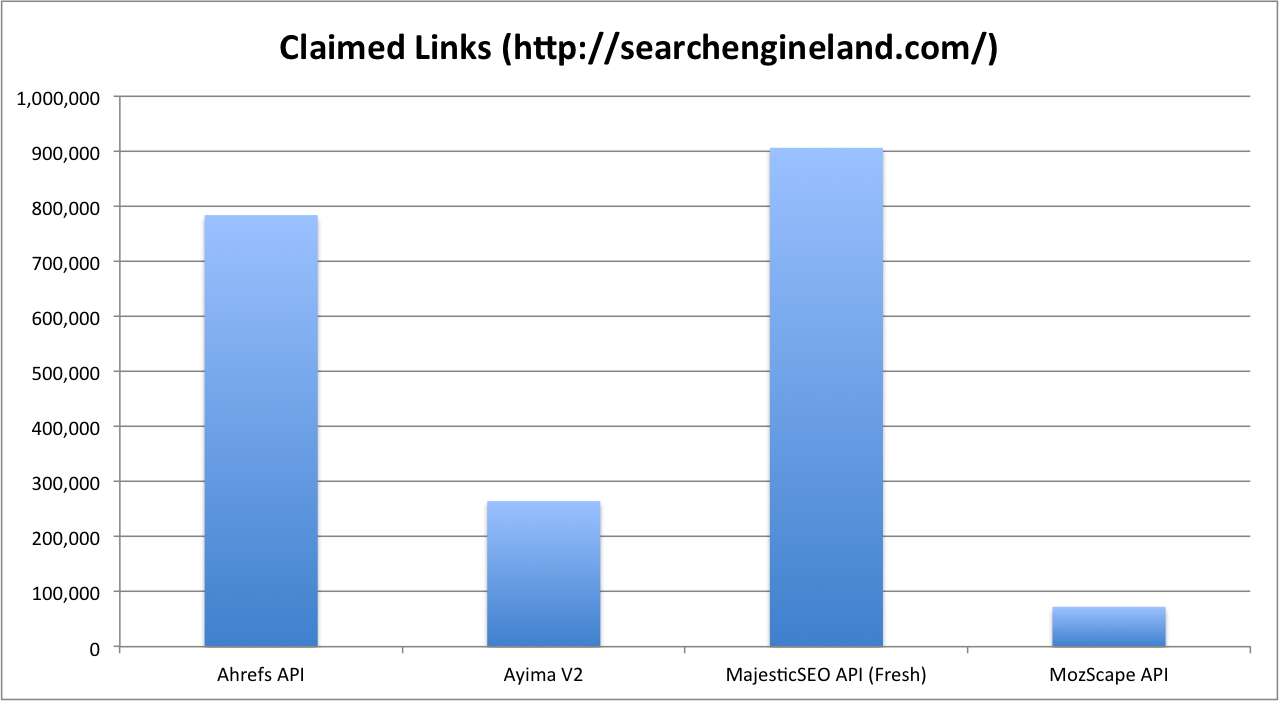

So let's start off with the easy bit - who has the biggest result set for SEL?

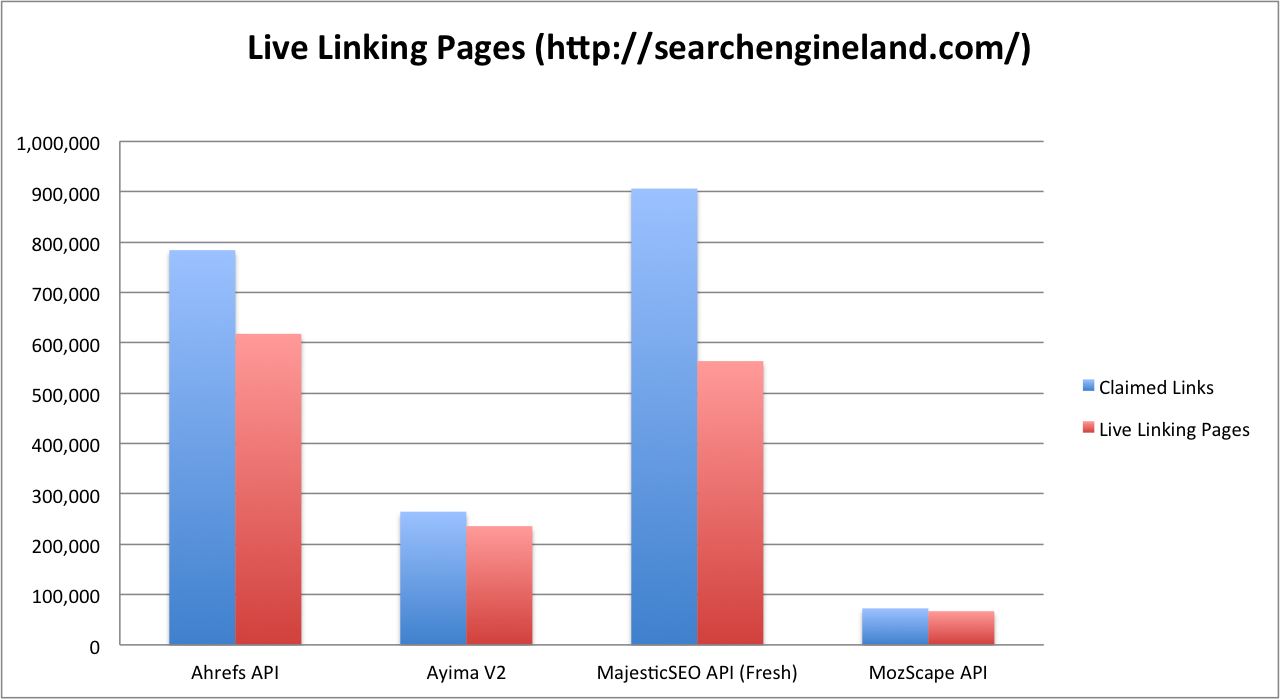

The chart above shows MajesticSEO as the clear winner, followed by a very respectable result for Ahrefs. Does size matter though? Certainly not at this stage, as we only really care about links which actually exist. The SEOGadget post tried to clean the results using a basic desktop crawler, to see which results returned a "200" (OK) HTTP Status Code. Here's what we get back after checking for live linking pages:

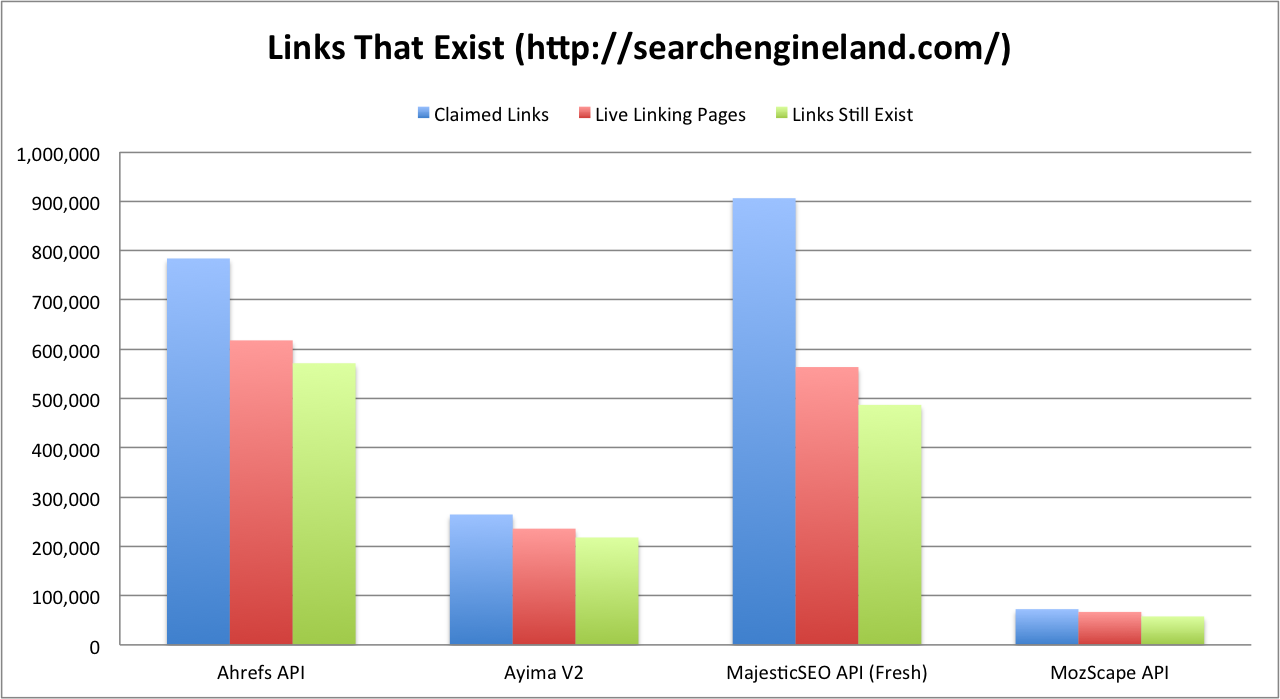

Ouch! So MajesticSEO's "Fresh" index has the distinct smell of decay, whilst Mozscape and Ayima V2 show the freshest data (by percentage). Ahrefs has a sizeable decay like MajesticSEO, but still shows the most links overall in terms of live linking pages. The problem with stopping at this level is that a link is much more likely to disappear from a page than the page itself is to disappear. Think about short-term event sponsors, 404 pages that return a 200, blog posts falling off the homepage, spam comments being moderated etc. So our "Tenacious Tim" got his crawler out, to check which links actually exist on the live pages:

Less decay this time, but at least we're now dealing with accurate data. We can also see that Ayima V2 has a live link accuracy of 82.37%, Mozscape comes in at 79.61%, Ahrefs at 72.88% and MajesticSEO is just 53.73% accurate. From Ayima's post-crawl analysis, our techies concluded that MajesticSEO's crawler was counting URLs (references) and not actual HTML links in a page. So simply mentioning http://www.example.com/ somewhere on a web page was counting as an actual link. Their results also included URL references in JavaScript files, which won't offer any SEO value. That doesn't mean that MajesticSEO is completely useless though; I'd personally use it more for "mention" detection outside of the social sphere. You can then find potential link targets who mention you somewhere, but do not properly link to your site.
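To make the link versus "mention" distinction concrete, here is a minimal sketch using only Python's standard library. It is not the crawler used for this study; the class name `LinkOrMention` and the function `classify` are made up for illustration, and the sketch assumes that anything inside `<script>` or `<style>` should be ignored.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkOrMention(HTMLParser):
    """Separate real <a href> links to a host from plain-text mentions of it."""

    def __init__(self, target_host):
        super().__init__()
        self.target_host = target_host.lower()
        self.links = []      # href values of <a> tags pointing at the target
        self.mentions = 0    # visible text nodes that contain the host name
        self._ignored = 0    # depth inside <script>/<style> (assumed worthless)

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._ignored += 1
        elif tag == "a":
            href = dict(attrs).get("href") or ""
            try:
                host = (urlparse(href).hostname or "").lower()
            except ValueError:  # malformed href, treat as no host
                host = ""
            if host == self.target_host or host.endswith("." + self.target_host):
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._ignored:
            self._ignored -= 1

    def handle_data(self, data):
        if not self._ignored and self.target_host in data.lower():
            self.mentions += 1


def classify(html, target_host):
    """Return 'link', 'mention' or 'absent' for one fetched page."""
    parser = LinkOrMention(target_host)
    parser.feed(html)
    parser.close()
    if parser.links:
        return "link"
    return "mention" if parser.mentions else "absent"
```

A page that only contains the text `http://www.example.com/` comes back as a "mention", while a page with `<a href="http://www.example.com/">` comes back as a "link". A URL that appears only inside a script block is reported as "absent" under the assumption above.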

Ahrefs wins the live links contest, finding 84,496 more live links than MajesticSEO and 513,733 more live links than SEOmoz's Mozscape! I still wouldn't use Ahrefs for comparing competitors or estimating the link authority needed to compete in a sector though. Not all links are created equal, with Ahrefs showing both the rank-improving links and the crappy spam. I would definitely use Ahrefs as my main data source for "Link Cleanup" tasks, giving me a good balance of accuracy and crawl depth. Mozscape and Ayima V2 filter out the bad pages and unnecessarily deep sites by design, in order to improve their data accuracy and show the links that count. But when you need to know where the bad PageRank zero/null links are, Ahrefs wins the game.

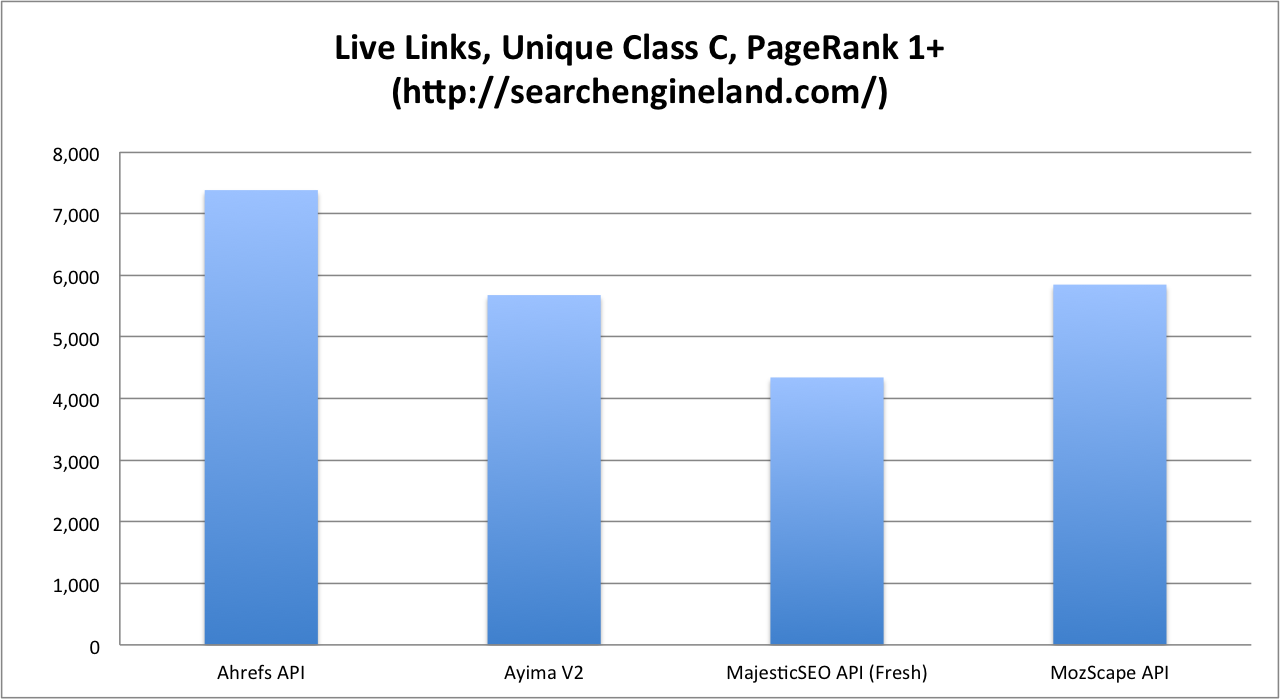

So we've covered the best data for "mentions" and the best data for "link cleanup"; now how about the best for competitor comparison and market analysis? The chart below shows an even more granular filter, removing dead links, filtering by unique Class C IP blocks and removing anything below a PageRank 1. By using Google's PageRank data, we can filter out the links from pages that hold no value or that have been penalized in the past. Whilst some link data providers do offer their own alternative to PageRank scores (most likely based on the original Google patent), these cannot tell whether Google has hit a site for selling links or for other naughty tactics.

Whilst Ahrefs and MajesticSEO hit the top spots, the amount of processing power needed to clean their data to the point of being useful makes them untenable for most people. I would therefore personally only use Ayima V2 or Mozscape for comparing websites and analyzing market potential. Ayima V2 isn't available to the public quite yet, so let's give this win to Mozscape.
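For readers who want to try this kind of filter on their own exports, the idea can be sketched roughly as follows. The record fields (`ip`, `pagerank`, `live`) and the function names are assumptions for illustration, not Ayima's actual pipeline, and "Class C block" is treated here as the /24 network of an IPv4 address.

```python
import ipaddress


def class_c_block(ip):
    """Return the /24 network ("Class C block") an IPv4 address belongs to."""
    return ipaddress.ip_network(f"{ip}/24", strict=False)


def filter_links(links, min_pagerank=1):
    """Keep live links at or above min_pagerank, at most one per Class C block."""
    seen_blocks = set()
    kept = []
    for link in links:
        pagerank = link["pagerank"]
        # Drop dead links and pages with a null or too-low PageRank.
        if not link["live"] or pagerank is None or pagerank < min_pagerank:
            continue
        block = class_c_block(link["ip"])
        if block in seen_blocks:
            continue  # another link from this block has already been kept
        seen_blocks.add(block)
        kept.append(link)
    return kept
```

Which link "represents" a block is simply the first one encountered here; a real report would probably sort by authority first.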

So in summary:

- Ahrefs - Use for link cleanup

- MajesticSEO - Use for mentions monitoring

- Mozscape - Use for accurate competitor/market analysis

Juicy Data Giveaway

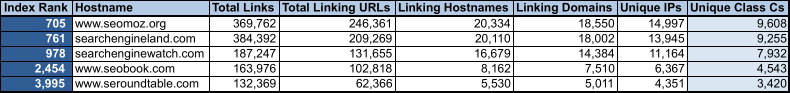

One of the best parts of having your own index is being able to create cool custom reports. For example, here's how the big SEO websites compare against each other:

"Index Rank" is a ranking based on who has the most value-passing Unique Class C IP links across our entire index. The league table is quite similar to HitWise's list of the top traffic websites, but we're looking at the top link authorities.

Want to do something cool with the data? Here's an Excel spreadsheet with the Top 10,000 websites in our index, sorted by authority: Top 10,000 Authority Websites.

Rob Kerry is the co-founder of Ayima, a global SEO Consultancy started in 2007 by the former in-house team of an online gaming company. Ayima now employs over 100 people on 3 continents and Rob has recently founded the new Ayima Labs division as Director of R&D.

Comments

linkresearchtools is my fav now as far as just links go

I didn't think that they crawled the web themselves, so didn't include them.

...as they are some combination of data from multiple providers & the data would have had more variables to it (rather than just seeing where 1 is in their cycle it would be a mix of a number of them). I also believe their data licensing has some depth limits when compared against the original sources (or at least whenever we have licensed other such SEO data there were depth limits associated with it).

Few remarks:

1. I wouldn't judge any tool based on the results from one site. Even though SEL is an important site, it is still only one. I have done a similar analysis for several websites and the winners and losers varied, with Majestic and Ahrefs being clear winners for the majority of sites, even after accounting for the link decay. Not saying your analysis doesn't say otherwise, just that it will probably change from site to site and niche to niche (even older pages vs. newer pages on the same site). Here is the analysis I did: http://www.rankabove.com/news/seo-research/backlink-data-provider-all/

2. MajesticSEO has a built-in option to exclude the mentions, in their Report Analysis options. They also allow you to exclude the deleted links. With those two options used, I have found the level of link decay in their Fresh Index to be the absolute lowest. On the other hand, the Historic Index decay levels are enormous. Not sure how you would exclude them via the API but it is not a bug, it is a feature :)

3. Not sure about the cutoff point of PR 1, especially when it comes to fresh links, which news sites like SEL have plenty of. And also the regular mantra of TBPR being delayed crap and everything. Add to that a lot of links that can come from crap sites and hurt sites with a low backlink profile, while helping sites with good profiles, and you get a complicated picture which makes a clear PR cutoff point impossible.

It is quite an undertaking to build your own crawler, I can just imagine all the juicy insights you get from it.

1. As you can imagine, we've done this analysis extensively across many markets and countries; this is one such study.

2. I'm not sure if that option is available in the API, we made the comparison as fair and balanced as possible.

3. It's whether those fresh links attract authority later on though, but I agree that fresh links/pages are important.

Hi Rob,

It's very kind of you to say that Majestic SEO is not completely useless (thanks for that!), but if you did not want to see "mentions" in the data that we return then you'd just filter them out. By default our reports/top backlink command does that and our API reference gives all details necessary to do that. I am sure that "Tenacious Tim" is fully capable of using API parameters to return custom data that is required... and if not then our support is always happy to assist with such queries.

It seems to me that you *may* have made a pretty serious mistake in your validation crawl of data to check if links are still live. Your post says that "the SEOGadget post tried to clean the results using a basic desktop crawler, to see which results returned a "200" (OK) HTTP Status Code"; is that what you actually did? Well, if so then it seems rather odd that you would ignore valid HTTP redirect 3xx responses - our system might have more of them than others because it removes trailing /'s to reduce duplication of data, that does not mean the link isn't there because redirects will lead directly to it.

So, if you've *only* used HTTP 200 to determine validity of link then in my view you were wrong to discount 301/302 redirects and this can greatly affect the ratio of links that our system reports as live.

Since you've only published information regarding one domain (searchengineland.com), which is highly likely to attract a lot of links from blogs that would typically have trailing / (slash) that we remove (hence more redirects than usual when recrawling our data), then it seems rather important to know exact crawl details that you had as it might dramatically affect results of your test.

When talking about freshness as the main factor I think it's only fair to ask you to disclose crawl dates in your own index before the comparison was made. This is important because if your index was just created with fresh crawl then it won't be a fair comparison - you have not disclosed how regularly you plan to update your index and if it's just one crawl per month then your comparison is very much artificial.

You are probably aware that our system allows retrieval of backlinks based on date when they were crawled - typically highest ranked data is recrawled more frequently so it's trivial to focus only on recently checked links in our system if the user wishes to do so. If your main objective was to get links that were recently checked by our crawlers then you'd do just that - our API supports date ranges to suit this sort of queries.

I am looking forward to the day when you launch your own index publicly and I can promise you that in the event of us making public results of our internal testing we'll publish all relevant details to allow 3rd party verification of our findings.

cheers,

Alex

P.S. We will recrawl all backlinks for that domain in the next few days to check if links are still valid - I'll post results here.

Thanks for your thoughts Alex. With regards to links 301'ing, these naturally wouldn't get picked up by checking for 200 responses, but would when checking for the link's existence (our crawler follows 301's).
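The difference between a strict "200 only" check and a check that follows redirects can be sketched like this. It is only an illustration of the point being argued in this thread, not either company's crawler: `resolve` is a made-up helper, and `fetch` is a caller-supplied function (an assumption, so the sketch runs without network access) that returns a status code and an optional `Location` value.

```python
from urllib.parse import urljoin


def resolve(url, fetch, max_hops=5):
    """Follow 301/302 redirects and return (final_url, final_status).

    `fetch(url)` must return a (status, location) tuple. If the chain is
    longer than `max_hops`, the status is returned as None.
    """
    for _ in range(max_hops):
        status, location = fetch(url)
        if status in (301, 302) and location:
            # Location may be relative, so resolve it against the current URL.
            url = urljoin(url, location)
            continue
        return url, status
    return url, None


# A stored URL with its trailing slash removed, which the server redirects.
fake_web = {
    "http://blog.example/post": (301, "/post/"),
    "http://blog.example/post/": (200, None),
}


def fake_fetch(url):
    return fake_web.get(url, (404, None))


strict_live = fake_fetch("http://blog.example/post")[0] == 200   # False
final_url, final_status = resolve("http://blog.example/post", fake_fetch)
```

Under the strict check the stored URL looks dead, whereas following the redirect lands on `http://blog.example/post/` with a 200, at which point the page can be parsed for the link itself.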

Good post Rob. We've been playing with Searchmetrics link data lately, any thoughts on the quality of this?

Hi fusionunlimited, I wasn't aware that Search Metrics had their own index of the web, but could be wrong. There are of course many companies who resell the data of other providers, or take multiple data feeds and merge them into one.

I've been using many SEO tools. I want to try Searchmetrics and see if this will work with my site aerials-castleford.co.uk

edit: screw off for that link drop shitbag. your account has been banned.

So...Majestic-SEO found something like 6 times as many live, still existing links as MozScape...but because it found 12 times as many total links, it's less accurate?

Clearly Majestic needs to tighten that up (i.e. if half the links are no good, that's a problem), but I think looking at the "accuracy" measurement (live, still existing links divided by all links found, I think you mean, right?), while certainly helpful for understanding how one should work with data from these services, is not helpful at all in comparing *between* services.

A chart comparing all the stats would be more useful I think.

If you have the technical capability to clean the MajesticSEO data and find those links that really still exist, you may of course prefer to use their data. This comparison assumes that people will do a quick search using the basic tools/settings of each service, with little technical resource/ability to validate.

Rob, our users can just select date range for the data they want to get only recently checked backlinks - it's as simple as that. It would be better if you could focus on what your upcoming unreleased product *can* do rather than make false claims about our product that has been peer reviewed many times already.

Recrawling data in range of ~1 mln links requires pretty good technical capability which we happen to have - yesterday we recrawled ALL unique backlinks pointing to searchengineland.com and we have received totally different results compared to your test. Below you can find step by step explanation with links to actual data files that can be verified:

Step 1: Search for searchengineland.com in Site Explorer

Step 2: Create Advanced Report for searchengineland.com in fresh index using our system, download all data using default settings to exclude known deleted links, mentions but allowing links marked as nofollow (we've created download file with data here: http://tinyurl.com/majesticseotestfile1 - 25 MB compressed )

Step 3. Extract unique backlinks from that big file (download: http://tinyurl.com/majesticseotestfile2 ) - 824355 unique backlinks were chosen for recrawl, note that this number is fairly close to the one shown in comparison chart, but a bit less - most likely because links marked by our system as deleted were chosen by you for the test of ... live links?!?! I guess it was an innocent mistake on your part.

Step 4. This is where one needs to recrawl nearly 1 mln urls, parse HTML correctly, build backlinks index to extract data again in order to check how many links still present - competent level of TECHNICAL EXPERTISE REQUIRED! :)

Step 5: We used our newly built index with this data to create same CSV file as in step 1 (download file here: http://tinyurl.com/majesticseotestfile3 )

Step 6: count number of unique ref pages in the file above (download results here - http://tinyurl.com/majesticseotestfile4 ) - 753920 unique ref pages in the file.

Final ratio of unique LIVE links still present - 753920 / 824355 = 91.5%!

Wow, we've just beaten unreleased Ayima V2 and we did not even need to do anything for it, just recrawl the data using our well established crawler that can follow redirects, if only all challenges were as easy as this :)

By the way number of ref pages actually live (but without links to searchengineland.com) is higher than 91.5%, but this number is pretty good for our fresh index that keeps track of 2 months worth of data? Higher ranked pages are recrawled more often, low ranked links are often more likely to disappear but that's not big loss.

----------------

My conclusion - your test of Majestic SEO data is FATALLY FLAWED and our data is actually much better quality than you claim.

You now have 2 options in my view:

1) check what I posted and admit you were wrong, update blog post with charts and withdraw your allegations, specifically but not limited to ""Fresh" index has the distinct smell of decay".

2) insist that you are right and release FULL details of your test including data you've crawled for full verification to find out what exactly you did wrong (based on our crawl stats and your own words I think you just did not follow 301/302s and most likely included backlinks clearly marked as deleted in our system).

I realise that neither of those choices are particularly great for you, but doing neither is likely to be even worse choice.

Alex

Option #3:

Take into account everything Majestic has explained about their index, and do test over on a completely different domain that hasn't been recrawled recently and post results with data.

Alex, in Majestic dashboard the latest number for SEL is over 1.9 million links. Showing 295,816 as "deleted". What other links were subtracted from the latest recrawl to get down to the 824,355 links?

It states 283K are image links. Were those included or excluded? And what are "mentions"? Is that when the url is just in the text of the page but not actually hyperlinked?

Just curious which links were excluded to get the number of links reported reduced by over half.

All this has my simple mind confused.

If there are 824,355 unique links, why is it showing 1.9 million in the dashboard?

This is very important to understand because I would think the vast majority of users of your service (and ahrefs and OSE) are going to just perform basic research at first using the numbers reported in the main dashboard.

I hope you can see how this might look from my perspective. 1.9 million links reported. And after your recrawl and verification there are 753,920 live links????

That does not sound good....I must have reread your post a dozen times to see what I'm obviously missing...it's late and I've been in the car all day with my 3 and 1 year olds. I promised myself I would just check my email before hitting the sack and now find myself sucked into this discussion :-)

Hi Jeremy,

Testing other domains is fine, but I think we need to get to the bottom of the test methodology that was used in the first place since the difference in results is much higher than a few percentages.

1.9 mln you mention is a *sum* of links pointing to all URLs on a given root domain (this number includes known deleted links), actual number of *rows* of data can be higher because often same page links with different anchor text or link type (say text + image), you can see those lines in the first data file that was released - it excludes links marked as deleted in our system (this is default setting for reports).

The number of *unique referring pages* can therefore be smaller than total number of links (it was 824,355 in this case after removing known deleted links) - for the crawling purposes I believe we've picked correct unique pages as the number is fairly close to that used in Rob's test (it would be almost exact if I included links known to be deleted but it would be silly to do so when it's known they are removed).

After our recrawl 753,920 unique referring pages contained at least one link to searchengineland.com, in reality many linked to more than one URL on that domain which is why there is a higher link number reported by our system.
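The relationship between link rows, deleted links and unique referring pages described above can be illustrated with a toy export. The field names (`source`, `target`, `anchor`, `kind`, `deleted`) are invented for this sketch and are not the columns of any real report.

```python
from collections import namedtuple

# One row per (referring page, target URL, anchor text, link type) combination.
LinkRow = namedtuple("LinkRow", "source target anchor kind deleted")


def summarize(rows):
    """Count raw rows, rows not flagged deleted, and unique referring pages."""
    live_rows = [r for r in rows if not r.deleted]
    return {
        "rows": len(rows),
        "rows_excluding_deleted": len(live_rows),
        "unique_referring_pages": len({r.source for r in live_rows}),
    }


rows = [
    LinkRow("http://page1.example/", "http://target.example/a", "SEL", "text", False),
    LinkRow("http://page1.example/", "http://target.example/a", "", "image", False),
    LinkRow("http://page2.example/", "http://target.example/b", "news", "text", True),
    LinkRow("http://page3.example/", "http://target.example/", "home", "text", False),
]
summary = summarize(rows)
```

Here four rows shrink to three once the deleted link is excluded, and to two unique referring pages, because one page links twice (text and image). The same effect at scale is how a headline link count can be more than double the number of pages worth recrawling.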

Our test results that were published above clearly show that we've got higher number of unique referring pages, what we dispute is how many of them contained a live link - I'd prefer to limit questions only to that rather than ask about other functionality of our system that I'd be happy to answer via our support system or via email.

Forgot to add - image type links were included in our crawl but mentions were NOT included (by default they are filtered out in Advanced Reports), details on what they are can be found in our glossary (not posting link here to avoid accusations of trying to advertise our site).

Today I crawled most of Majestic SEO data for searchengineland.com:

Majestic reported in site explorer:

1,883,355 urls

39,509 domains

25,523 ips

15,779 subnets

After filtering alt text, mentioned, deleted:

1,545,335 urls

37,490 domains

24,650 ips

15,335 subnets

After taking unique urls:

823,418 urls scheduled for crawl (unique)

719,854 urls crawled (I stopped crawl before finished to avoid too many requests at same IP/domain)

247,032 urls found 30x and scheduled for crawl (unique)

188,354 urls crawled found 30x (I stopped crawl before finished to avoid too many requests at same IP/domain)

908,208 urls crawled total (719,854 + 188,354)

Live without status 30x urls:

10,852 subnets

15,543 ips

22,822 domains

27,634 hostnames

361,315 urls

Live with status 30x urls:

13,798 subnets

21,822 ips

31,149 domains

36,656 hostnames

510,106 urls

Result:

823,418/908,208 urls - 61.95%/56.17% live

37,490 domains - 83.09% live

24,650 ips - 88.53% live

15,335 subnets - 89.98% live

Without finishing crawling 162,242 urls I already get more live urls than this test result (when I included 30x redirect). I operate a private backlink db of similar size to Ayima V2; in most comparisons I did between backlink tools, Majestic SEO has the most unique domains. Don't be fooled by Ahrefs showing hostname counts as domain counts, and Seomoz has many more backlinks than your test shows, but maybe they limit their API.

Overall I welcome these tests, I know they are a lot of work :-)

thanks for that :)

1. Have you made almost the same research same day for Ahrefs and Seomoz?

2. What did you mean by "Ahrefs showing hostname counts as domain counts"?

1. No, for searchengineland with so many backlinks I have too limited access to their services to get the full list of backlinks. I was interested in the difference between following 30x and not, because I follow them only one deep, and also in the percentage of status 40x, which was very low:

Result first crawl status codes:

20x = 60.95%

30x = 37.12%

40x = 1.64%

50x = 0.28%

Result second crawl (30x) status code:

20x = 97.07%

30x = 2.38%

40x = 0.07%

50x = 0.49%

2. Backlinks from sub domains such as blog.domain.tld, forum.domain.tld and beta.domain.tld count as 3 hostnames but only as 1 domain. A lot of backlink tools show only the hostname counts (with different naming), which can't be compared with domain counts because hostname counts are almost always higher.
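The hostname versus domain distinction can be shown with a few lines of Python. This is a deliberately naive sketch: `naive_domain` just keeps the last two labels of a hostname, which is an assumption that breaks for suffixes such as `co.uk`; a real tool would consult the Public Suffix List instead. The function names are made up for illustration.

```python
from urllib.parse import urlparse


def hostname(url):
    """Lower-cased hostname of a URL, or an empty string if there is none."""
    return (urlparse(url).hostname or "").lower()


def naive_domain(host):
    """Last two labels of a hostname. Naive: wrong for suffixes like co.uk."""
    return ".".join(host.split(".")[-2:])


def count_refs(urls):
    """Return (unique hostnames, unique domains) for a list of referring URLs."""
    hosts = {hostname(u) for u in urls}
    hosts.discard("")
    domains = {naive_domain(h) for h in hosts}
    return len(hosts), len(domains)


refs = [
    "http://blog.domain.tld/post-1",
    "http://forum.domain.tld/thread/42",
    "http://beta.domain.tld/",
]
host_count, domain_count = count_refs(refs)
```

For the three example subdomains this gives 3 hostnames but only 1 domain, which is why a "referring domains" figure built from hostnames cannot be compared directly with one built from registered domains.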

2. Have you checked Ahrefs before you made this statement about hostnames?

I know that Ahrefs shows domains and I can not understand what makes you say otherwise. Could you explain please?

Yes, I checked it.

You don't need to be a rocket scientist, Ahrefs wrote already in their ref domains url path the word 'subdomains':

http://ahrefs.com/site-explorer/refdomains/subdomains/domains.tld

You click ref domains (count or tab) but they show subdomains also, subdomains are hostnames. Two or more different hostnames can have the same domain. To show a clean ref domain list a domain name must be unique in the list.

It's not true.

Let me explain what "subdomains" means in this URL http://ahrefs.com/site-explorer/refdomains/subdomains/domains.tld .

Ahrefs Site Explorer has 4 different modes: subdomains, domains, prefix, exact. These modes define scope of analysis.

For example if you type this URL http://www.seobook.com/comparing-backlink-data-providers and click "Explore Links" Ahrefs will show you 4 modes : "URL", "URL/*", "domain/*" and "*.domain/*".

"*.domain/*" means "I want to see all backlinks for www.seobook.com and all its subdomains, such as sub1.www.seobook.com" that's why this mode is named "subdomains".

"domain/*" means "I want to see all backlinks for www.seobook.com. Just this domain. Ignore subdomains please." that's why this mode is named "domain".

"URL/*" means "I want to see all backlinks for pages which start with http://www.seobook.com/comparing-backlink-data-providers"

"URL" means "I want to see all backlinks for this exact URL http://www.seobook.com/comparing-backlink-data-providers"

So, subdomains in this URL http://ahrefs.com/site-explorer/refdomains/subdomains/domains.tld is about scope of analysis.

Regardless of what mode you choose Ahrefs always shows unique domains on Referring Domains count and tab.
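One way to read the four modes described in this comment is as a test of whether a linked-to URL falls inside the scope of the URL that was entered. The sketch below is only an interpretation of that description; it is not Ahrefs' code, and the function name `in_scope` and the mode strings are assumptions.

```python
from urllib.parse import urlparse


def in_scope(entered, candidate, mode):
    """Return True if `candidate` falls inside the scope of `entered`."""
    entered_host = (urlparse(entered).hostname or "").lower()
    candidate_host = (urlparse(candidate).hostname or "").lower()
    if mode == "exact":        # "URL": this exact URL only
        return candidate == entered
    if mode == "prefix":       # "URL/*": any URL starting with the entered URL
        return candidate.startswith(entered)
    if mode == "domain":       # "domain/*": same hostname, any path
        return candidate_host == entered_host
    if mode == "subdomains":   # "*.domain/*": the hostname plus its subdomains
        return (candidate_host == entered_host
                or candidate_host.endswith("." + entered_host))
    raise ValueError(f"unknown mode: {mode}")
```

Read this way, the mode only decides which target URLs have their backlinks included; how the referring side is grouped into domains is a separate question, which is the point under dispute here.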

Thanks I was aware of the options.

You wrote "Regardless of what mode you choose Ahrefs always shows unique domains on Referring Domains count and tab." When I type some domains in the URL I gave at the place of "domains.tld", I get sometimes a list where sub domains are grouped with a "+" before the domain and counted only once, but other times I get a list where sub domains are not grouped and listed separated and counted each.

I found and fixed some bugs in my code and checked again the already crawled data and get now better results:

Live backlinks found in crawled pages with status 30x followed (one deep) urls included:

581,037 urls

40,135 hostnames

33,302 domains

23,324 ips

14,593 subnets

This also improved the percentage live backlinks, this time the right urls crawled value is used:

Result:

823,418 urls scheduled/719,854 urls crawled - 70.56%/80.72% live

37,490 domains - 88.83% live

24,650 ips - 94.62% live

15,335 subnets - 95.16% live
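The percentages in this result can be reproduced directly from the counts quoted in the comment (live counts from the re-checked crawl, totals from the filtered Majestic figures). The helper name `pct` is made up for this sketch.

```python
def pct(live, total):
    """Share of `total` that is live, as a percentage rounded to 2 places."""
    return round(100 * live / total, 2)


live_urls = 581_037
urls_scheduled = 823_418
urls_crawled = 719_854

# (live count, filtered total) pairs as quoted above
counts = {
    "domains": (33_302, 37_490),
    "ips": (23_324, 24_650),
    "subnets": (14_593, 15_335),
}

live_of_scheduled = pct(live_urls, urls_scheduled)
live_of_crawled = pct(live_urls, urls_crawled)
live_by_level = {name: pct(live, total) for name, (live, total) in counts.items()}
```

Dividing by the URLs actually crawled rather than the URLs scheduled is what moves the URL-level figure from roughly 71% to roughly 81%, so it matters which denominator a comparison quotes.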

I was interested to see what domains had also backlinks from the crawled pages and made a top 25 ordered by ref domains:

1 searchengineland.com - 33,302

2 twitter.com - 21,631

3 google.com - 19,987

4 facebook.com - 19,021

5 blogspot.com - 13,503

6 youtube.com - 12,884

7 wikipedia.org - 8,961

8 yahoo.com - 8,787

9 linkedin.com - 8,281

10 feedburner.com - 8,203

11 wordpress.org - 7,716

12 digg.com - 7,388

13 wordpress.com - 6,636

14 techcrunch.com - 6,544

15 flickr.com - 6,022

16 mashable.com - 5,975

17 seomoz.org - 5,785

18 nytimes.com - 5,744

19 reddit.com - 5,286

20 icio.us - 5,166

21 searchenginewatch.com - 5,079

22 amazon.com - 4,949

23 stumbleupon.com - 4,117

24 typepad.com - 3,999

25 wsj.com - 3,825

I have been using these tools for carringtonstanley.com/seo and I am amazed how it makes look so good and my clients think I am amazing. you are amazing!

edit: screw off for that link drop shitbag. your account has been banned.

Great insight into not so common issue dealt with.

A q's - Read somewhere (And didn't test yet) that google is able to read & take Into account domains mentioned in text, not as active hyperlinks ... Thus & if true, shouldn't ur filtering be adjusted?

And in any case, rn't we now days dealing w/ a more user/social approach by Google and thus 'mentioning' should have a more central place in ur analysis?

... like local search, having the same NAP data across many sites validates that it is more likely to be true, correct & current information. There might be some bleed in the semantic understanding of words at some search engines due to co-citations (years ago MSN Search ranked Traffic-Power.com for "Aaron Wall" because of the co-citation around the bogus lawsuit Traffic Power threw at me). But generally speaking search engines wouldn't want to put a lot of weight on unlinked messages on web pages because it is very easy for those to get past spam filters and such. A person could create thousands of them (perhaps millions even) on a very low budget via comment spamming and such, and since they are unlinked they are less likely to get nuked by the owners of those blogs for being spam.

Just took a look and OSE reports SEL having 253K links with 238K being external yet the graphs used seem to indicate SEL has less than 100k links according to OSE

We could really do with a conclusion to all this.

...thus there will never be any longstanding written in stone conclusion of sorts.

It is hard to win on freshness, depth & cleanliness all at the same time. To some degree one wins on one of those by sacrificing another (for example, a shallower database will generally have a far higher % of active links, as it will pick up less noise & churn + it will be cheaper to recrawl faster). And whoever wins one month might not win the next.

All these companies are competing for customers, growing quickly & reinvesting profits to fuel further growth. There are probably nearly a dozen companies competing in this space offering link data. Recently even the search engine Blekko put their hat in the ring. And Bing also started offering link data in their webmaster tools (surely Microsoft has boatloads more money to crawl the web than all the SEO tool companies combined, probably by a factor of over 10,000, though Bing is still lacking many of the features these tools have, since Bing's link tool is free & other companies sell the link data as a service).

Hm,

Rather I think we were pretty much spot on, and that's despite using a "desktop crawler".

If you're evaluating a link based crawl product (and I did say in my study you should be combining and de-duping data from all of them), you can learn a lot about the crawl tech from metrics such as crawl depth per domain (the # of pages crawled per domain). This study doesn't really go into that. You've also not looked at exact duplicate row %'s, index composition (200 vs 301 vs 404 vs 302 vs 5xx etc etc) for each tool, whether or not there were chunks of data missing (we performed exports over a period of days in our work and compared the differences on MajFresh).

I'd love to know a few things about Ayima V2 - specifically:

1) What was the seed source of the link data? Is it based upon (and then expanded upon) Majestic, Mozscape etc?

2) Are you monitoring a small pool of domains or indexing the web?

3) How are you managing quality of data vs quantity of URLs crawled? It sounds like you're deliberately disregarding low quality links to preserve bandwidth? I could be wrong there - this doesn't sound like something you'd want to ignore.

4) What is your opinion on Google Webmaster Tools link data (I found it to have the highest root domain diversity out of all of the tools for our target domain)? - appreciate this is a comparison of 3rd party tools, but given you're only looking at a single domain, it might be justifiable to use GWMT as a fair benchmark.

I'd expect Majestic's main index to be significantly more diverse compared to their Fresh index. Actually, that's about the same size (or thereabouts) as Mozscape.

Thanks,

Richard Baxter

Since Majestic added the new tabs today, i can't see any reason for using anything else. Used to find Ahrefs useful for anchors, new, and lost links. But now Majestic has them.

So probably you're asking yourself, leaving aside "mine is bigger than yours", what's the best tool of the bunch to use? The answer to that is either all of them or none of them, depending on your budget.

To those with budget: these tools help you get a quick sense of just how far up the arms you will need to roll your sleeves when taking on a client and rendering their competitors below you. But bear in mind that lots of the web aged, and that was also in terms of the way people viewed it. Forums, blogs and other pre-social networks were just nuked by Facebook. So you're going to be analyzing traffic sources that could well and truly be dead and were only actually useful at the time, not for the link, but because they actually had readers!

With these tools you can get a quick sense of just how good or smelly their SEO practice is and that can work in your favour if you play it out right. It's far better to just start from the position of where do I need to be seen, and what are the most important places that will get the search engines to give me extra credit.

If you have the niche keywords, you can pretty much pull back an entire linking strategy with some advanced operators in Google, Alexa and an Excel spreadsheet.

G.

Can you confirm where in ahrefs you get the information you mention above "Not all links are created equal, with Ahrefs showing both the rank-improving links and the crappy spam". Where exactly does ahrefs show this info? or are you working it out from the reports

Thanks

Niko

Yes, i was looking for this sort of article, finally found and i like to say thank so much Rob for this excellent work on these to let us know the whole things what are things we get from the ones or not. Being an expert, we should have a tool that features all functions which include to carry our tasks effectively and efficiently .. ! thumbs up!

I think Ahrefs is a good option to go for
