Whenever Google does a major algorithm update we all rush off to our data to see what changed in terms of rankings and search traffic, and then look for trends to try to figure out why.

The two people I chat most with during periods of big algorithmic changes are Joe Sinkwitz and Jim Boykin. I recently interviewed them about the Penguin algorithm.

Topics include:

- what it is

- its impact

- why there hasn't been an update in a while

- how to determine if issues are related to Penguin or something else

- the recovery process (from Penguin and manual link penalties)

- and much, much more

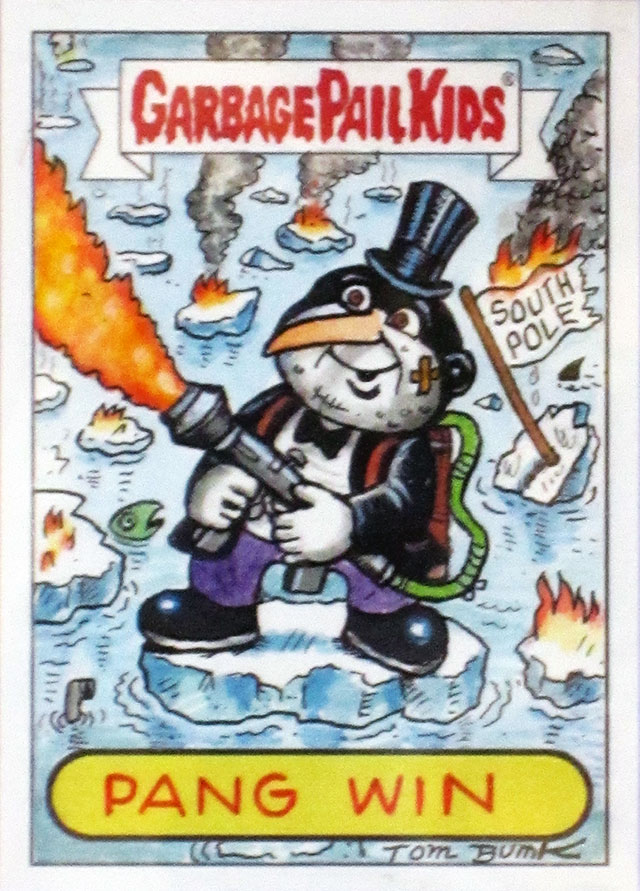

Here's a custom drawing we commissioned for this interview.


To date there have been 5 Penguin updates:

- April 24, 2012

- May 25, 2012

- October 5, 2012

- May 22, 2013 (Penguin 2.0)

- October 4, 2013

There hasn't been one in quite a while, which is frustrating many who haven't been able to recover. On to the interview...

At its core what is Google Penguin?

Jim: It is a link filter that can cause penalties.

Joe: At its core, Penguin can be viewed as an algorithmic batch filter designed to punish lower quality link profiles.

What sort of ranking and traffic declines do people typically see from Penguin?

Jim: 30-98%. Actually, I've seen some "manual partial matches" where traffic was hardly hit...but that's rare.

Joe: Near total. I should expand. Penguin 1.0 has been a different beast than its later iterations; the first one has been nearly a fixed flag whereas later iterations haven't been quite as severe.

After the initial update there was another one about a month later & then one about every 6 months for a while. There hasn't been one for about 10 months now. So why have the updates been so rare? And why hasn't there been one for a long time?

Jim: Great question. We all believed there'd be an update every 6 months, and now it's been way longer than 6 months...maybe because Matt's on vacation...or maybe he knew it would be a long time until the next update, so he took some time off...or perhaps Google wants those with an algorithmic penalty to feel the pain for longer than 6 months.

Joe: 1.0 was temporarily escapable if you were willing to 301 your site; after 1.1 the redirect began to pass on the damage. My theory on why it has been so very long on the most recent update has to do with maximizing pain - Google doesn't intend to lift its boot off the throats of webmasters just yet; no amount of groveling will do. Add to that the complexity of every idiot disavowing 90%+ of their clean link profiles and 'dirty' vs 'clean' links is difficult to ascertain on that signal.

Jim: Most people disavow some, then they disavow some more...then next month they disavow more...wait a year and they may disavow them all :)

Joe: Agreed.

Jim: Then Google will let them out...hehe, tongue in cheek...a little.

Joe: I've seen disavow files with over 98% of links in there, including Wikipedia, the Yahoo! Directory, and other great sources - absurd.

Jim: Me too. Most of the people are clueless ... there's tons of people who are disavowing links just because their traffic has gone down, so they feel they must have been hit by penguin, so they start disavowing links.

Joe: Yes; I've seen a lot of panda hits where the person wants to immediately disavow. "whoa, slow down there Tex!"

Jim: I've seen services where they guarantee you'll get out of a penguin penalty, and we know that they're just disavowing 100% of the links. Yes, you get your manual penalty removed that way, but then you're left with nothing.

Joe: Good time to mention that any guarantee of getting out of a penalty is likely sold as a bag of smoke.

Jim: or as they are disavowing 100% of the links they can find going to the site.

OK. I think you mentioned an important point there Jim about "100% of the links they can find." What are the link sources people should use & how comprehensive is the Google Webmaster Tools data? Is WMT data enough to get you recovered?

Joe: Rarely. I've seen cases where the examples listed in a manual action might be discoverable on Ahrefs, Majestic SEO, or in WMT, but upon cleaning them up (and disavowing further of course) Google will come back with a few more links that weren't initially in the WMT data dump. I'm dealing with a client on this right now that bought a premium domain as-is and has been spending about a year constantly disavowing and removing links. Google won't let them up for air and won't do the hard reset.

Jim: well first...if you're getting your backlinks from Google, be sure to pull your backlinks from the www and the non www version of your site. You can't just use one: you HAVE to pull backlinks from both, so you have to verify both your www and your non www version of your site with Google Webmaster Tools.

We often start with that. When we find big patterns that we feel are the cause, we'll then go into OSE, Majestic SEO, and Ahrefs, and pull those backlinks too, and pull out those that fit the patterns, but that's after the Google backlink analysis.
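As a rough illustration of combining backlink exports from the www and non-www properties (and later from other tools), here is a minimal sketch. It assumes each export has already been reduced to a plain list of linking URLs; the function names and the normalization rules are hypothetical, not anything Jim described in detail.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Lowercase the scheme and host and drop a trailing slash so that
    trivially different spellings of one linking URL compare equal."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    query = f"?{parts.query}" if parts.query else ""
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{path}{query}"


def merge_backlinks(*exports):
    """Merge any number of backlink lists into one de-duplicated, sorted list."""
    merged = set()
    for export in exports:
        for url in export:
            if url.strip():
                merged.add(normalize(url))
    return sorted(merged)


# Hypothetical exports: one per verified property (www and non-www).
www_export = ["http://Example.org/page/", "http://blog.example.net/post"]
non_www_export = ["http://example.org/page", "http://spam.example.com/links?id=1"]
all_links = merge_backlinks(www_export, non_www_export)
```

The same function accepts further lists, so exports from OSE, Majestic SEO, or Ahrefs can be folded in after the initial Google-only pass.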

Joe, you mentioned people getting hit by Panda and mistakenly going off to the races to disavow links. What are the distinguishing characteristics between Penguin, Panda & manual link penalties?

Joe: Given they like to sandwich updates to make it difficult to discern, I like this question. Penguin is about links; it is the easiest to find but hardest to fix. When I first am looking at a URL I'll quickly look at anchor % breakdowns, sources of links, etc. The big difference between penguin and a manual link penalty (if you aren't looking on WMT) is the timing -- think of a bomb going off vs a sniper...everyone complaining at once? probably an algorithm; just a few? probably some manual actions. For manual actions, you'll get a note too in WMT. With panda I like to look first at the on-page to see if I can spot the egregious KW stuffing, weird infrastructure setups that result in thin/duplicated content, and look into engagement metrics and my favorite...externally supported pages - to - total indexed pages ratios.
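The anchor % breakdown Joe mentions can be approximated with a few lines of code. This is only a sketch, assuming you already have the anchor text of each inbound link as a list of strings; the function name and the sample anchors are made up for illustration.

```python
from collections import Counter


def anchor_breakdown(anchors):
    """Return (anchor text, percent of all links) pairs, most common first."""
    cleaned = [a.strip().lower() for a in anchors if a.strip()]
    counts = Counter(cleaned)
    total = len(cleaned)
    return [(text, round(100 * n / total, 1)) for text, n in counts.most_common()]


# Hypothetical profile: heavy on one commercial phrase, light on brand anchors.
sample = ["cheap widgets"] * 6 + ["Example Co"] * 3 + ["example.com"]
breakdown = anchor_breakdown(sample)
```

What share counts as suspicious is a judgment call that the interview does not put a number on, so the sketch only reports the percentages.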

Jim: Manual, at least you can keep resubmitting and get a yes or no. With an algorithmic, you're screwed....because you're waiting for the next refresh...hoping you did enough to get out.

I don't mind going back and forth with Google with a manual penalty...at least I'm getting an answer.

If you see a drop in traffic, be sure to compare that to the dates of Panda and Penguin updates...if you see a drop on one of the update days, then you can know if you have Panda or Penguin....and if your traffic is just falling, it could be just that, and no penalty.
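The date comparison Jim describes is easy to automate. The sketch below uses the five Penguin dates listed earlier in this post; Panda dates are not listed here, so you would need to supply them yourself. The three-day window and the function name are assumptions for illustration only.

```python
from datetime import date

# Penguin update dates as listed at the top of this post.
PENGUIN_UPDATES = [
    date(2012, 4, 24),
    date(2012, 5, 25),
    date(2012, 10, 5),
    date(2013, 5, 22),
    date(2013, 10, 4),
]


def nearby_updates(drop_date, updates, window_days=3):
    """Return the known update dates within window_days of a traffic drop.

    window_days is an assumed tolerance, not a documented figure.
    """
    return [u for u in updates if abs((drop_date - u).days) <= window_days]


# A drop the day after the first Penguin update matches; a mid-January
# drop matches nothing and may just be falling traffic with no penalty.
hit = nearby_updates(date(2012, 4, 25), PENGUIN_UPDATES)
miss = nearby_updates(date(2013, 1, 15), PENGUIN_UPDATES)
```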

Joe: While this interview was taking place an employee pinged me to let me know about a manual action that was denied, with an example URL being something akin to domain.com/?var=var&var=var - the entire domain was already disavowed. Those 20 second manual reviews by 3rd parties without much of an understanding of search don't generate a lot of confidence for me.

Jim: Yes, I posted this yesterday to SEOchat. Reviewers are definitely not looking at things.

You guys mentioned that anyone selling a guaranteed 100% recovery solution is likely selling a bag of smoke. What are the odds of recovery? When does it make sense to invest in recovery, when does it make sense to start a different site, and when does it make sense to do both in parallel?

Jim: Well, I'm one for trying to save a site. I haven't once said "it's over for that site, let's start fresh." Links are so important, that if I can even save a few links going to a site, I'll take it. I'm not a fan of doing two sites, causes duplicate content issues, and now your efforts are on two sites.

Joe: It depends on the infraction. I have a lot more success getting stuff out of panda, manual actions, and the later iterations of penguin (theoretically including the latest one once a refresh takes place); I won't take anyone's money for those hit on penguin 1.0 though...I give free advice and add it to my DB tracking, but the very few examples I have where a recovery took place that I can confirm were penguin 1.0 and not something else, happened due to being a beta user of the disavow tool and likely occurred for political reasons vs tech reasons.

For churn and burn, redirects and canonicals can still work if you're clever...but that's not reinvestment so much as strategy shift I realize.

You guys mentioned the disavow process, where a person does some, does some more over time, etc. Is Google dragging out the process primarily to drive pain? Or are they leveraging the aggregate data in some way?

Joe: Oh absolutely they drag it out. Mathematically I think of triggers where a threshold to trigger down might be at X%, but the trigger for recovery might be X-10%. Further though, I think initially they looooooved all the aggregate disavow data, until the community freaked out and started disavowing everything. Let's just say I know of a group of people that have a giant network where lots of quality sites are purposefully disavowed in an attempt to screw with the signal further. :)
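Joe's trigger idea is a form of hysteresis: the bar for getting out sits lower than the bar for getting in. The sketch below is purely illustrative. Nobody outside Google knows what is measured or where the thresholds sit, so the "percent of bad links" metric, the 50% trigger, and the function name are all hypothetical; only the 10-point gap comes from Joe's example.

```python
def next_state(penalized, bad_link_pct, trigger_pct=50.0, recovery_gap=10.0):
    """Return True if the site is penalized after this refresh.

    A clean site is penalized once bad_link_pct reaches trigger_pct (X%).
    A penalized site stays penalized until bad_link_pct falls to
    trigger_pct - recovery_gap (X-10%) or lower.
    """
    if not penalized:
        return bad_link_pct >= trigger_pct
    return bad_link_pct > trigger_pct - recovery_gap


# With these made-up numbers, a site that tripped the filter at 50% is
# still penalized after cleaning up to 45%, and only escapes at 40%.
still_stuck = next_state(True, 45.0)
recovered = not next_state(True, 40.0)
```

Under a scheme like this, cleaning up just enough to dip back under the original trigger would not be enough to recover.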

Jim: pain :) ... not sure if they're leveraging the data yet, but they might be. It shouldn't be too hard for Google to see that a ton of people are disavowing links from a site like get-free-links-directory.com, for Google to say, "no one else seems to trust these links, we should just nuke that site and not count any links from there."

We can do this ourselves with our own tools...I can see how many times I've seen a domain in my disavows, and how many times I disavowed it...i.e., if I see spamsite.com in 20 disavows I've done, and I'd disavowed it all 20 times I saw it, I can see this data...or if I've seen goodsite.com 20 times, and never once disavowed it, I can see that too. I'd assume Google must do something like this as well.
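The kind of tally Jim describes could look something like the following. It is a sketch under assumptions: each past project is represented as a pair of lists (domains seen in the backlink profile, domains that ended up in the disavow file), and the function name and data layout are hypothetical rather than a description of his actual tools.

```python
from collections import defaultdict


def tally_disavows(projects):
    """Map each domain to (times seen, times disavowed) across projects."""
    stats = defaultdict(lambda: [0, 0])
    for seen, disavowed in projects:
        disavowed = set(disavowed)
        for domain in set(seen):
            stats[domain][0] += 1
            if domain in disavowed:
                stats[domain][1] += 1
    return {domain: (s, d) for domain, (s, d) in stats.items()}


# Jim's example: 20 projects where spamsite.com was always disavowed
# and goodsite.com never was.
projects = [(["spamsite.com", "goodsite.com"], ["spamsite.com"])] * 20
history = tally_disavows(projects)
```

A domain disavowed every time it shows up is a strong candidate for the next disavow file, while one that has never been disavowed is probably worth keeping.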

Given that they drag it out, on the manual penalties does it make sense to do a couched effort on the first rejection or two, in order to give the perception of a greater level of pain and effort as you scale things up on further requests? What level of resources does it make sense to devote to the initial effort vs the next one and so on? When does recovery typically happen (in terms of % of links filtered and in terms of how many reconsideration requests were filed)?

Joe: When I deliver "disavow these" and "say this" stuff, I give multiple levels, knowing full well that there might be deeper and deeper considerations of the pain. Now, there have been cases where the 1st try gets a site out, but I usually see 3 or more.

Jim: I figure it will take a few reconsideration requests...and yes, I start "big" and get "bigger."

but that's for a sitewide penalty...

We've seen sitewides get reduced to a partial penalty. And once we have a partial penalty, it's much easier to identify the issues and take care of those, while leaving links that go to pages that were not affected.

A sitewide manual penalty kills the site...a partial match penalty usually has some stuff that ranks good, and some stuff that no longer ranks...once we're at a partial match, I feel much more confident in getting that resolved.

Jim, I know you've mentioned the errors people make in either disavowing great links or disavowing links when they didn't need to. You also mentioned the ability to leverage your old disavow data when processing new sites. When does it make sense to DIY on recovery versus hiring a professional? Are there any handy "rule of thumb" guidelines in terms of the rough cost of a recovery process based on the size of their backlink footprint?

Joe: It comes down to education, doesn't it? Were you behind the reason it got dinged? You might try that first vs immediately hiring. Psychologically it could even look like you're more serious after the first disavow is declined by showing you "invested" in the pain. Also, it comes down to opportunity cost. What is your personal time worth divided by your perceived probability of fixing it?

Jim: We charge $5000 for the analysis, and $5000 for the link removal process...some may think that's expensive...but removing good links will screw you, and not removing bad links will screw you...it's a real science, and getting it wrong can cost you a lot more than this...of course I'd recommend seeing a professional, as I sell this service...but I can't see anyone who's not a true expert in links doing this themselves.

Oh...and once we start work for someone, we keep going at no further cost until they get out.

Joe: That's a nice touch Jim.

Jim: Thank you.

Joe, during this interview you mentioned a reconsideration request rejection where the person cited a link on a site that has already been disavowed. Given how many errors Google's reviewers make, does it make sense to aggressively push to remove links rather than using disavow? What are the best strategies to get links removed?

Joe: DDoS

Jim: hehe

Joe: Really though, be upfront and honest when using those link removal services (which I'd do vs trying to do them one-by-one-by-one)

Jim: Only 1% of the people will remove links anyways; it's more to show Google that you really tried to get the links removed.

Joe: Let the link holder know that you got hit with a penalty, you're just trying to clean it up because your business is suffering, and ask politely that they do you a solid favor.

I've been on the receiving end of a lot of different strategies given the size of my domain portfolio. I've been sued before (as a first course of action!) by someone that PAID to put a link on my site....they never even asked, just filed the case.

Jim: We send 3 removal requests...and ping the links too...so when we do a reconsideration request we can show Google the spreadsheet of who we emailed, when we emailed them, and who removed or nofollowed the links...but it's more about "show" to Google.

Joe: Yep, not a ton of compliance; webmasters have link removal fatigue by now.

This is more of a business question than an SEO question, but ... as much as budgeting for the monetary cost of recovery, an equally important form of budgeting is dealing with the reduced cashflow while the site is penalized. How many months does it typically take to recover from a manual penalty? When should business owners decide to start laying people off? Do you guys suggest people aggressively invest in other marketing channels while the SEO is being worked on in the background?

Jim: Manual penalties typically take 2-4 months to recover from. Recover is a relative term. Some people get "your manual penalty has been removed" and their recovery is a tiny blip - up 5%, but still down 90% from what it was prior. Getting a "manual penalty removed" is great, IF there's good links left in your profile...if you've disavowed everything, and your penalty is removed...so what...you've got nothing....people often ask where they'll be once they "recover" and I say "it depends on what you have left for links"...but it won't be where you were.

Joe: It depends on how exposed they are per variable costs. If the costs are fixed, then one can generally wait longer (all things being equal) before cutting. If you have a quarter million monthly link budget *cough* then, you're going to want to trim as quickly as possible just in order to survive.

Per investing in other channels, I absolutely wholeheartedly cannot emphasize enough how important it is to become an expert in one channel and at least a generalist in several others...even better, hire an expert in another channel to partner up with. In payday one of the big players did okay in SEO but even with a lot of turbulence was doing great due to their TV and radio capabilities. Also, collect the damn email addresses; email is still a gold mine if you use it correctly.

One of my theories for why there hasn't been a penguin update in a long time was that as people have become more afraid of links they've started using them as a weapon & Google doesn't want a bunch of false positives caused by competitors killing sites. One reason I've thought this versus the pain first motive is that Google could always put a time delay on recoveries while still allowing new sites to get penalized on updates. Joe, you mentioned that after the second Penguin update penalties started passing forward on redirects. Do people take penalized sites and point them at competitors?

Joe: Yes, they do. They also take them and pass them into the natural links of their competitors. I've been railing on negative SEO for several years now...right about when the first manual action wave came out in Jan 2012; that was a tipping point. It is now more economical to take someone else's ranking down than it is to (with a strong degree of confidence) invest in a link strategy to leapfrog them naturally.

I could speak for days straight in a congressional filibuster on link strategies used for Negative SEO. It is almost magical how pervasive it has become. I get a couple requests a week to do it even...by BIG companies. Brands being the mechanism to sort out the cesspool and all that.

Jim: Soon, everyone will be monitoring their backlinks on a monthly basis. I know one big company that submits an updated disavow list every week to Google.

That leads to a question about preemptive disavows. When does it make sense to do that? What businesses need to worry about that sort of stuff?

Joe: Are you smaller than a Fortune 500? Then the cards are stacked against you. At the very least, be aware of your link profile -- I wouldn't go so far as to preemptively disavow unless something major popped up.

Jim: I've done a preemptive disavow for my site. I'd say everyone should do a preemptive disavow to clean out the crap backlinks.

Joe: I can't wait to launch an avow service...basically go around to everyone and charge a few thousand dollars to clean up their disavows. :)

Jim: We should team up Joe and do them together :)

Joe: I'll have my spambots call your spambots.

Jim: saving the planet from penguin penalties. cleaning up the links of the web for Google.

Joe: For Google or from Google? :) The other dig, if there's time, is that not all penalties are created equal because there are several books of law in terms of how long a penalty might last. If I take an unknown site and do what RapGenius did, I'd still be waiting, even after fixing (which RapGenius really didn't do) largely because Google is not one of my direct or indirect investors.

Perhaps SEOs will soon offer a service for perfecting your pitch deck for the Google Ventures or Google Capital teams so it is easier to BeatThatPenalty? BanMeNot ;)

Joe: Or to extract money from former Googlers...there's a funding bubble right now where those guys can write their own ticket by VCs chasing the brand. Sure the engineer was responsible for changing the font color of a button, but they have friends on the inside still that might be able to reverse catastrophe.

Outside of getting a Google investment, what are some of the best ways to minimize SEO risk if one is entering a competitive market?

Jim: Don't try to rank for specific phrases anymore. It's a long slow road now.

Joe: Being less dependent on Google gives you power; think of it like a job interview. Do you need that job? The less you do, the more bargaining power you have. If you have more and more income coming in to your site from other channels, chances are you are also hitting on some important brand signals.

Jim: You must create great things, and build your brand...that has to be the focus...unless you want to do things to rank higher quicker, and take the risk of a penalty with Google.

Joe: Agreed. I do far fewer premium domaining + SEO-only plays anymore. For a while they worked; just a different landscape now.

Some (non-link builders) mention how foolish SEOs are for wasting so many thought cycles on links. Why are core content, user experience, and social media all vastly more important than link building?

Jim: links are still the biggest part of the Google algorithm - they can not be ignored. People must have things going on that will get them mentions across the web, and ideally some links as well. Links is #1 still today... but yes, after links, you need great content, good user experience, and more.

Joe: CopyPress sells content (please buy some content people; I have three kids to feed here), however it is important to point out that the most incredible content doesn't mean anything in a vacuum. How are you going to get a user experience with 0 users? Link building, purchasing traffic, DRIVING attention are crucial not just to SEO but to marketing in general. Google is using links as votes; while the variability has changed and evolved over time, it is still very much there. I don't see it going away in the next year or two.

An analogy: I wrote two books of poetry in college; I think they are ok, but I never published them or tried to get any attention, so how good are they really? Without promotion and amplification, we're all just guessing.

Thanks guys for sharing your time & wisdom!

About our contributors:

Jim Boykin is the Founder and CEO of Internet Marketing Ninjas, and owner of Webmasterworld.com, SEOChat.com, Cre8asiteForums.com and other community websites. Jim specializes in creating digital assets for sites that attract natural backlinks, and in analyzing links to disavow non-natural links for penalty recoveries.

Joe Sinkwitz, known as Cygnus, is current Chief of Revenue for CopyPress.com. He enjoys long walks on the beach, getting you the content you need, and then whispering in your ear how to best get it ranking.