Background / Intro

One of my longtime friends, who was doing Internet marketing long before I was, hit me up on Skype about a week ago praising Jasper.ai. I had to think long and hard about whether he has ever pitched or recommended something like that before, and I really can't think of another time he did. The following day my wife Giovanna mentioned something to me and I was like "oh you should check out this thing my buddy recommended yesterday" and then I looked and realized they were both talking about the same thing. :D

I have a general heuristic: if people I trust recommend something, I put it near the top of the "to do" list, and if multiple people I trust recommend it, I pull out the credit card and run at it.

Unfortunately, I have been a bit burned out recently and launched a new site I have put a few hundred hours into, so I haven't had time to do much testing. BUT I have a writer who works for me who has a master's degree in writing, and I figured she could do a solid review. And she did. :D

She is maybe even a bit more cynical than I am (is that even possible?), a certified cat lady who loves writing, reading, and poetry, and she prefers a soft sell over aggressive sales.

Full disclosure: the above link and the one at the end of this post are affiliate links, but they had zero impact on the shape or format of the review. The reviewer was completely disconnected from the affiliate program, and I paid for the software with my own credit card so she could test it out.

With that tiny micro-introduction out of the way, the rest of the post from here on out is hers. I may have made a couple of minor edits for clarity (and probably introduced a few errors she will choke me for. :D), but otherwise the rest of this post is all hers...

An In-depth Review of the Conversion.ai Writing Software

When we consider the possibilities of artificial intelligence (AI), we picture robots performing tasks autonomously, like humans. With a computer’s brain power, productivity can accelerate significantly. We also expect AI programs to evolve and get smarter the longer they are used. These types of AI rely on “machine learning,” including deep learning, to solve problems.

AI technology can be leveraged by various industries, including writing. Recently, I learned about the Conversion.ai copywriting tool. It uses machine learning and claims to write “high converting copy” for websites, ads, landing pages, emails, etc. The software is geared towards writers, marketers, entrepreneurs, and agencies that benefit from creating engaging and effective copy. To date, companies such as HubSpot, Shopify, and Salesforce are known to use the software. Currently, it’s offering a 7-day free trial with 20,000 words of credit.

To give you the lowdown on Conversion.ai, I wrote an in-depth review of how this software works. I’ll go through its various features and show examples of how I used them. I’ll also cover the advantages of using Jasper (that’s what Conversion.ai’s AI writer is called) in different writing scenarios. More importantly, I’ll discuss the challenges and specific limitations this tool might present.

Assistance in Creating High Conversion Copy

As a writer who has been doing web copy for 10 years, including the time I took a post-grad creative writing degree, I jumped at the opportunity to try this AI software. For starters, it struck me that Conversion.ai claims to provide “high converting copy” for increased conversions and higher ROI. Such claims are a tall order. If you’ve been in the marketing or sales industry, you know conversion depends on many other factors, such as the quality of the actual product, customer support, price, etc. It’s not just how well the copy is written, though that’s a vital part. But anyway, after more research, I learned the app generates copy based on proven high-converting sales and marketing messages.

To be honest, I have mixed feelings about this conversion strategy. I believe it’s a double-edged sword. This is not to undermine facts or measurable data. But basing content creation on “proven content” means you’re likely using the same phrases, techniques, and styles already used by successful competitors. Of course, this serves as a springboard for ideas, so you know what’s already out there. However, it can also become an echo chamber. Marketers must not forget that the execution still has to be fresh. Otherwise, you’ll sound like everyone else.

Next, while this approach seems sustainable, it also sounds pretty safe. If your product or service is not that distinct, you must put in extra effort to create content that stands out. This applies to all aspects of the marketing strategy, not just writing content. It’s a crucial principle I learned after reading Purple Cow by Seth Godin (thanks for the book suggestion, Aaron!).

Depending on your product or service, Conversion.ai will generate copy in the style that consumers keep coming back to. Based on the samples it generated, I’d say it really does come up with engaging copy, though it needs editing. If your business must rewrite product descriptions for an extensive inventory, Conversion.ai could cut the time in half. It can help automate description rewriting without hiring more writers, so businesses need fewer writers and editors, which saves both money and time.

What did I learn? Conversion.ai can make writing and editing faster, yes, especially for low-level content focused on descriptions. It can also help you gauge the strength of your ideas for more creative campaigns. However, it still takes solid direction and creativity to drive good marketing copy forward. In other words, it’s only as good as the writer using it. As a content creator, you cannot rely on it solely for creativity. But as an enhancer, it will significantly help push ideas forward, organize campaigns, and structure engaging copy effectively.

When you use this app, it offers many different features that help create and organize content. It also customizes copy for various media platforms. Beyond rewriting, it even has special brainstorming tools designed to help writers consider various angles for an idea. This can add more flavor and uniqueness to a campaign.

At the end of the day, what will set your copy apart is the strength of your ideas and your communication strategy. How you customize content for a business is still entirely up to you. AI writing tools like Conversion.ai can only help enhance your content and the ideas behind it. It’s a far cry from creating truly unique concepts for your campaign, but it definitely helps.

Conversion.ai Writing Features & How They Work

This AI writing app comes with plenty of “writing templates” customized to help you write with a specific framework or media platform in mind. Currently, Conversion.ai offers 39 different writing templates, or content building blocks, that it says deliver results. Below, I’ll go through how many of them work.

For company or product descriptions, Conversion.ai has a Start Here step-by-step guide, which says users should alternate between the Product Description and Content Improver templates until they find the right mix. But for this review, I just focused on how to use the templates for different writing projects. The app comes with video instructions as well as a live training call if you need further help using it.

Each template asks you to input a description of what you want to write about. This is limited to 600 characters. The description is the sole basis for how Jasper generates or expands your content. It also helps you brainstorm and structure ideas for an article or campaign.

One issue I found is that the 600-character limit can make it hard to paste the full content generated by the AI back into the template for improvement. Jasper often churns out marketing copy longer than 600 characters, so if you want to run the improved copy through again, you might have to do it in two batches. In any case, Jasper can generate as many improved writing samples as you need.

To give you a better idea, here are different Conversion.ai templates and how they work. This is going to take a while, so have your coffee ready.

Long-form Assistant

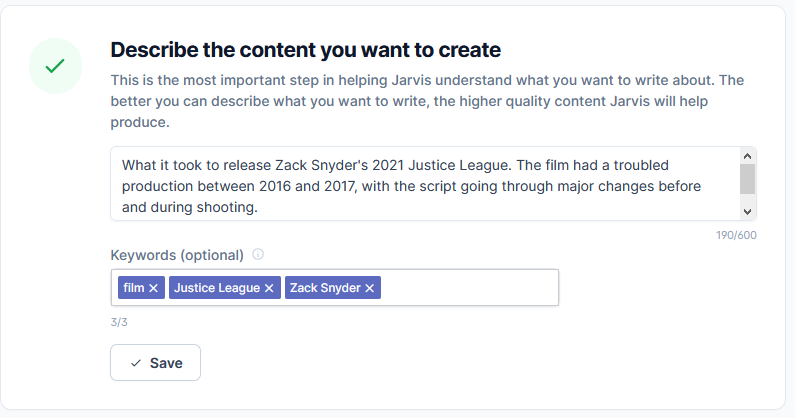

This is for longer articles, emails, scripts, and stories. It’s also suggested for writing books. It has two modes: a blank document where you can start typing freely, and an assistant workflow. The blank document also lets you access all the other writing templates, listed vertically. The long-form assistant workflow, on the other hand, is where the app asks you to describe the content you want to create. Consider this carefully. The better you can articulate your topic, the higher the quality of the content Jasper can help generate.

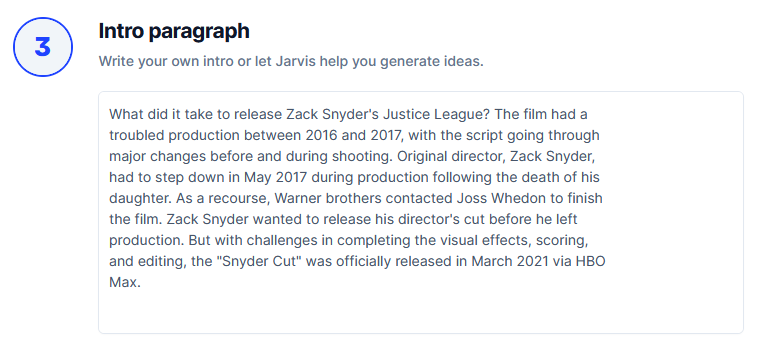

For this example, suppose I want to write about what it took to finally release Zack Snyder’s 2021 Justice League. I want to write this feature article for my film and culture website.

Jasper asks for a maximum of three keywords. It’s optional, but I presume adding keywords will help Jasper generate more relevant content. Next, it prompts you to write a working title and start an introductory paragraph. Once you write your initial title, it will generate other title ideas.

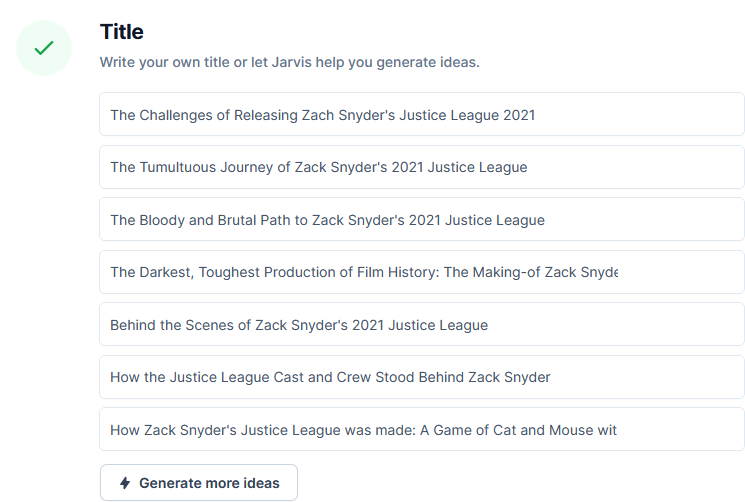

For the title, I wrote: The Challenges of Releasing Zach Snyder’s Justice League 2021

It generated the following suggestions:

Jasper generated a long list of appropriate and catchy titles. I personally liked “The Tumultuous Journey of Zack Snyder’s Justice League 2021,” though I’d shorten it a bit. I also liked “The Darkest, Toughest Production in Film History,” which basically tells audiences how hard it was to produce this version of the film. Though it’s an exaggeration, it can be qualified in the article. The other suggestions, with “Behind the Scenes” and “Towards a Better Script,” took more specific angles, and I like that I could explore those topics too. Overall, I think it’s a great tool for making title writing faster.

So far, so good, right? But when I got to content suggestions, it got a bit messy, mainly because the suggested content was too general. It also tended to suggest expansions that strayed from the main topic. This did not sound very promising. Moreover, the AI added details such as dates, so I had to check whether they were accurate. In a previous test I did (and even in this example), it got a date completely wrong. So I advise writers to always double-check the details suggested by Jasper.

Take a look below. The top paragraph was the one I wrote, while the ones below are suggested by Jasper.

Content suggestions from Jasper:

1) You might not be able to see Zack Snyder’s Justice League on the big screen, but you can catch it online in 2021. It was released digitally by Warner Bros. on November 27th 2020 and we’ve been watching it slowly climb the ranks of IMDB since then. The film had a troubled production between 2016 and 2017 with the script going through major changes before and during shooting. But now that all is said and done, what did people think? Let’s find out!

2) Justice League is a film that was released on November 17, 2017. The movie had been preceded by Batman v Superman: Dawn of Justice and Wonder Woman. It’s also the fifth installment in the DC Extended Universe (DCEU). Despite being generally well-received by critics, it was considered to be a disappointment at the box office. In this blog post, we’ll take you behind the scenes and show you what went wrong with Zack Snyder’s 2021 Justice League film production!

3) Director Zack Snyder’s Justice League film was one of the most anticipated films of 2017. Unfortunately, the film had a troubled production and was not released until 2021. That didn’t stop it from becoming one of the highest-grossing films of all time. What does this have to do with SEO? When you release content on your website, be sure to include keywords that are relevant to your business and industry!

At first glance, the introduction actually looks appealing to Justice League fans. Many people have been anticipating seeing it on the big screen. But on closer reading, compared to the paragraph I wrote, the AI content had many problems. Besides being too general, it had imprecise details. Though you can argue these problems are small and editable, they add time to your work. They can even derail you from focusing on your topic.

The first AI suggestion said the Snyder Cut was released digitally by Warner Bros. on November 27, 2020. Upon further research, I found no confirmation of this. There was a YouTube video “speculating” about its release in November 2020, but from the looks of it, this did not pan out. Officially, Zack Snyder’s Justice League was released on March 18, 2021, on the HBO Max streaming platform, according to Rotten Tomatoes. And yes, it has been climbing the ranks since its digital release.

If you’re not careful about fact-checking, you might end up with misleading information. And frankly, I feel some of the other suggestions tend toward fluff. However, what you can do is pick the best suggestions and combine them into one coherent paragraph. The first suggestion ended the introduction with “But now that all is said and done, what did people think? Let’s find out!” While that’s something I want to touch on eventually, it is not the main focus of my introduction. The AI was not sensitive enough to recognize that this follow-up was out of place. I’d rather get to the details of the challenging production. If I use this suggestion, I’ll have to edit it into “Let’s take a look at what it took to deliver the highly anticipated Snyder Cut,” or something to that effect.

The second example, on the other hand, was quite a miss. It started by talking about the 2017 Justice League film. While it’s good to explain how the project started, it got lost in discussing the 2017 version. Worse, it did not transition smoothly into the 2021 Snyder Cut. If I read this introduction, I’d be confused into thinking the article was about the 2017 Justice League. Finally, it awkwardly ended the paragraph with “we’ll take you behind the scenes and show you what went wrong with Zack Snyder’s 2021 Justice League film production!” Besides being wordy, the sentence suddenly brings up the 2021 Justice League out of nowhere. I also would not frame the production’s challenges as something that went wrong. That’s unnecessary hype. It’s confusing, and just an example of bad writing. Again, while it can be fixed with editing, I feel I’m better off writing on my own.

Finally, the third example actually began okay. But then it started talking about SEO out of nowhere. I don’t know where that came from or why the AI did that, but I’ll count it as a totally unusable suggestion. I reckon there might be more of those glitches if I generate more content suggestions from Jasper.

SIDEBAR FROM AARON: COUGH. SEO IS EVERYTHING. HOW DO I REEEEECH DEZ KIDZ

These were nuances the AI was not able to catch. It’s probably drawing on trending articles from the time, which tended towards hype and dated showbiz information. And though the suggestions were interesting, they were mostly too general or went against the direction I needed. If the AI can get details like these wrong, imagine what that would mean for health or political articles. But to be fair, it did generate other usable suggestions that needed less serious edits. It’s worth looking into those.

However, by this point, I felt I was better off writing the feature without the app, at least for this example. I guess it’s really hit or miss. Even with so many content suggestions to choose from, you can still end up with inappropriate samples alongside the good ones. But at least I got a good title out of it. Personally, I’d rather go straight to doing my own research.

Framework Templates

Conversion.ai allows you to write copy based on marketing frameworks that professionals have used for years. It’s ideal for promoting brands, products, and services. This feature includes the following templates:

- AIDA Framework: The AIDA template stands for Attention, Interest, Desire, and Action. This basically divides your copy into sections that draw attention from consumers and pique their interest. The suggested copy also includes content that appeals to the consumer’s desire, then ends with a call to action.

- PAS Framework: PAS stands for Problem, Agitate, Solution. The template generates copy that identifies the consumer’s problem, agitates it by emphasizing how painful it is, and then presents the solution. It’s focused on how a particular product will help solve a consumer’s problem.

- Before-After-Bridge Framework: Also known as the BAB framework, this copywriting structure revolves around the idea of getting a consumer from a bad place to a better one. It contrasts the consumer’s situation before and after using a product, then shows how the product bridges the gap.

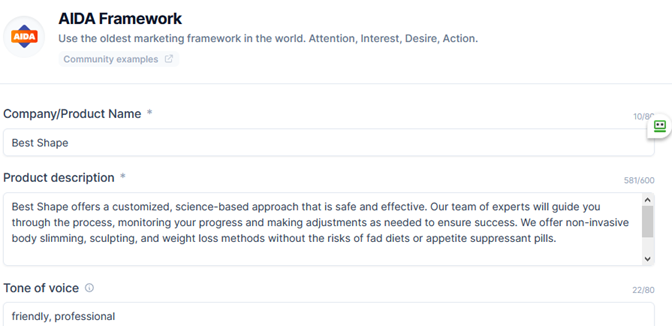

For this example, I used the AIDA template for an imagined non-invasive weight loss service company. The new company promotes fitness and advocates against fad diets. It performs non-surgical weight loss procedures, such as wraps and thermomagnetic massages.

Again, Jasper asks for a description. It also requires you to specify the tone of the copy. I entered “friendly” and “professional” in the tone box. See my input below.

Here’s the first suggestion from Jasper:

Based on this example, I’d say the AI-generated content is quite engaging. It tries to add a personal touch by letting customers know the company is there to help. The writing empathizes with consumers who have a hard time losing weight. However, since this is a new company, the line “We have helped thousands of people lose weight and get in shape” does not apply. So as a writer, I simply have to remove it. It can be replaced with a statement of the company’s intent to help people lose weight and get in shape.

I actually pulled at least 6 different content suggestions. From these, writers could take the best parts and edit them into one strong description. On its own, the content would still benefit from a lot of editing. Here are some issues you might encounter while generating copy suggestions:

- Hard Sell Copy. The sample content can be hard sell, even if you specify a professional tone of voice. It tends to use exclamation marks (!) in every sample. I believe this depends on the product or service you are writing about. Certain products or services may sell better with a hard sell approach, so the AI suggests this strategy. It may also be that this is the “proven” way to communicate with consumers. But if you’re going against this direction, it’s a nuance the AI tool might miss. If your business or client specifically avoids exclamation marks in copy, be ready to make the necessary edits.

- Can be Wordy, Long, Redundant. In terms of style, here’s where you can’t rely on Jasper to write the entire thing. If you input a long and detailed product description, the AI tends to generate wordy variations of the copy. You’ll also notice some details are redundant. By copywriting standards, this needs tightening. Conciseness can be an issue, especially if you’re not used to brevity. Thus, I believe this tool will best benefit writers and editors who have considerable experience crafting straightforward copy.

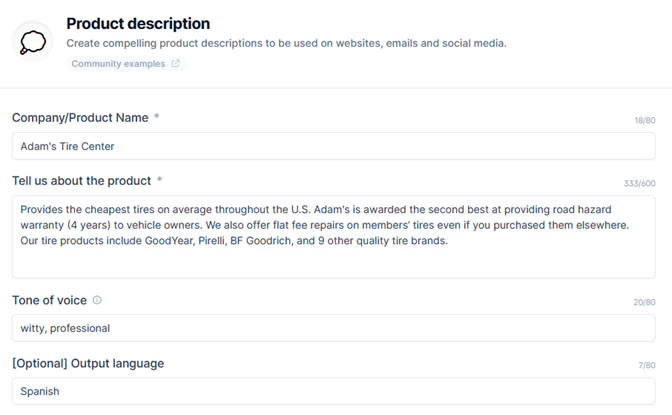

Product Description

The app comes with a special template for creating product descriptions. If you have a large inventory of product information to rewrite, this is the right tool to use. It even comes with an optional output language feature for translation, which is available in other templates too.

However, the language feature is limited. I tried Thai, Italian, and Japanese, and it generated only a few suggestions, some mixed with English. Same thing with Punjabi and Vietnamese. In other templates, it just kept making English suggestions. Filipino is also not recognized by the AI, which suggests there are other languages it cannot translate either. This feature obviously needs development. But it’s not the main feature, so I doubt they’ll make a lot of improvements.

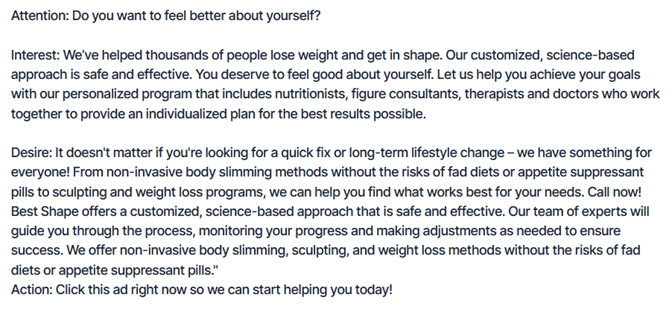

For this example, I used an imaginary tire center that offers products and services throughout the U.S. I specifically wrote that it’s the second most affordable tire center in the country. I asked for a professional and witty tone. I’m not at all fluent in Spanish, but I entered Spanish in the output language box.

Below is the first suggested copy in Spanish:

When translated through Google, it reads:

“Don’t think twice, Adam’s Tire Center is your best option because it offers the largest range of products for cars and wagons. Join our satisfied customers and insure your tires with the Road Hazard Warranty service. Call or visit our sales center in Miami, FL, where we are honored to help you.”

Obviously, I can’t comment much on the accuracy of the translation, though I certainly have doubts about how well writing in another language can capture certain styles and tones. But right now, what I’m more concerned with is the tendency to use superlatives that might not accurately fit the brand. Things like “we offer the largest range of products” should probably be tweaked to “we offer a wide range of products…” If your tire center does not offer the largest inventory, you should not be writing that. It also assumed a specific location, which prompts the writer to include the actual business location (this is a good suggestion). Again, the AI copy would benefit from fine-tuning to make it specific to your product or service.

Now, back to English. Here are three other content samples generated by Jasper:

The English AI-generated samples are not so bad. But in the last sample, there is a tendency toward hard sell terms like “unmatched in quality” that you need to watch out for. You can take the best parts and put them into one solid brand description. But again, these tend to be wordy and long. It would help to use the Hemingway app or Grammarly to make the descriptions tight and concise.

Content Improver

The Content Improver template helps you tweak the product or service descriptions you came up with. To show you how it works, I entered copy I wrote based on my edits of the tire center descriptions Jasper generated.

For this example, I entered “professional” and “witty” for tone of voice.

Suggested content from Jasper:

Looking at the suggestions, I’d say the first two can pretty much stand on their own. They are straightforward pieces of copy that address consumer needs with a direct call to action. Though the first one may sound a bit informal, it might fit the consumer demographic you are targeting. Finally, the last example gets a bit wordy but can be fixed with a couple of edits. The major issue is the number (555-5555), which the AI mistook for an address.

Marketing Angles

Besides churning out copy suggestions, Conversion.ai has a brainstorming tool. This basically takes your product or service and comes up with various campaign ideas to promote it. If you’re running out of promotional concepts, Jasper leverages your product’s features and strengths. I appreciate that it tried to come up with benefit-driven copy based on the example I entered.

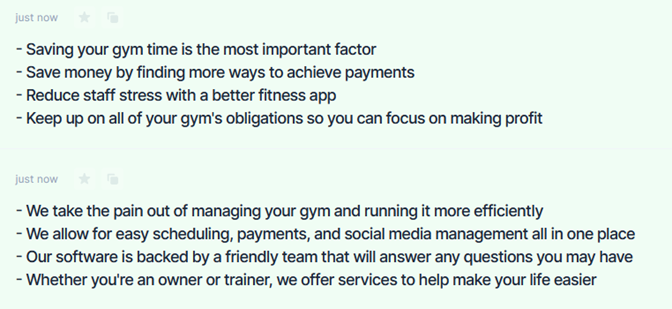

For this example, the product I used is gym management software. It helps gym owners manage activities and schedules and handle payments. The software aims to help gyms run more efficiently.

I personally find the following suggestions helpful in pushing the strengths of a product. I would definitely use this tool for brainstorming ideas. Here’s what Jasper generated:

Unique Value Propositions

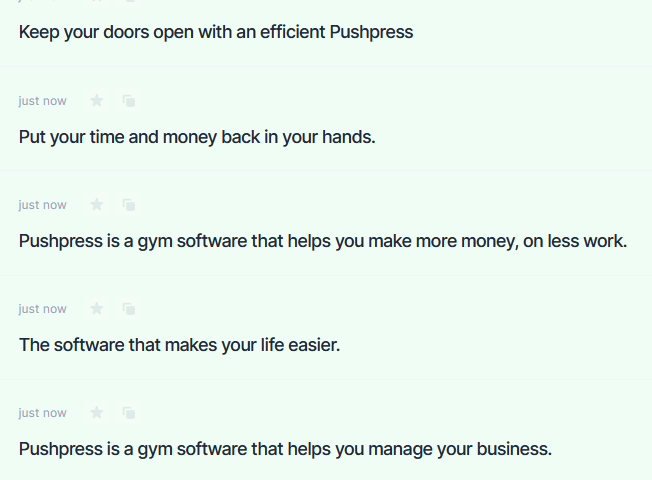

Another intriguing feature is the unique value proposition (UVP) template. A UVP is essentially a clear statement that describes the benefit your product offers. It also captures how you address your customers’ needs and what distinguishes you from the competition.

If you have a product or service, this template claims to generate copy that describes its unique advantage in a remarkable way. To test how this works, I used the previous example, the gym software. It came up with several statements that emphasized the product’s benefits. See Jasper’s suggestions below. Personally, I like the idea of software that helps me make more money with less work.

Feature Benefit

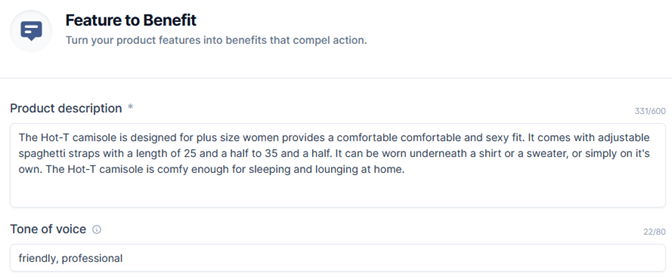

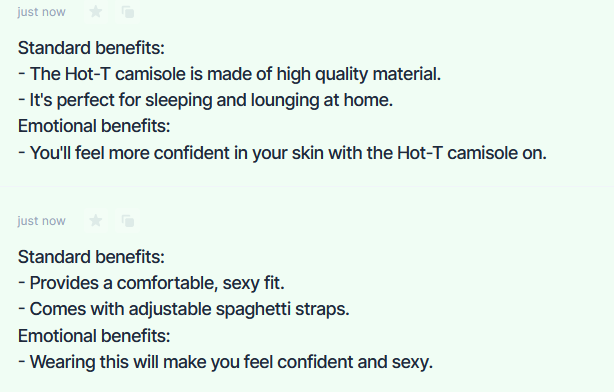

The feature benefit template comes up with a list of advantages offered by your product. For this example, the product is a camisole for plus-size women. You’ll see how it took the details in the paragraph and turned those features into bulleted benefits. It’s a useful tool if you want to break down your product’s unique selling points so you can further emphasize them in your campaign.

Persuasive Bullet Points

Another related function is the persuasive bullet points template, which is very similar to the feature benefit template. Personally, I think you’d use either this or the feature benefit template if you want to highlight product advantages in bullet points. The difference is that this template doesn’t categorize benefits as emotional or standard advantages.

Copy Headline and Sub-headline Templates

Conversion.ai also comes with copy headline and sub-headline templates. The company claims the AI is “trained with formulas from the world’s best copywriters.” It also claims to create “high-converting headlines” for businesses. At this point, the only way to know if it really produces high conversions is to see actual results. Right now, my review can’t prove any of that. But it would be interesting to hear about results from companies who have been using this software.

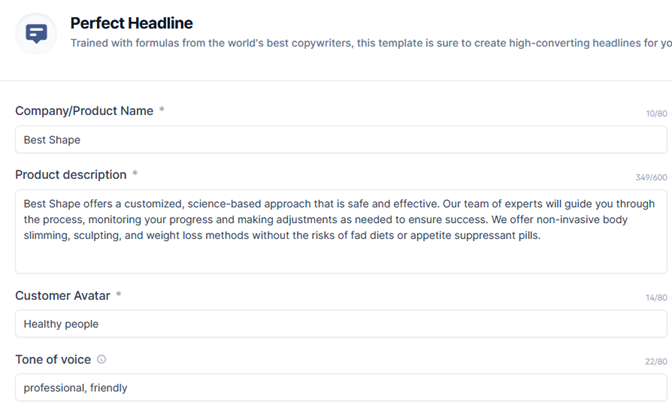

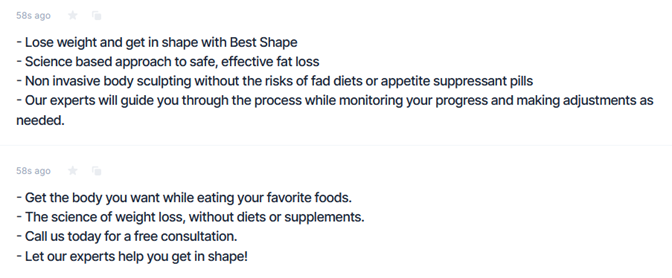

- Perfect Headline: For this template, I used the earlier example of a company that provides non-invasive weight loss services. You’ll see the product description I used, followed by the suggestions made by Jasper. I specifically liked the headline “Science-based approach to safe, effective fat loss.” It’s exactly the concept I was going for.

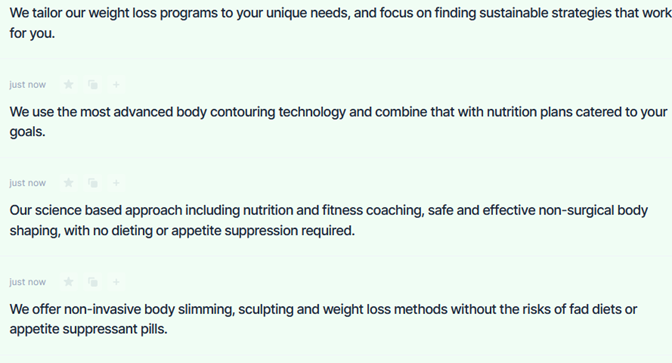

- Website Sub-headline: I used the same product description for the sub-headline, along with the headline generated by Jasper, “Science-based approach to safe, effective fat loss.” Of Jasper’s suggestions, I liked the last one, which emphasizes non-invasive slimming. It also tells consumers the procedure is safe. Though the suggestions tend to be wordy, I appreciate that they provide different ways to get your message across.

Sentence Expander

Another interesting feature is the sentence expander. It claims to make your sentence longer and more creative. I guess it should help you get to another thought if you have writer’s block. But I was wary of what kind of suggestions it might give. When I tried it, it turned out to be just another way to rewrite your sentence in a longer, more detailed way.

In any case, see my sentence below.

Here’s what Jasper generated:

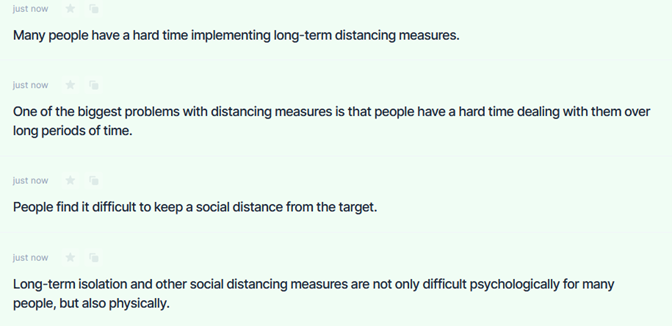

I’m actually not a fan of long-winded sentences. However, I do appreciate the extra details added by the AI. I could use these suggestions if I made further edits to them. But realistically, if I’m writing an article, I’d skip this and go directly to what I’m trying to say. That would save me time. If I wanted to talk about the negative psychological effects of social distancing, I’d write it out point by point. My idea of expansion is moving an argument forward, not merely adding more details to what was already said.

Creative Story

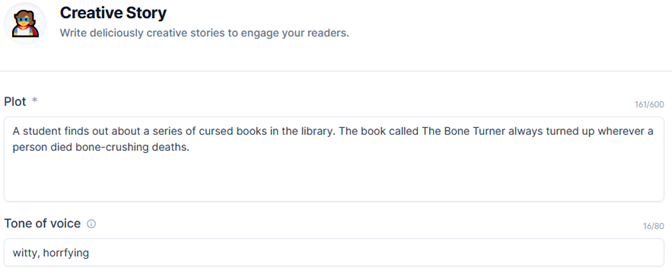

Here’s an interesting template I was curious to try. I wondered how Jasper would develop a two-sentence plot. It’s fascinating to see how an AI that uses “proven high conversion data” would suggest story development.

For my example, I took a horror story plot inspired by the Bone Turner from a popular horror podcast called The Magnus Archives. See my plot description and the suggestions made by Jasper.

Story suggestions by Jasper:

I have to say, these are very interesting ideas for an introduction. It’s also funny that it used the name “Jonathon,” because the actual name of the main character in The Magnus Archives is “Jonathan.” I kind of think that was on purpose, since the AI probably knows the Bone Turner is from a popular podcast.

In any case, I particularly liked the second suggestion. With some editing and fine-tuning, you could adjust the details to fit the story in your head. On the other hand, I’m wary that authors might rely too much on this to bridge plot gaps. While it’s amusing, plot twists and resolutions that aren’t forced are far more compelling to read. At this stage, I’m still not convinced the AI can write a story without contrived plot twists.

Email Subject Lines

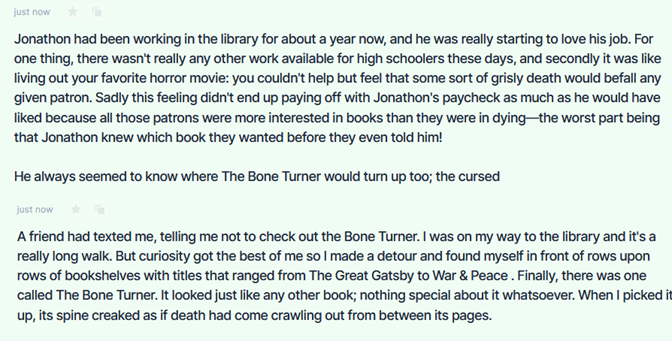

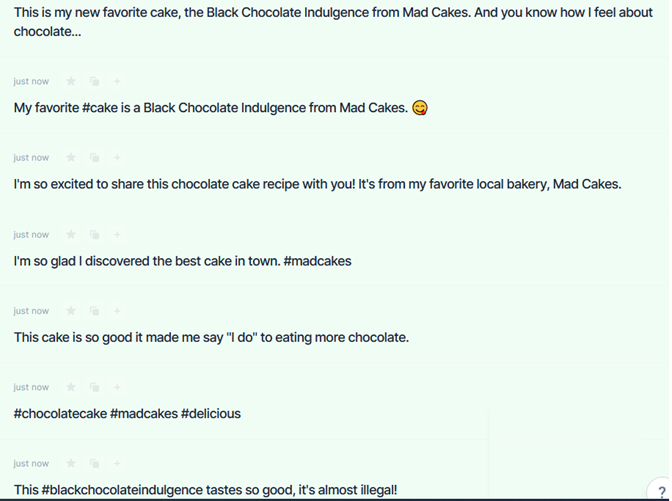

Besides creative writing tools, Conversion.ai also has templates for email marketing. This feature is made for businesses or individuals who want to promote products and services via email. The app claims to come up with catchy subject lines that get consumers to open your email. In this example, I used an imaginary cake shop that delivers throughout LA. Jasper came up with a long list of creative subject lines, and they were spot on for the example. Since I am a cake person, I’d likely read this kind of email.

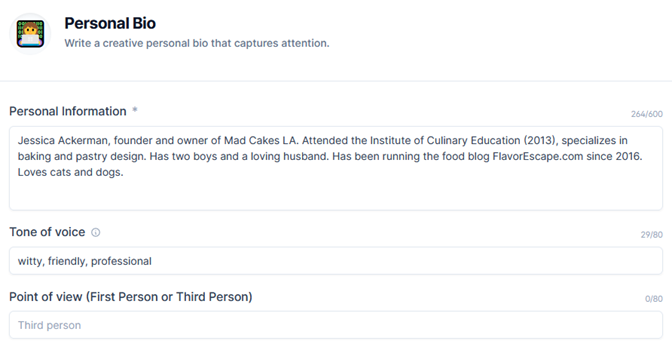

Personal and Company Bio

You can also generate creative personal and company bios through Jasper. If you’re running a personal blog or website, Jasper generates personal bios in first-person or third-person POV, whichever you are more comfortable with. I’m actually pleased with what the AI suggested. It’s a good start, because I find it hard to write about myself.

The example below is not me, of course. I made up Jessica Ackerman as the founder of Mad Cakes in LA.

Here’s what Jasper generated:

It does sound like a personalized bio, especially with the detail about cuddling with cats and dogs. Again, I’d edit it to be more specific about details. Other than that, I think it’s a good tool to use.

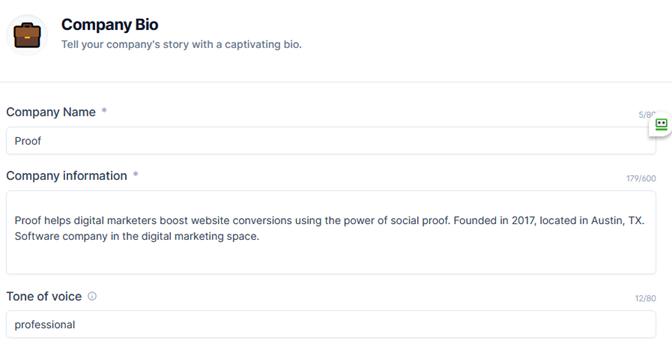

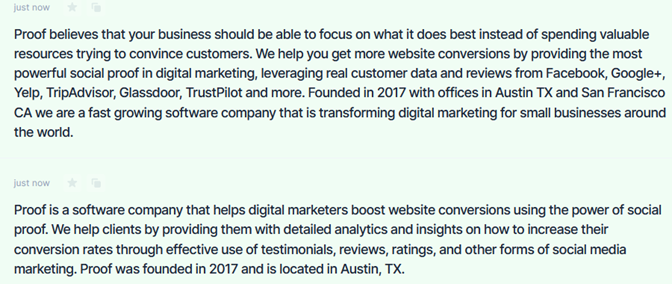

Next, Jasper also generates company bios that sound professional. I entered a three-sentence description of a company that boosts website conversion for businesses. I was surprised by how long the suggestions were. It also made up the names of clients the company has serviced (TripAdvisor, Yelp, etc.). Again, for particular information like this, it’s important to edit or remove it. Otherwise, you might publish copy with misleading details.

Suggestions from Jasper:

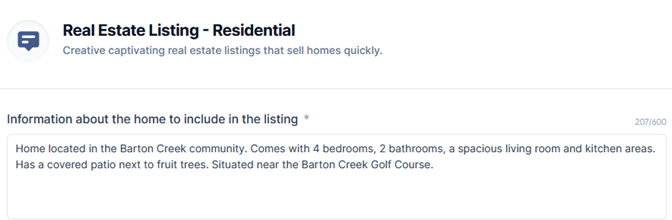

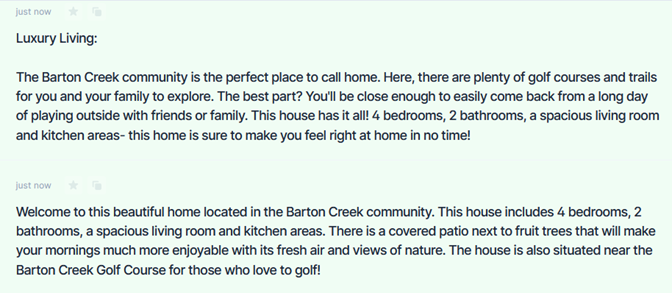

Real Estate Listing – Residential

You can use this template to create vivid, descriptive residential listings. It’s helpful for real estate agents and people who are planning to sell their property. The following shows information about a house for sale, followed by listing suggestions by Jasper.

Suggestions from Jasper:

It’s interesting how the suggested content appeals to the consumer’s idea of a perfect home. It tries to paint a picture of affluent living based solely on the golf course description I supplied. But again, for accuracy, the writer should edit these added details.

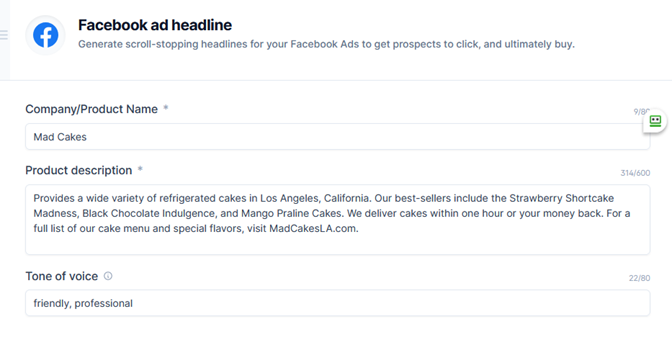

Templates for Specific Online Platforms

Besides articles and product or brand descriptions, Conversion.ai provides special writing templates for online platforms, including Facebook, Instagram, YouTube, Google, and Amazon. The AI’s content suggestions are based on posts and ads that have generated high traffic on these platforms. I think this is a good tool to use if you want an edge based on what already sells.

- Facebook Ad Headline: Makes catchy headlines for FB ads and claims to increase the chances of clicks that lead to sales.

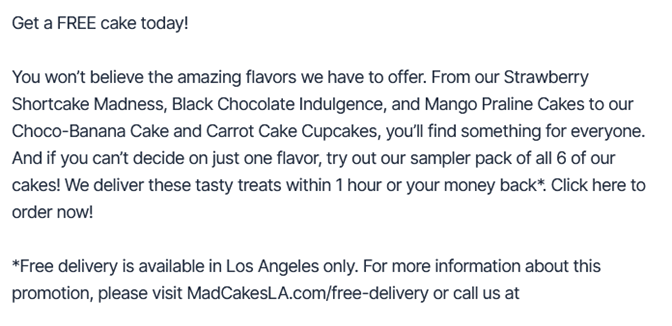

- Facebook Ad Primary Text: Claims to generate high-converting copy for an FB ad’s primary text section.

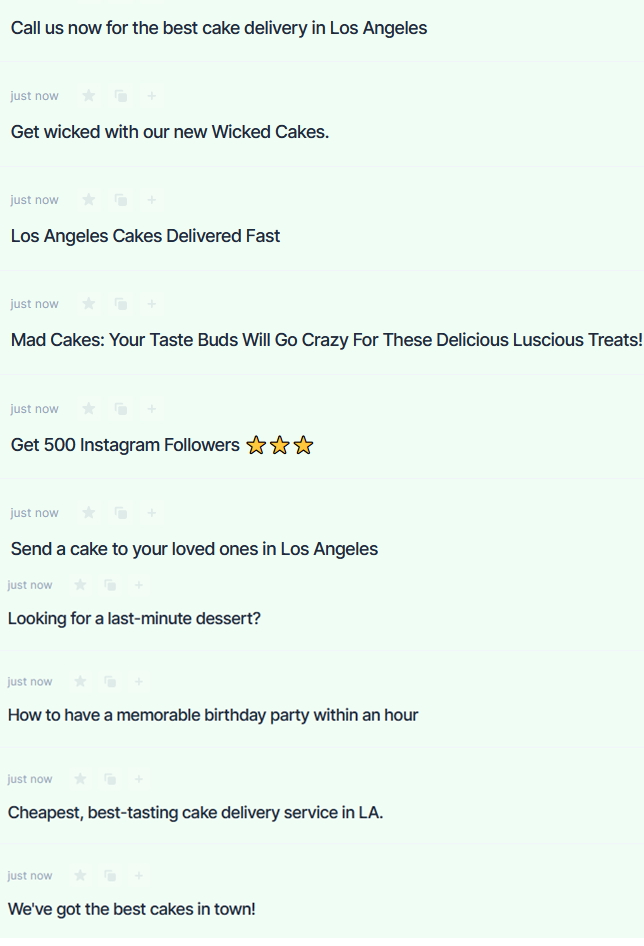

For the Facebook ad headline, my example is a cake shop that delivers a wide assortment of cakes in Los Angeles. It specifically mentions delivering cakes “within an hour or your money back.” Here’s the example and Jasper’s suggested content.

AI ad sample headlines:

I must say these sound like fun and friendly FB headlines. I’d personally like a last-minute dessert, and if I don’t have time to pick up a cake, I’d certainly like one delivered. I’m just not sure about “Get 500 Instagram Followers”; that suggestion is out of place. I’d use this tool for fresh and exciting FB headlines.

Here’s the AI sample for Facebook ad primary text:

Based on the FB text sample, the AI instantly suggested giving away free cake, and most of the generated samples headed in this direction. It didn’t just generate engaging copy; it likely showed you what other cake shops do to draw more customers. I think promos and free cake are a great marketing strategy. I also like that it suggested catchy hashtags. But again, I’d fix the wordy, adjective-ridden descriptions. With a little editing, the samples should read more smoothly. Other than that, it’s a fast way to come up with social media copy.

Photo Post Captions for Instagram

You can use the app for a company or store’s IG account. Here are some samples based on a photo of Mad Cakes’ Black Chocolate Indulgence. If you need ideas for your IG posts, this tool suggests simple, straightforward copy. Depending on your product or service, it suggests content that typically targets your customer base.

Video Writing Templates for YouTube

Next, Conversion.ai offers specialized templates for videos, specifically for platforms such as YouTube. I think you can also use the content if you’re posting on other video sites, although the suggestions are based on high-traffic YouTube content. It includes the following features:

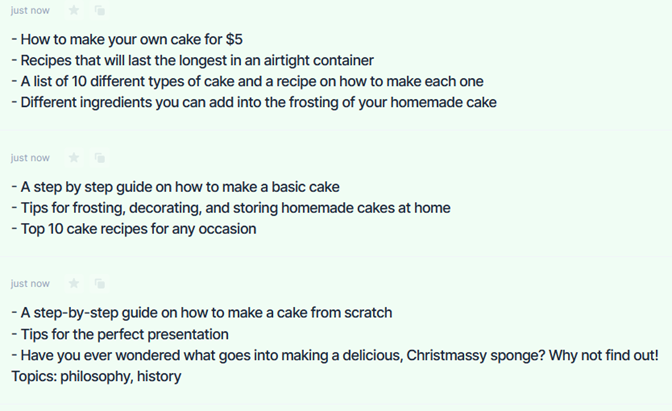

Video Topic Ideas: For brainstorming video concepts that rank well on YouTube. For example, say your initial topic is baking homemade cake. It’s a useful tool for finding out what people are actually interested in, and it gives you an idea of what to work on right off the bat. Here are the AI’s suggestions, which mention cake-baking video concepts many people look for:

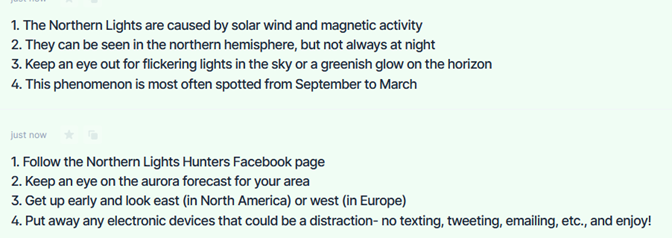

Video Script Outline: Helps make script outlines for any topic. However, it works better for how-to and listicle-type videos than for ones with a narrative. The example below is for how to spot the aurora borealis, or Northern Lights. From the AI suggestions, you can pick the best ideas to build your own outline. Apart from the more specific ones I posted below, I noticed many suggestions were too general. It’s still best to do your own research to make your video content more nuanced and unique. Otherwise, you may just parrot what other content creators have already done.

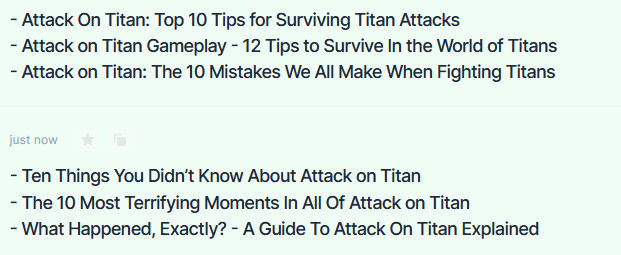

Video Titles: Like the other templates, there’s also a video title feature. As an example, many users on YouTube like to create content about shows or films. Suppose you want to make a feature about the anime Attack on Titan. The AI actually came up with pretty awesome titles and topics you can start researching. Since these are based on high-traffic fan searches, watch what’s already out there. This will help you come up with more unique insights about the show that haven’t been tackled yet. Again, focus on what would set your content apart from what’s already there.

Blog Post Templates

Conversion.ai provides templates that help you conceptualize blog posts for your brands. It has tools to help you brainstorm topic ideas and outline your content, and these suggestions are all based on high-ranking topics on Google. It also comes with features that help compose blog post introductions and conclusions.

- Blog Post Topic Ideas

- Blog Post Outline

- Blog Post Intro Paragraph

- Blog Post Conclusion Paragraph

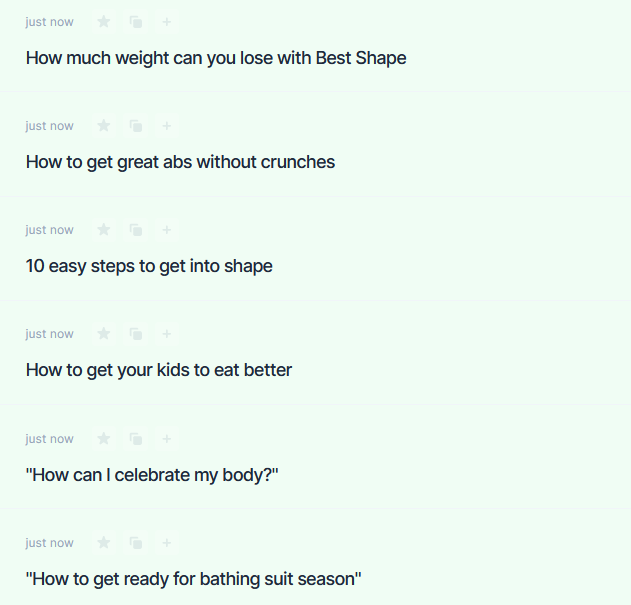

For the example, let’s focus on the topic template. I reused an earlier example, Best Shape, which is a made-up non-invasive weight loss service. See the AI’s suggestions below.

Jasper’s results show topics that trend around non-invasive weight loss methods, and trending topics in your market are always good to know. For blog topic ideas, I think Conversion.ai will be a really useful tool. It’s also worth using if you need help structuring your outline, especially if you have trouble with organization.

Personally, I think that once you have a few topics, you can start writing your post without the app. You won’t need it, especially if you already have an idea of what to write. It’s still better to do proper research than to rely on the app to add information to your post. As you’ve noticed, it tends to supply incorrect information, which you must diligently edit.

Would I Recommend This Software?

After crash-testing Conversion.ai, I would recommend this tool to agencies or individuals who deal with extensive online copywriting and product rewrites. They will benefit the most, since it cuts down the time-consuming process of writing product descriptions. I would also recommend it for businesses that run social media campaigns, including Google and Amazon ads. It will help generate and organize copy ideas faster, especially if you have a lot of products and services to promote. And because the AI suggestions are based on high-ranking topics, you get a better idea of what your client base is looking for. It can also enhance messaging concepts and help brainstorm new campaign ideas for a product or brand. Just remember to always edit the content suggestions.

On the other hand, I would not recommend this app for long-form writing, and I do not think it is ideal for any writing that requires a lot of research. Because the AI suggestions tend toward incorrect information, you’re better off researching current data on your own. It’s an interesting tool for writing stories, but I also worry authors might become too reliant on the app for plot ideas. There is a difference between carefully worded prose and the long-winded sentences this app composes. Human writers are still more precise in their expression, something the AI has yet to learn.

While it’s a good tool to have, the bottom line is, you still need to edit your content. It will help you structure your outline and compose your post. However, the impetus for writing and the direction it will take is still on you, the writer. My verdict? AI writing technology won’t fully replace humans anytime soon.

Update: This article was updated in May 2022 to reflect that Conversion.ai’s AI writing bot was renamed from Jarvis to Jasper. No other changes have been made since the original publication of this article.