Ad Networks - "Partners" Hoarding Publisher Data For Profit

Are the big networks trying to lock up their data?

It would appear that some big players are trying to muscle in between the user and the webmaster by limiting the webmaster's access to valuable statistical data.

The excellent SmackDown blog has a post about Google reportedly testing Ajax results in the main SERPs.

Sounds innocuous enough, right?

Trouble is, what happens to existing tools? Plugins? Rank checkers? Stats and other referral tracking packages? All tools that rely on Google passing data in order to work.

Many tool vendors would likely adapt, but as Michael points out, what happens if all the referral data shows as coming from Google.com i.e. no keyword data is passed?

Browsers do not include that data in the referrer string, and it is never sent to the server. Therefore, all referrals from a Google AJAX driven search currently make it look as if you are getting traffic from Google’s homepage itself. Now, while this kind of information showing up in your tracking programs might be quite a boost to the ego if you don’t know any better, and will work wonders for picking up women in bars (“guess who links to me from their homepage, baby!”), for actual keyword tracking it is of course utterly useless.
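To make the mechanics concrete, here is a rough sketch of what a stats package would see. It assumes the Ajax results keep the query after a "#" in the URL, which is presumably the "data" Michael is talking about; the two example URLs and both function names are made up for illustration and are not Google's actual URL format or any vendor's real code.

```python
from urllib.parse import parse_qs, urldefrag, urlsplit


def referrer_sent_by_browser(page_url):
    """Simulate the referrer a browser passes on: the #fragment is dropped."""
    return urldefrag(page_url).url


def keyword_from_referrer(referrer):
    """Return the search phrase from the referrer's ?q= parameter, or None."""
    params = parse_qs(urlsplit(referrer).query)
    values = params.get("q")
    return values[0] if values else None


# Hypothetical URLs: a classic results page and an Ajax-style one.
classic = "http://www.google.com/search?q=blue+widgets"
ajax = "http://www.google.com/#q=blue+widgets"

for page in (classic, ajax):
    referrer = referrer_sent_by_browser(page)
    print(referrer, "->", keyword_from_referrer(referrer))
```

Under those assumptions, the classic URL yields the keyword phrase, while the Ajax-style visit arrives with a referrer of just the homepage and no keyword at all.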

Perhaps the only place you'll be able to get this data is Google Analytics? Is this the next step - a lock-in?

It has happened before.

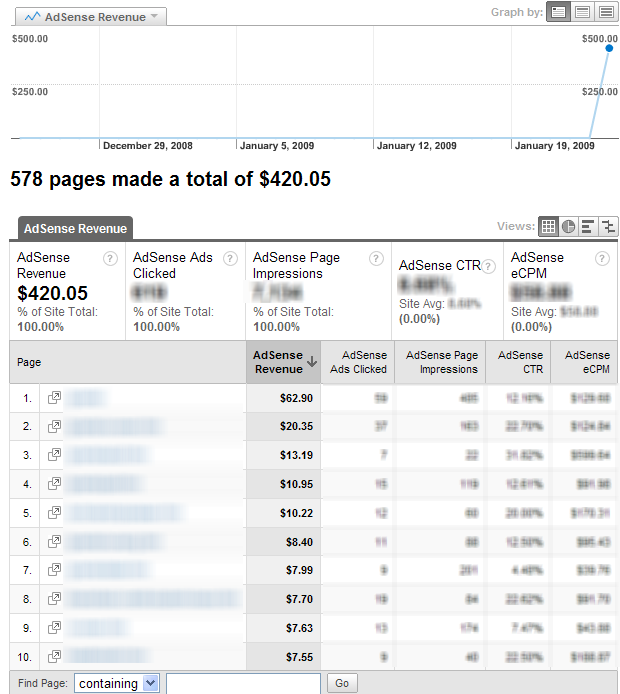

Remember the changes to Adsense? Google introduced a new form of tracking code that can't be tracked by third party tools. However, that data is available within Google Analytics.

This obviously puts other tracking vendors at a competitive disadvantage, and signals to the webmaster community just where the ownership of that data lies.

Data Lock In

There appears to be an emerging trend, of late, whereby networks are leveraging their power against the interests of individual webmasters in terms of data ownership. Having been locked out themselves for a few years, the middle men are trying to squeeze their way back in again.

Take a look at the new contracts of GroupM, the world's largest buyer of online media, as detailed in GroupM Revises Terms For All Online Ad Buys, Claims Data Is 'Confidential' on MediaPost:

The wording in GroupM's new T&Cs, which are attached to all the insertion orders and contracts it submits to online publishers beginning this year, amends the current industry standard by adding, the following: "Notwithstanding the foregoing or any other provision herein to the contrary, it is expressly agreed that all data generated or collected by Media Company in performing under this Agreement shall be deemed 'Confidential Information' of Agency/Advertiser......Experts familiar with online advertising contracts say the term is a smoking gun, because it raises a broader industry debate over who actually owns the data generated when an advertiser serves an ad on a publisher's page. Is it the advertiser's data? Is it the agency's data? Is it the publisher's data? Under the current industry standard, the data is considered "co-owned" by all sides of the process, but some believe the new GroupM wording seeks to shift the rights over data ownership exclusively to the advertiser and the agency.

The article also suggests that other ad providers may follow suit. What this may mean is that you can't leverage data in other ways. You might not even be able to collect it.

Whilst this issue has popped up again of late, it is nothing new. There has long been a battle for consumer data because it is so valuable. The ad networks can create a lot of valuable data as a by-product of their advertising placement, because they can leverage network effects and scale in the way the individual webmaster cannot. Naturally the next step is to lock it up and protect it.

The cost of protecting that data may come at the webmaster's expense. As the MediaPost article says, who does the data belong to? The publisher or the ad network? Both?

Traditionally, it's been both. But that might be about to change, if the above contract is anything to go by.

Forced Partnerships

Incidentally, other contracts really push the boat out when it comes to depriving webmasters of control. TechCrunch reported that the Glam Network, a large ad provider made up of advertising affiliates, includes this little clause in their contract:

10. Right of First Refusal

a. Notice. If at any time Affiliate proposes to sell, license, lease or otherwise transfer all or any portion of its interest in any of the Affiliate Websites, then Affiliate shall promptly give Glam written notice of Affiliate’s intention to sell....

Essentially, if you want to sell your website, and you've agreed to these terms, then Glam have first right of refusal on the sale! Nice.

What this all might lead to is less ownership, less control, and less flexibility for the individual webmaster when dealing with big networks.

Or perhaps, in the case of Google, they're going to find other ways to pass data and just haven't outlined how yet.

One to keep a close eye on, methinks...

Comments

Peter, not sure if you knew this, but when you say:

The "right of refusal" in that contract just means that they get the chance to match the buyers' price and purchase the site themselves if they want. Much less insidious than trying to exclusively own tracking data generated on your own site, imo. :)

But the problem with first right of refusal is that many companies will not try to buy websites that are under such condition for fear of having that website bought out by a competing company, and potential legal issues with buying the site.

If I have first right of refusal on a site then I would probably buy about 99% of those because I know that there is another buyer at that price, and I could always buy it...test how well I could monetize it, and then if it does not work out as well as hoped try to flip it for a small profit or break even.

In aggregate it is an opportunity at near risk-free testing.

I looked at the Glam network at one point, as someone I encountered was using it happily, but as I read the fine print, too many things struck me, like the right of first refusal on purchase, and as best as I could tell the application itself was a binding agreement - on my part, and I was signing up for a year of their traffic - whether or not it worked for me.

Too many things seemed one sided in the contract.

As it was 1am and I was tired when I was reading it, I decided not to proceed just then - and never felt inclined to go back.

Maybe it's just b/c this is happening and the MSN issues seem far away. Seems like Google has way too much power. Any ideas on how to prop up MSN search and make them competitive? Perhaps another idea would be for websites to create technology that can pull the referrer source through, or a plugin to a browser. Ugh....

If you want to make Live Search more competitive then you can...

This just increases the value of capturing data via your own on-site search. The referrer is only one way to discover what people are looking for, and it can be denied by individual surfers, or in this case by the use of Ajax. Get rid of Google custom search, and find a solution that gives you access to all of that query data whilst denying it to any over-mighty search engines.

If Google roll this out on a large scale, it's only a matter of time before many other engines follow suit. I don't see a flight to MSN or Yahoo! as a long-term alternative.

"Remember the changes to Adsense? Google introduced a new form of tracking code that can't be tracked by third party tools. However, that data is available within Google Analytics."

Hey Aaron, do you have a link that gives more info about that? I hadn't heard of that issue before.

Hi Matt

Long time no comment :)

The old AdSense code used to have a bunch of lines in it...but the new AdSense code has much fewer lines and can't easily be tracked by 3rd party tools like SiteSuperTracker...to use third party tools people have to know how to generate the old AdSense code.

For Analytics, Google gives people the opportunity to generate legacy code...but people have to use third party tools to do it with AdSense.

Matt,

I love AJAX, and I sincerely hope that this potential shift doesn't have ulterior motives.

But if it does, and you guys want to force people to use Google Analytics to get invaluable keyword data, then Google has gotten completely off track.

Want more analytics market share in a way you can be proud of? Then innovate - make it better than anything else out there. That's what you guys did with search, and it worked, right?

This seems to be inevitable, but also good for webmasters in the long run. It validates the data, first of all, which supports a market for it. The data is highly current, so as soon as the webmaster turns off third party access, that third party begins to suffer. How long will Glam last if webmasters decide en-masse that Glam is not a great choice?

Google has done the best job of trading value for data by offering Google Analytics. Still, webmasters are learning that all the fancy UI controls have finite value (mostly bundled into the convenience factor). As the market prices user data, Google will clearly have to keep adding value. I like that.

Of course Google knows this... hence knol and other research into hosting content on Google.

Funny to see this here today as I was just thinking of this topic after chatting with some data mining folks today.

It occurred to me that the response to the trend towards data commodification would be people bringing friction into the process. I envisioned folks creating scripts to make scraping their sites uneconomical (e.g. some kind of bot trap that used a lot of the scraper's server resources), but here we have two other approaches that are more clever.

Which goes back to my earlier post on data commodification and suggested takeaways - people building businesses around data treatment, verification and related value-adds will likely see a lot of growth here. I think Compete are moving in that direction and I wouldn't be surprised to see Spyfu upsell folks on a data consulting service.

Update: It appears the IT field/data warehousing business already sells data quality software and services. How long till that reaches the mktg dep't?

I think selling data is getting harder and harder though...Google and Microsoft are giving away a lot for free, and SEM Rush is an AMAZING service at the current pricepoint.

What are they thinking? They are not that great at search to begin with, so they should tone down their ego a bit. I'm in the process of writing a book titled "1,001 things I HATE about Google" and this is going to top the list.

for a company whose motto is "don't be evil", Google sure is BAD.
