Google Says "Let a TRILLION Subdomains Bloom"

Search is political.

Google has maintained that there were no exceptions to Panda & they couldn't provide personalized advice on it, but it turns out that if you can publicly position their "algorithm" as an abuse of power by a monopoly you will soon find 1:1 support coming to you.

The WSJ's Amir Efrati recently wrote:

In June, a top Google search engineer, Matt Cutts, wrote to Edmondson that he might want to try subdomains, among other things.

We know what will happen from that first bit of advice, in terms of new subdomains: ~~billions~~ trillions served.

What are the "among other things"?

We have no idea.

All we know is that it has been close to a half-year since Panda has been implemented, and in spite of massive capital investments virtually nobody has recovered.

A few years back Matt Cutts stated Google treats subdomains more like subfolders. Except, apparently that only applies to some parts of "the algorithm" and not others.

My personal preference on subdomains vs. subdirectories is that I usually prefer the convenience of subdirectories for most of my content. A subdomain can be useful to separate out content that is completely different. Google uses subdomains for distinct products such as news.google.com or maps.google.com, for example. If you’re a newer webmaster or SEO, I’d recommend using subdirectories until you start to feel pretty confident with the architecture of your site. At that point, you’ll be better equipped to make the right decision for your own site.

Even though subdirectories were the "preferred" default strategy, they are now the wrong strategy. What was once a "best practice" is now part of the problem, rather than part of the solution.

Not too long before Panda came out we were also told that we could leave it to GoogleBot to sort out duplicate content. A couple examples here and here. In those videos (from as recently as March 2010) are quotes like:

- "What we would typically do is pick what we think is the best copy of that page and keep it, and we would have the rest in our index but we wouldn't typically show it, so it is not the case that these other pages are penalized."

- "Typically, even if it is considered duplicate content, because the pages can be essentially completely identical, you normally don't need to worry about it, because it is not like we cause a penalty to happen on those other pages. It is just that we don't try to show them."

- I believe if you were to talk to our crawl and indexing team, they would normally say "look, let us crawl all the content & we will figure out what parts of the site are dupe (so which sub-trees are dupe) and we will combine that together."

- I would really try to let Google crawl the pages and see if we could figure out the dupes on our own.

Now people are furiously rewriting content, noindexing, blocking with robots.txt, using subdomains, etc.
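As a rough illustration of the "blocking with robots.txt" option, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The rules, paths, and the `is_crawlable` helper are hypothetical examples, not anything Google recommended; it only shows how a webmaster might check which URLs a given set of rules would keep a crawler away from.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an illustrative site.
# The blocked paths (/print/ and /tag/) are assumptions for this sketch.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
Disallow: /tag/
"""


def is_crawlable(url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules above would let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the robots.txt content as a list of lines,
    # so no network request is needed.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for candidate in (
        "https://example.com/articles/widget",
        "https://example.com/print/widget",
    ):
        print(candidate, is_crawlable(candidate))
```

Note that blocking a URL this way only keeps a compliant crawler from fetching it; it is a different mechanism from a noindex directive.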

Google's advice is equally self-contradicting and self-serving. Worse yet, it is both reactive and backwards looking.

You follow best practices. You get torched for it. You are deciding how many employees to fire & if you should simply file bankruptcy and be done with it. In spite of constantly being led astray by Google, you look to them for further guidance and you are either told to sit & spin, or are given abstract pablum about "quality."

Everything that is now "the right solution" is the exact opposite of the "best practices" from last year.

And the truth is, this sort of shift is common, because as soon as Google openly recommends something people take it to the Nth degree & find ways to exploit it, which forces Google to change. So the big problem here is not just that Google gives precise answers where broader context would be helpful, but also that they drastically and sharply change their algorithmic approach *without* updating their old suggestions (that are simply bad advice in the current marketplace).

It is why the distinction between a subdirectory and subdomain is both 100% arbitrary AND life changing.
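To make the distinction concrete, here is a small Python sketch that classifies a URL as living in a subdirectory or on a subdomain of a root domain. The `placement` function and the `example.com` root are assumptions made for illustration; the sketch says nothing about how Google itself groups URLs.

```python
from urllib.parse import urlsplit


def placement(url: str, root: str = "example.com") -> str:
    """Classify where a URL lives relative to a (hypothetical) root domain."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()

    # Same host (with or without www): the section is a subdirectory.
    if host == root or host == "www." + root:
        first = parts.path.strip("/").split("/")[0]
        return f"subdirectory:{first}" if first else "root"

    # Any other host ending in ".<root>" is a subdomain.
    if host.endswith("." + root):
        return "subdomain:" + host[: -len(root) - 1]

    return "external"


if __name__ == "__main__":
    print(placement("https://example.com/news/story"))   # subdirectory:news
    print(placement("https://news.example.com/story"))   # subdomain:news
```

The same "news" section gets a different label depending only on where it appears in the URL.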

Meanwhile select companies have direct access to top Google engineers to sort out problems, whereas the average webmaster is told to "sit and spin" and "increase quality."

The only ways to get clarity from Google on issues of importance are to:

- ignore what Google suggests & test what actually works, OR

- publicly position Google as a monopolist abusing their market position

Good to know!

Comments

I have a feeling you're just doing it for effect, but saying things like "Everything that is now "the right solution" is the exact opposite of the "best practices" from last year." is a bit extreme and not helping the cause.

For one, it's not true in the slightest and two, it's the kind of bad advice that freaks out people who know just enough about SEO to be dangerous.

In reality and in its most basic form, SEO's rules haven't changed much over the years. I know forums like SEOBook and SEOmoz and the like all love to sit around and squabble over the bizarre little details of SEO, but in reality, I get my clients 99% of the way there by just following the basics of good content, strong architecture, and targeted, organic inbound links... the rest are just the details.

I know, it would be easy to say, "but, but, the devil is in the details" and that can be true for some sites that are in amazingly competitive marketplaces where everybody is an SEO master, but most of the time, it's just overkill.

When I perform audits for my clients, they all want to believe that their SEO problems stem from some deep issue that can only be discovered by magical incantations and an expert with a Ph.D., only to discover that they don't get much organic traffic because they don't have any inbound links or content of any sort.

So, when you say that everything we know about SEO is dead and we have to start and test all over again, I'm going to have to call you out as a bit of a drama queen and send you back to the fundamentals of the craft.

Jeff @ Fang Digital

Subfolders were pushed as being superior to subdomains. Indeed, what we are being told right now is the *exact* opposite.

In the past we were also told that you wouldn't get penalized for duplicate content and if we had site structural issues that generally we could trust GoogleBot to sort it out. That exact same situation now causes sites to get torched.

Maybe you do not operate at the strategic level, and thus do not understand how strategic and fundamental the above issues are, or how drastic an arbitrary 50% or 60% plunge in search traffic FOR A HALF YEAR is to a business, but to those who are not *actually ignorant* of the magnitude of change & the arbitrary nature of it, the above stuff is anything but trivial.

Some of our customers were impacted by it. Tons of people lost their jobs & if you ask any of those folks I bet they will explain the changes as being a bit more than "no big deal."

Nice try, but wrong.

Let me start off by saying that I actually agree with the bones of this article; one of the major issues dogging SEO these days is that SEOers keep looking for the chink in Google's armor and then stab the hell out of it until Google puts up a new piece of armor to cover the weakness. Of course, when that happens, SEOs take to the street claiming "Why, O lord! Why hast thou forsaken me?!" when they had to know what they were doing was wrong.

Anyway, to answer your question, the factually incorrect part is where you say, "EVERYTHING that is now "the right solution" is the exact opposite of the "best practices" from last year." because it isn't everything. That sort of hyperbole doesn't belong in these kinds of discussions, hence the calling out as a drama queen.

I just got done reading the book "True Grit" (the one the movies were tightly based on, great read btw) and Tom Chaney, the bad guy they spend the entire book and movies chasing because he killed the main character's father, reminds me a lot of SEOs. Once they catch up to him, he gets shot by Mattie Ross and even the other members of his gang turn on him, only for him to say, "Everything is against me."

Well, everything isn't against you; everything that was a best practice hasn't changed, in fact, none of it has. You claim that I may not be as strategic as you, which is wildly incorrect. My strategies are far and wide and varied by the client, but let me tell you, my greatest strategy when it comes to Google is, "don't risk it." Sure, some of your readers may claim I'm sucking on the teat (or other things) of Matt Cutts and Google, but I can tell you this, I've never had a client or a company I've worked for lose it all with an algorithm change because of that strategy.

Matt Cutts never said that duplicate content was acceptable, he said that Google would be the one to sort it out if it was there... that was a warning. When I advise my clients and employers on duplicate content issues, it's not to tell them that they will be penalized, but that Google will end up making the decision on which page to use, so you better clean up the mess that is causing the dupe content before Google does. Why on Earth would anybody think that duplicate content would be OK?

Heavy SEO debates always seem to fall back to a "spirit of the law" vs. the "letter of the law" debate. SEOs that claim they have secret tricks and such have usually just found a chink in the armor that Google hasn't covered up yet because the letter of the law doesn't say it's a bad thing. Yet, when Google fixes these things, those SEOs stand about yelling, "What? You said!" like a child that pushed the limit of a household rule. Meanwhile, the spirit of the law was always about great content.

Cutts is abstract for the same reason I was always abstract with graphic designers, because if you are too restrictive, it doesn't allow for creativity. This can be frustrating at times, I know, but in the end, if they were equally restrictive, you would complain about that as well.

Anyway, I don't have a tight way to wrap this up and have actual SEO work to do, so I'll say, you are correct, people abusing the vagueness of SEO is screwing it up for everybody, but no, everything is not against you.

Right, but just because one person does something opportunistic and shifty doesn't make it right that someone else has to pay for it.

Just because she was wearing a short skirt doesn't mean she had it coming.

Well...let us evaluate what Google has suggested as solutions for Panda

So sure...stuff has only mostly changed.

The useless platitudes are consistent, and the useful specifics are the exact opposite of what has been doled out at past sermons.

Outside of fraud, almost *any* business investment comes down to evaluating risk and reward.

To avoid the bulk of risks is also to avoid the bulk of rewards.

Have you ever built any widely successful affiliate sites from scratch?

Are you saying that you have never had a single significant down month due to a Google algorithmic anomaly? (I have even seen sites with hundreds of unique linking domains simply disappear from the index and re-appear a couple days later...they did nothing wrong, Google just had a bug).

And, in a future update, when that changes, how righteous will you feel when others are lecturing you about how you risked it?

The only way to avoid failure with 100% certainty is to avoid success.

His VERY FIRST SUGGESTION was to let Googlebot sort it out & then only worry about it if you were having issues after that.

So, yes, he was saying that duplicate content was acceptable.

BECAUSE, IF THEY ACTUALLY LISTENED TO WHAT MATT SAID, HE SAID "I would really try to let Google crawl the pages and see if we could figure out the dupes on our own."

Again, back to the woman who was raped who had the indecency to wear a short skirt.

She didn't deserve it.

A lot of the sites that were torched were NOT some SEOs pushing the limits, but rather small mom and pop webmasters.

In spite of Panda I am still doing quite well. I never claimed that everyone or everything was out against me. Nice misdirection though!

Rather I think Google is pro-big business and anti-small business. At Affiliate Summit they have gone so far as highlighting how affiliates are viewed as unneeded duplication and such. But it is not only the affiliates who got whacked. Some of the small businesses with ecommerce sites who are our customers took it up the ass because Google funded eHow's spam. I am not so much pissed off for me, as I am for them. Thanks for caring though!

First, apologies... I am new to this forum and don't know the cool tricks for quotes, etc., so forgive me if this is harder to read than your own...

"Right, but just because one person does something opportunistic and shifty doesn't make it right that someone else has to pay for it."

Sure they do, if that other person was doing the same bad thing that the shifty person was doing. It may not be intended, but they still did it... that's the difference between murder and manslaughter, but in both cases, somebody ends up dead.

"Just because she was wearing a short skirt doesn't mean she had it coming."

Absolutely, but that actually doesn't relate here. We're talking about people who have actually broken one of Google's rules. While I was working at IAC, one of the properties there approached Google while we were all there for a big meeting and claimed that they were being treated unfairly in the rankings (or had even been banned) when they weren't doing anything wrong. One of the Google engineers took a minute and showed her that she was breaking one of their policies (I think it was a gateway page or something) and Google absolutely should have banned her. Just because the rep from the company had no idea that she was breaking the rules, doesn't mean the rule was any less broken.

"Well...let us evaluate what Google has suggested as solutions for Panda"

Again, an issue of semantics. You said everything, not everything to do with Panda (and that probably isn't even totally true either...)

"Outside of fraud, almost *any* business investment comes down to evaluating risk and reward.

To avoid the bulk of risks is also to avoid the bulk of rewards."

True, but in any instance, if you take the risk and fail, you don't get to blame the system, it was you that took the risk.

"Have you ever built any widely successful affiliate sites from scratch?"

No, I avoid affiliate work like the plague. While you guys do a ton of intricate SEO work, it is not representative of the majority of what's out there.

"Are you saying that you have never had a single significant down month due to a Google algorithmic anomaly? (I have even seen sites with hundreds of unique linking domains simply disappear from the index and re-appear a couple days later...they did nothing wrong, Google just had a bug)."

Of course, back in the old days (I've been doing this for a while) when there was still a "Dance" happening, etc., but anymore, I rarely experience that kind of trouble with the employers and clients I work for.

"And, in a future update, when that changes, how righteous will you feel when others are lecturing you about how you risked it?"

What an odd statement... but, I think I see your point, but here's the real deal. What I will realize is that, if I or any of my clients ever get dropped after an algo change, I will rush out to learn what I was doing wrong or what has changed and then go fix it and hope for the best... I will not lament over the change and claim that I or anybody else is getting picked on or treated unfairly.

"The only way to avoid failure with 100% certainty is to avoid success."

Great fortune cookie line, but that's not what is happening here.

"His VERY FIRST SUGGESTION was to let Googlebot sort it out & then only worry about it if you were having issues after that."

Correct, but he still never said that it was acceptable. It has been long understood that duplicate content is an issue in SEO and I have always advised clients to fix it with a quickness.

"So, yes, he was saying that duplicate content was acceptable."

Nope, he just said that the bot would sort it out for you if you didn't handle it, that's not the same thing.

"BECAUSE, IF THEY ACTUALLY LISTENED TO WHAT MATT SAID, HE SAID "I would really try to let Google crawl the pages and see if we could figure out the dupes on our own.""

Frankly, that would scare the hell out of me... I want what I want to show up, not for Google to decide.

"Again, back to the woman who was raped who had the indecency to wear a short skirt. She didn't deserve it. A lot of the sites that were torched were NOT some SEOs pushing the limits, but rather small mom and pop webmasters."

They may be mom and pop and didn't realize they were doing something wrong, but they were still doing something wrong. Again, intent doesn't factor into this puzzle. You get popped, you fix it and hope for the best, not ask for leniency.

"In spite of Panda I am still doing quite well. I never claimed that everyone or everything was out against me. Nice misdirection though!"

No misdirection here, I was quoting you.

"Rather I think Google is pro-big business and anti-small business. At Affiliate Summit they have gone so far as highlighting how affiliates are viewed as unneeded duplication and such. But it is not only the affiliates who got whacked. Some of the small businesses with ecommerce sites who are our customers took it up the ass because Google funded eHow's spam. I am not so much pissed off for me, as I am for them. Thanks for caring though!"

I would think that would be a rather myopic strategy on their part. Big business certainly helps pay the bills, but small businesses are a big part of their revenue plans for sure.

And let's be clear about affiliates, most of the time, affiliates are unnecessary duplication. Occasionally you'll get one that puts a genuine effort into promoting a brand or product using original content, etc., but most of the time, it's just screen-scraped crap.

I'm sure many a business, big and small, got slapped by Panda, and they were because they didn't fall into line with the way the new algo works. So adapt and move on or get out of the way.

but I feel it will just end up in some sort of infinite regression.

One thing I will note about IAC is that the spam garbage auto-generated scraped answer pages on Ask.com were one of the biggest beneficiaries of the Panda update. Their other sites with lesser brand signals (teoma, directhit, life123) all took a major swan dive in traffic...without the brand signal Ask.com would have got hit hard as well (rather than seeing their Google referrals up more than 20%).

So in a very real & very direct sense that is 1 company doing the same spam garbage across a portfolio of websites & getting some sites torched for it, while the other got a ranking boost. In a very real sense that shows that doing the exact same things can lead to different outcomes based on either who is doing it, how they are doing it, where they are doing it, or what other mitigating or circumstantial factors are associated with the situation.

In terms of this...

...again I disagree with it.

In one of the other replies I highlighted how some webmasters got torched because people were buying links *for* them, in an attempt to get them torched.

Likewise a site can do nothing wrong & still have similar footprints to some spam sites simply because a lot of spam sites do things like steal their content & perhaps create other signals that are somehow aligned with their site. So the specific webmaster could have done nothing spammy at all (for years) and simply got blown away because Google funded eHow & other exploitative SEO plays.

If you had a portfolio of small ecommerce customers and just saw 25% of their sites get destroyed you would understand the idea of having similar profiles without going out of your way to try to be spammy. I think most likely you haven't studied the algorithm anywhere near as much as I have.

Also I think there is some level of presumption that people are just whining...totally not the case. The reason some people are complaining is the lack of reassessments & the uneven playing field. I likely have put at least 100+ hours of study & 1,000+ hours of labor into helping client sites overcome some of the Panda issues.

Normally I agree with what you have to say and I'm right there with you holding a pitchfork ready to move in and attack, but I think you may be reading too much into something here.

Matt's post from 2007 comes across to me as more of a recommendation from a technical standpoint and not having to do with anything relating to ranking/value. Prior to that, it was a piece of cake to own most of a page in the SERPs by having a domain and several similar subdomains. When they made that change, I think his point was that they aren't going to show as many results from the same domain so it wouldn't matter as much to have it broken out. I think he assumes that most people can't handle properly setting up a subdomain so he recommended subfolders (although that's sort of outdated too because with so much adoption of WordPress, subfolders aren't really what they used to be). I've always been a fan of subdomains. I've used them to reset penalties (along with rebuilding a better site to go on that subdomain), separate topically different sections of a site, or just to make things easier technically when a section needed to be on a separate server.

I think the situation with HubPages is that they have such a huge mix of crap added in with the good stuff that it's probably easier to separate the good stuff out with subdomains (which have been known to be treated almost as different as having separate domains). IMO, what it does is it allows easier filtering of the bad content. I don't think that has changed at all...at least not from what I've seen in the past few years. I see that as a way to "increase quality." Other options could be to just delete the crap content, but that's likely more difficult to do because it's a guessing game as to what Google considers to be good/bad.

Maybe I'm just not following what you're getting at on this...if I'm off base, feel free to remove my comment :)

And to find out that bit of information, one would need to presume that Matt's advice about it being treated like 1 site had some holes in it. And if one knew that, then indeed that would be a preference toward subdomains over subfolders. Which, incidentally, is the exact opposite of what Matt stated his preference to be.

He was promoting his preference as though it was from the webmaster's position, but upon further inspection, it appears that preference was more from a search engineer's perspective.

Once again, Matt's post was saying that they were treating subdomains like subfolders. Unless you assumed Google's advice was wrong & tested it (and/or had a site where one part got clipped & were able to figure out why), you wouldn't know this. And that means anyone taking their advice at face value is likely operating at a significant disadvantage, which was my whole point.

Now I get where you're going with it. I guess I'm just used to ignoring Matt's advice and basing my decisions on what I've actually seen :)

With the explosion of APIs there is going to be a lot more duplicate content. So the question for businesses out there is how can they add value to that content?

For me, the main problem is that "adding value" is still only done on a page-by-page basis, whereas I think that "duplicate content" fetched from an API and displayed on another site should have the whole site taken into consideration (context) and not just one single page vs another.

Google really needs to clarify how it addresses duplicate content, because the number of companies that rehash other people's data is only going to increase.

@ BrianL and FangDigital

Perhaps you haven't experienced losing nearly all your traffic, or seen clients who kept to the straight and narrow penalized for sticking to the rules religiously?!

The fact is that you can't trust Google, this Panda update wiped out hundreds of thousands of webmasters that had only bowed to Google throughout their history.

Google's business model is built upon THEIR content - they are the people who were first in the SERPs 10 years ago, helping make Google Google, and Google has just ass F*~ked them now as a thank you.

You can't trust Google - either build a business that doesn't rely on their traffic or abuse their algo. Simple.

All you ass kissers and Google c*&k suckers will eventually find yourselves broke and we'll be laughing.

They keep saying build "quality" WTF does quality mean, it's all relative and is a meaningless word. Eugh.

Better get back to spamming than trying to enlighten such ignorant people.

C

The absurd issue with that bit is that when J.C. Penney had their rankings restored (after 90 days) Matt said that the penalty period was appropriate & Google did not want to appear vindictive. That implied that a penalty for longer than 3 months would be vindictive.

Yet those who did not violate the guidelines who were clipped by Panda have been penalized for about twice as long so far.

That's because in Google's eyes, Panda wasn't a penalty- it was a restructuring (to favor Fortune 500s and Google properties no less!).

I don't suspect many sites torched in Panda will EVER make it back. Google used them as a stepping stone and now they've been dumped in the trash.

No worries though- if you DON'T want a Google-owned site, Wikipedia, or a Fortune 500 result then just start your search on page 30 of the results (pages 2-10 reserved for content farms; pages 11-29 reserved for spam).

What would be absurd is if he removed the penalty from JC Penney and they hadn't fixed what was causing the issue (poor and paid inbound links, which I had heard they did).

If you're still being "penalized" by Panda, then you just didn't fix the issues.

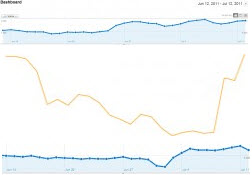

Google decides when to manually recalculate Panda. I haven't seen (m)any public credible claims of recovery outside of HubPages where they showed both the domain name and the analytics screenshots. So far all other claims that I have seen (except for 2) only gave the domain name or a graph or some abstract statement of growth connected to neither.

Over 10,000 websites were torched by Panda.

Webmasters have collectively invested $10,000,000's (or more) modifying their sites since then, and yet the recovery rate is well below 1%.

The only somewhat credible recovery claims where the person making them is being quite transparent have been:

That is not to say there were no other recoveries, but that they are quite rare. And this is nearly a half-year later.

Keep in mind too that Matt was suggesting that penalizing for more than 90 days for intentionally breaking the guidelines could be seen as vindictive...well twice as long for not intentionally breaking them would certainly qualify as being vindictive then.

Yes... again, if you're still getting dinged, you're probably not doing something right.

Trust me, if you were popped for any of the major algo changes, it was for a reason... you weren't "following the rules".

Please give me a list of these magical "rules". The algo is closed and Google themselves say they won't ever reveal the details because of "bad actors".

I guess scrapers are still outranking me for my own content (even when there's a link back to me in the stolen content) because Google has it all figured out.

I wouldn't be here, I'd be working more because I had all the answers.

1.) I have seen sites just disappear from the index for a few days, even though they were widely linked to. Do you remember last October when Google stated they had crawling & indexing issues? There were issues for tons of webmasters.

2.) There have been cases of competitors buying links for a competitor & getting their site torched. I know because I used to be friends with the #1 link broker and he told me about it!

3.) A lot of the sites that were hit by Panda were small business ecommerce sites that were not doing anything particularly spammy. The only things they were guilty of were carrying a lot of products & not being aggressive enough at marketing to build up enough signals of trust to carry their site size in Google.

4.) When the Google position #6 penalty happened tons of people said I was full of crap. Matt Cutts publicly denied it. More people said I was full of crap. A few weeks later Matt said they figured out what it was and fixed it. He didn't admit to it existing until only after it no longer existed. My reputation got dragged through the mud by ignorant people in my trade who thought they were intellectually superior, even while they were completely ignorant about what was going on & I was once again right.

5.) Of course there are false positives in any major algorithmic change. Google never makes the perfect algorithm, rather they are trying to incrementally improve. Such efforts require them to measure the wins vs the losses. Anything they do that creates major wins will sometimes create some other losses. Some examples on this front are like accidentally localizing UK search results to Australia, accidentally dropping all .info domain names from their index, etc.

And some trade offs are not accidental, but are more calculated. When Google torched .co.cc they were willing to throw away a few of the good subdomains to get rid of all the bad ones.

They make about 500 algorithmic changes a year & test an order of magnitude more than that. There will be some errors in all that change.

6.) The absolutist statements you make are only so discomforting because they are so uninformed. You might want to work on that.

Yes, there have been plenty of cases where rules were broken and the rule breaker wins... it happens, the algo isn't perfect and can't detect everything (like paid links), but sorry, that doesn't make me "full of crap" as you charmingly put it.

I know you want perfect out of the algo, but you're just not going to get it. Maybe one day when my grandchildren are using Google, the results will be perfect, but I doubt it because humans will still be behind the programming and black hats will still be trying to eff with the system.

Just a slight aside. Cutts is like Bernanke at the Fed. There is so much money at stake that what he says has huge weight and needs to be dissected and analysed with great care and attention. People are looking for direction to get out of Panda Hell. Is Cutts now saying subdomains are the way? The stakes are high. I think people are right to be indignant over changes. I think they are trying to clean things up: big brand in, small brands out. Nice and clean. From Google's perspective this just makes sense. Big brands = big advertising dollars.

"Don't be evil." Now that is funny!

This whole Panda thing makes me pretty uneasy. I'm still not completely sold!

He's always been full of **it. I don't read his blog and I'd never argue with him or his followers because the nail that sticks out gets the hammer.

I wouldn't get too excited about the weighting they put on subdomains vs subdirectories because that's something that's flipped and flopped around in the past.

The truth is that Google uses the same kind of techniques that hedge funds use when they build their ranking function. Let's say that next year they want to put a negative weight on PageRank but still get rankings that look a lot like they do now. They can do it. They can punch the negative weight into their machine learning algorithm, which will construct a countervailing factor that correlates strongly with PageRank.

For a long time, Google's secret weapon has been that they can make changes faster than SEOs can adapt to changes -- there's no "secret" in the algorithm that's irreplaceable. Everything is designed to prevent you from applying the "make little changes and measure" approach that works for PPC. What works? Search engine compatibility, quality content (or at least the perception of quality) and massive power and scale that overwhelms your opposition.

I have no doubt that Google cares very little for webmasters and small site owners. They are out to control everything and anything.

It seems that my site has also been torched this week. On Tuesday I had over 4,800 visitors, on Wednesday 3,000, and on Thursday 2,500. Now, dozens of my pages have disappeared or dropped several pages in search. I am not an aggressive link builder and have never bought or sold any links. All the while, the site that is scraping my feed is still ranking. I don't know why.

Oh yeah, he has AdSense ads above the fold. Is this now a ranking factor?

Does anyone know whether there was another Panda update this week, or is it just me?

Just a quick one: the problem with subdomains is that they are treated as completely different sites, so you need to apply a backlink strategy to each one rather than concentrating all your efforts on the main domain. For this reason I choose subdirectories!

...it appears to be working, at least thus far.

@Fangdigital - your point about the hyperbole was salient - though I'd counter that Aaron is a savvy blogger and uses his literary license to make some very good points herein.

Where I have to jump in with both feet is your myopic assertion that those who were pandalized somehow deserve it for not 'following the rules'. Equally naive is your assertion that they simply need to step in line and Google will once again shine on them.

I've been playing this game for a while and have never seen a dynamic as one-sided as Panda. It's less about compliance, and more about Google changing the entire game to favor those with the most chips. And while the net impact has been largely positive for some consumers and many large publishers, they've thrown out many, many babies with the bathwater and provided very little recourse other than torching and starting from scratch. And. They. Don't. Really. Care.

They can survive without the losers and you won't hear a single complaint from big media (hmmm, wonder why?).

This time it's different. Seriously. And you are supremely lucky to sit on your condescending perch and tell us how we got what we deserved.

"Its less about compliance, and more about Google changing the entire game to favor those with the most chips."

That is perhaps the most salient bit right there. Sure other context may be helpful, but that line describes better than any others what the Panda update was in a single sentence.

Just for the record, Matt was very clear in saying that what happened at Cult Of Mac was not a Panda recovery. He suggested that they MAY have had a large increase in offsetting traffic for other reasons but they were not whitelisted or otherwise able to remove the panda quality score issues.

Given what has turned out to be the manual/incremental nature of Panda updates, I think he is pretty much telling the truth here, so I would remove Cult Of Mac from the list of sites that have recovered.

Alan Bleiweiss reports having had a site recover with Panda 2.2, but that is the only anecdote I have seen about sites recovering that I have any reason to believe is credible beyond what Aaron is reporting for HubSpot.

...even more rare.

I have been told of a few privately as well. But it is still very low single digit % at the very highest. Likely significantly under 1%!

Thanks for mentioning that Jonah!

Hi,

Thanks. I have been doing SEO since the beginning, and learned on the forums later called i-search. A few times I have read a post or watched a video from M. Cutts. I never minded it; I only took it as input for my strategies in the SEO chess game.

My summary: "It's less about compliance, and more about Google changing the entire game to favor those with the most chips."

That is the way Google has been going for many years, and it is why "brand" has been getting much more weight and, along with it, social signals more recently. Google is about money, not about the best search experience. Life is changing; there is no justice in capitalism. Google is serving its clients, and the clients are measured by money.

So take care and use common sense to build your site and your relationships around it. It must be real; you cannot fake it. Google is not evil. It is only a reflection of how society functions nowadays: taking the money from the people and giving it to the rich.

Take care and greetings from Hamburg

Hi,

I mean, at the end: ... taking the money from the people and giving it to the rich, i.e. high society.

Thanks for the great discussion Aaron.

Yes Fang, all of us who were destroyed by panda deserved it, and also we deserve to be bankrupt, to be outcasts forever, because we're obviously too stupid to fix our little problem, even after hundreds or thousands of changes, and at least 1,000 hours of trying.

So what did the smart players do? They just went on to the next google game, but what about those of us who had an established small brand? Well, we're still slugging away. You don't put 10 years into something and just throw it away because some uncaring monopoly comes along and says you're doing it all wrong, do it our way or we'll destroy you, and by the way, we're not going to tell you what's wrong, or even what area we don't like about what you're doing.

I have a client who runs syndicated content for their clients on subdomains. They came to me because of their new concerns over duplicate content. I was just wondering what the current thoughts are on the subdomain duplicate content issue. Thanks!

...thus it wouldn't make much sense for me to offer it for free on a paid project you are working on (many other sites mostly sell tools, but our main offering is knowledge that we share in our member community).

However it is worth saying that generally a lot of the more exploitative subdomain strategies that were used in response to Panda have been closed off. Beyond that...one really needs to know a lot about the project to plot the best path forward...there isn't a universal best answer.

Thanks so much for your response. I completely understand and honestly didn't expect advice. I was really just trying to get a feel for others' experiences with subdomains in a post-Panda world. My client's subdomains were not created in response to Panda; they predate it. I was just wondering if anyone else had any good or bad experiences with them in light of Google's changes. Thanks again for having taken the time to respond, I truly appreciate it!
